Installing Prometheus from Source to Monitor a Kubernetes Cluster

Note: the deployment bundle formerly shipped with Prometheus-operator is now maintained as a separate project, kube-prometheus.

Environment:

- Prometheus Operator version: kube-prometheus 0.8.0 (the Prometheus Operator deployment bundle is now published as kube-prometheus)

- Kubernetes version: 1.20.0

Downloading the source and installing it manually is recommended over Helm, because many settings have to be changed by hand.

Introduction

About Kubernetes Operators

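With Kubernetes, managing and scaling web applications, mobile back ends and API services has become fairly simple. These applications are usually stateless, so basic Kubernetes API objects such as Deployment can scale them and recover them from failures without any extra work.

Stateful applications such as databases, caches and monitoring systems are a different challenge. Scaling and upgrading them correctly requires application-specific knowledge, as does reconfiguring them when data is lost or unavailable. The goal is to encode this operational knowledge in software, so that Kubernetes can run and manage complex applications correctly.

An Operator is such a piece of software. It extends the Kubernetes API through TPR (ThirdPartyResource, since superseded by CRD) and embeds application-specific knowledge, letting users create, configure and manage the application. Like Kubernetes' built-in resources, an Operator manages not a single instance but multiple instances across the cluster.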

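About Prometheus Operator

The Prometheus Operator for Kubernetes provides simple monitoring definitions for Kubernetes services. It also handles the deployment and management of Prometheus instances.

Once installed, Prometheus Operator provides the following features: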

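- Create/destroy: easily launch a Prometheus instance in a Kubernetes namespace, so that a specific application or team can readily use the Operator

- Simple configuration: configure the fundamentals of Prometheus, such as versions, persistence, retention policies and replicas, through native Kubernetes resources

- Target Services via labels: monitoring target configuration is generated automatically from familiar Kubernetes label queries, so there is no need to learn a Prometheus-specific configuration language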

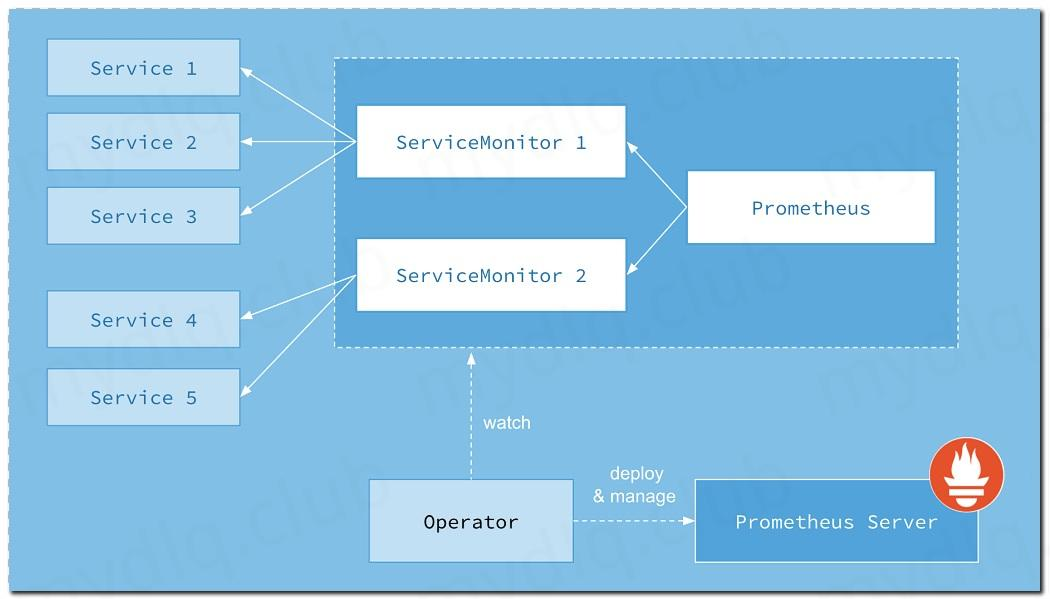

Prometheus Operator architecture

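- Operator: deploys and manages Prometheus Server according to Custom Resource Definitions (CRDs). It watches events on these custom resources and reacts to changes, and is the control center of the whole system

- Prometheus: the Prometheus resource declaratively describes the desired state of a Prometheus deployment

- Prometheus Server: the Prometheus Server cluster that the Operator deploys from the contents of a Prometheus custom resource. It can be thought of as the StatefulSets that manage the Prometheus Server cluster

- ServiceMonitor: another custom resource, describing a list of targets to be monitored by Prometheus. It selects the corresponding Service Endpoints by labels, and Prometheus Server scrapes metrics through the selected Services

- Service: Service resources front the metrics-serving Pods in the Kubernetes cluster, so that ServiceMonitors can select them for Prometheus Server to scrape. Put simply, they are the objects Prometheus monitors, e.g. a Node Exporter Service or a MySQL Exporter Service

- Alertmanager: also a custom resource type. The Operator deploys an Alertmanager cluster according to its description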
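To make the label-based selection concrete, here is a minimal sketch of a shell helper that prints a ServiceMonitor for a hypothetical exporter Service. The function name, the `app` label key, the default port name `metrics` and the 30s interval are illustrative assumptions, not values taken from kube-prometheus. The one hard rule it shows is that `endpoints[].port` refers to the *name* of a port on the selected Service, not its number.

```shell
#!/bin/sh
# Hypothetical helper: print a ServiceMonitor that selects Services by label.
# Arguments: <name> <namespace> <value of the "app" label> [port name]
servicemonitor_manifest() {
  local name="$1" namespace="$2" app_label="$3" port_name="${4:-metrics}"
  cat <<EOF
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: ${name}
  namespace: ${namespace}
spec:
  # Every Service in the listed namespaces carrying this label is selected.
  selector:
    matchLabels:
      app: ${app_label}
  namespaceSelector:
    matchNames:
    - ${namespace}
  endpoints:
  - port: ${port_name}   # name (not number) of a port defined in the Service
    interval: 30s
EOF
}
```

For example, `servicemonitor_manifest mysql-exporter default mysql-exporter | kubectl apply -f -` would ask Prometheus to scrape a Service labelled `app: mysql-exporter` in the `default` namespace. The Prometheus resource configured later in this article sets `serviceMonitorSelector: {}`, so it picks up ServiceMonitors without requiring any particular label on them.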

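Fetching the Prometheus Operator source

First pull the source from GitHub. The project ships ready-made Kubernetes YAML files, which are used here to install the bundled components such as Grafana and Prometheus.

Download the kube-prometheus source from GitHub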

```shell
[root@k8s01 deploy]# wget -O kube-prometheus-0.8.0.tar.gz https://github.com/coreos/kube-prometheus/archive/v0.8.0.tar.gz
```

Extract it

```shell
[root@k8s01 deploy]# tar xf kube-prometheus-0.8.0.tar.gz
[root@k8s01 deploy]# cd kube-prometheus-0.8.0/
[root@k8s01 kube-prometheus-0.8.0]# ls
build.sh docs experimental hack jsonnetfile.lock.json Makefile README.md tests
code-of-conduct.md example.jsonnet go.mod jsonnet kustomization.yaml manifests scripts test.sh
DCO examples go.sum jsonnetfile.json LICENSE NOTICE sync-to-internal-registry.jsonnet
```

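Sorting the files

All the manifests live in the project's manifests folder, and the Kubernetes YAML files are applied from there. Because they are all piled together, it is awkward to apply them component by component, so we sort them into subfolders.

Enter the manifests folder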

```shell
[root@k8s01 kube-prometheus-0.8.0]# cd manifests/
[root@k8s01 manifests]#
```

Create the folders and sort the YAML files into them

```shell
# Create the folders
[root@k8s01 manifests]# mkdir -p operator node-exporter alertmanager grafana kube-state-metrics prometheus serviceMonitor adapter
# Move the YAML files into the matching folders
[root@k8s01 manifests]# mv *-serviceMonitor* serviceMonitor/
[root@k8s01 manifests]# mv prometheus-operator* operator/
[root@k8s01 manifests]# mv grafana-* grafana/
[root@k8s01 manifests]# mv kube-state-metrics-* kube-state-metrics/
[root@k8s01 manifests]# mv alertmanager-* alertmanager/
[root@k8s01 manifests]# mv node-exporter-* node-exporter/
[root@k8s01 manifests]# mv prometheus-adapter* adapter/
[root@k8s01 manifests]# mv prometheus-* prometheus/
[root@k8s01 manifests]# mv kube-prometheus-prometheusRule.yaml kubernetes-prometheusRule.yaml prometheus/
```
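The same sorting can be wrapped in a function that is safe to rerun. This sketch mirrors the `mv` commands above, including their order: the broad `prometheus-*` pattern must come after the operator and adapter moves. `sort_manifests` and `move_existing` are hypothetical names. Patterns that match nothing are skipped instead of making `mv` fail. Files the function does not know about, such as the `blackbox-exporter-*` manifests and the `setup/` folder, stay where they are.

```shell
#!/bin/sh
# move_existing <target-dir> <paths...>: move only the paths that exist, so an
# unmatched glob (left literal by the shell) is silently skipped.
move_existing() {
  local target="$1" f
  shift
  for f in "$@"; do
    if [ -e "$f" ]; then
      mv -- "$f" "$target"/ || return 1
    fi
  done
  return 0
}

# sort_manifests [manifests-dir]: runs in a subshell so the cd does not leak.
sort_manifests() (
  cd "${1:-.}" || exit 1
  mkdir -p operator node-exporter alertmanager grafana \
    kube-state-metrics prometheus serviceMonitor adapter
  move_existing serviceMonitor *-serviceMonitor*
  move_existing operator prometheus-operator*
  move_existing grafana grafana-*
  move_existing kube-state-metrics kube-state-metrics-*
  move_existing alertmanager alertmanager-*
  move_existing node-exporter node-exporter-*
  move_existing adapter prometheus-adapter*
  # Must run last: "prometheus-*" would otherwise swallow operator/adapter files.
  move_existing prometheus prometheus-* \
    kube-prometheus-prometheusRule.yaml kubernetes-prometheusRule.yaml
)
```

Run it as `sort_manifests kube-prometheus-0.8.0/manifests`. A second run is a no-op.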

The resulting directory layout:

```shell
[root@k8s01 manifests]# tree
.
├── adapter
│   ├── prometheus-adapter-apiService.yaml
│   ├── prometheus-adapter-clusterRoleAggregatedMetricsReader.yaml
│   ├── prometheus-adapter-clusterRoleBindingDelegator.yaml
│   ├── prometheus-adapter-clusterRoleBinding.yaml
│   ├── prometheus-adapter-clusterRoleServerResources.yaml
│   ├── prometheus-adapter-clusterRole.yaml
│   ├── prometheus-adapter-configMap.yaml
│   ├── prometheus-adapter-deployment.yaml
│   ├── prometheus-adapter-roleBindingAuthReader.yaml
│   ├── prometheus-adapter-serviceAccount.yaml
│   └── prometheus-adapter-service.yaml
├── alertmanager
│   ├── alertmanager-alertmanager.yaml
│   ├── alertmanager-podDisruptionBudget.yaml
│   ├── alertmanager-prometheusRule.yaml
│   ├── alertmanager-secret.yaml
│   ├── alertmanager-serviceAccount.yaml
│   └── alertmanager-service.yaml
├── blackbox-exporter-clusterRoleBinding.yaml
├── blackbox-exporter-clusterRole.yaml
├── blackbox-exporter-configuration.yaml
├── blackbox-exporter-deployment.yaml
├── blackbox-exporter-serviceAccount.yaml
├── blackbox-exporter-service.yaml
├── grafana
│   ├── grafana-dashboardDatasources.yaml
│   ├── grafana-dashboardDefinitions.yaml
│   ├── grafana-dashboardSources.yaml
│   ├── grafana-deployment.yaml
│   ├── grafana-serviceAccount.yaml
│   └── grafana-service.yaml
├── kube-state-metrics
│   ├── kube-state-metrics-clusterRoleBinding.yaml
│   ├── kube-state-metrics-clusterRole.yaml
│   ├── kube-state-metrics-deployment.yaml
│   ├── kube-state-metrics-prometheusRule.yaml
│   ├── kube-state-metrics-serviceAccount.yaml
│   └── kube-state-metrics-service.yaml
├── node-exporter
│   ├── node-exporter-clusterRoleBinding.yaml
│   ├── node-exporter-clusterRole.yaml
│   ├── node-exporter-daemonset.yaml
│   ├── node-exporter-prometheusRule.yaml
│   ├── node-exporter-serviceAccount.yaml
│   └── node-exporter-service.yaml
├── operator
│   └── prometheus-operator-prometheusRule.yaml
├── prometheus
│   ├── kube-prometheus-prometheusRule.yaml
│   ├── kubernetes-prometheusRule.yaml
│   ├── prometheus-clusterRoleBinding.yaml
│   ├── prometheus-clusterRole.yaml
│   ├── prometheus-podDisruptionBudget.yaml
│   ├── prometheus-prometheusRule.yaml
│   ├── prometheus-prometheus.yaml
│   ├── prometheus-roleBindingConfig.yaml
│   ├── prometheus-roleBindingSpecificNamespaces.yaml
│   ├── prometheus-roleConfig.yaml
│   ├── prometheus-roleSpecificNamespaces.yaml
│   ├── prometheus-serviceAccount.yaml
│   └── prometheus-service.yaml
├── serviceMonitor
│   ├── alertmanager-serviceMonitor.yaml
│   ├── blackbox-exporter-serviceMonitor.yaml
│   ├── grafana-serviceMonitor.yaml
│   ├── kubernetes-serviceMonitorApiserver.yaml
│   ├── kubernetes-serviceMonitorCoreDNS.yaml
│   ├── kubernetes-serviceMonitorKubeControllerManager.yaml
│   ├── kubernetes-serviceMonitorKubelet.yaml
│   ├── kubernetes-serviceMonitorKubeScheduler.yaml
│   ├── kube-state-metrics-serviceMonitor.yaml
│   ├── node-exporter-serviceMonitor.yaml
│   ├── prometheus-adapter-serviceMonitor.yaml
│   ├── prometheus-operator-serviceMonitor.yaml
│   └── prometheus-serviceMonitor.yaml
└── setup
    ├── 0namespace-namespace.yaml
    ├── prometheus-operator-0alertmanagerConfigCustomResourceDefinition.yaml
    ├── prometheus-operator-0alertmanagerCustomResourceDefinition.yaml
    ├── prometheus-operator-0podmonitorCustomResourceDefinition.yaml
    ├── prometheus-operator-0probeCustomResourceDefinition.yaml
    ├── prometheus-operator-0prometheusCustomResourceDefinition.yaml
    ├── prometheus-operator-0prometheusruleCustomResourceDefinition.yaml
    ├── prometheus-operator-0servicemonitorCustomResourceDefinition.yaml
    ├── prometheus-operator-0thanosrulerCustomResourceDefinition.yaml
    ├── prometheus-operator-clusterRoleBinding.yaml
    ├── prometheus-operator-clusterRole.yaml
    ├── prometheus-operator-deployment.yaml
    ├── prometheus-operator-serviceAccount.yaml
    └── prometheus-operator-service.yaml

9 directories, 82 files
```

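Modifying the source YAML files

The images referenced in these YAML files cannot be pulled from within mainland China, so the image settings must be changed to addresses that can be pulled. In addition, Grafana, Prometheus and Alertmanager are exposed via NodePort, so the Service files of these three applications also need to be modified.

The image changes are omitted in this article.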
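Since the image changes are not shown, here is one possible approach as a sketch: rewrite the `quay.io/` and `k8s.gcr.io/` registry prefixes to a mirror. It assumes your mirror re-hosts each image under a single prefix while keeping the original repository path. `registry.example.com/mirror` is a placeholder. Images written without a registry host, such as Docker Hub's `grafana/grafana`, are left untouched. The substitution is not limited to `image:` lines, so it also rewrites image references that appear elsewhere, for example in container arguments.

```shell
#!/bin/sh
# Sketch: point registry prefixes at a mirror (the mirror layout is an assumption).
# mirror_images <mirror-prefix>: filter that reads YAML on stdin, writes stdout.
mirror_images() {
  sed -E "s#(quay\.io|k8s\.gcr\.io)/#${1}/#g"
}

# mirror_images_in_place <mirror-prefix> <file...>: rewrite each file in place
# via a temporary copy, so a failed sed leaves the original untouched.
mirror_images_in_place() {
  local mirror="$1" f
  shift
  for f in "$@"; do
    mirror_images "$mirror" < "$f" > "$f.tmp" && mv -- "$f.tmp" "$f"
  done
}
```

Run it from the manifests folder, for example `mirror_images_in_place registry.example.com/mirror *.yaml */*.yaml`. Then review the result with `grep -rn 'image:' .` before applying anything.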

Changing the Service ports

Modifying the Prometheus Service

Edit prometheus-service.yaml

```shell
[root@k8s01 manifests]# vim prometheus/prometheus-service.yaml
```

```yaml
# Change the Prometheus Service type to NodePort and set nodePort to 30089
apiVersion: v1
kind: Service
metadata:
  labels:
    prometheus: k8s
  name: prometheus-k8s
  namespace: monitoring
spec:
  type: NodePort
  ports:
  - name: web
    port: 9090
    targetPort: web
    nodePort: 30089
  selector:
    app: prometheus
    prometheus: k8s
  sessionAffinity: ClientIP
```

Modifying the Grafana Service

Edit grafana-service.yaml

```shell
[root@k8s01 manifests]# vim grafana/grafana-service.yaml
```

```yaml
# Change the Grafana Service type to NodePort and set nodePort to 30088
apiVersion: v1
kind: Service
metadata:
  labels:
    app: grafana
  name: grafana
  namespace: monitoring
spec:
  type: NodePort
  ports:
  - name: http
    port: 3000
    targetPort: http
    nodePort: 30088
  selector:
    app: grafana
```

Modifying the Alertmanager Service

Edit alertmanager-service.yaml

```shell
[root@k8s01 manifests]# vim alertmanager/alertmanager-service.yaml
```

```yaml
# Change the Alertmanager Service type to NodePort and set nodePort to 30093
apiVersion: v1
kind: Service
metadata:
  labels:
    alertmanager: main
  name: alertmanager-main
  namespace: monitoring
spec:
  type: NodePort
  ports:
  - name: web
    port: 9093
    targetPort: web
    nodePort: 30093
  selector:
    alertmanager: main
    app: alertmanager
  sessionAffinity: ClientIP
```
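Before applying, it can be worth checking that the chosen ports fall inside the cluster's NodePort range and do not collide. This sketch assumes the default kube-apiserver `--service-node-port-range` of 30000-32767. If your cluster uses a different range, override it with the hypothetical `NODEPORT_MIN`/`NODEPORT_MAX` variables.

```shell
#!/bin/sh
# check_node_ports <port...>: fail if a port is non-numeric, outside the
# NodePort range, or listed twice. Range defaults to the apiserver default.
check_node_ports() {
  local min="${NODEPORT_MIN:-30000}" max="${NODEPORT_MAX:-32767}" seen=" " p
  for p in "$@"; do
    case "$p" in
      ''|*[!0-9]*) echo "nodePort '$p' is not a number" >&2; return 1 ;;
    esac
    if [ "$p" -lt "$min" ] || [ "$p" -gt "$max" ]; then
      echo "nodePort $p is outside ${min}-${max}" >&2; return 1
    fi
    case "$seen" in
      *" $p "*) echo "nodePort $p is used more than once" >&2; return 1 ;;
    esac
    seen="$seen$p "
  done
  return 0
}
```

Usage from the manifests folder: `check_node_ports $(awk '/nodePort:/ {print $2}' prometheus/prometheus-service.yaml grafana/grafana-service.yaml alertmanager/alertmanager-service.yaml)`. It only compares these files with each other. It does not check ports already taken by other Services in the cluster.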

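Configuring persistent storage

By default, Prometheus stores its data in an emptyDir volume. The lifetime of emptyDir data is tied to the Pod, so when the Pod dies the data is lost. That is why earlier data disappears after the Pod is recreated, and why we configure persistent storage here.

Prometheus persistence

Edit prometheus-prometheus.yaml and add a storage section. managed-nfs-storage is the StorageClass available in this cluster; replace it with your own.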

```shell
[root@k8s01 manifests]# vim prometheus/prometheus-prometheus.yaml
```

```yaml
apiVersion: monitoring.coreos.com/v1
kind: Prometheus
metadata:
  labels:
    app.kubernetes.io/component: prometheus
    app.kubernetes.io/name: prometheus
    app.kubernetes.io/part-of: kube-prometheus
    app.kubernetes.io/version: 2.26.0
    prometheus: k8s
  name: k8s
  namespace: monitoring
spec:
  alerting:
    alertmanagers:
    - apiVersion: v2
      name: alertmanager-main
      namespace: monitoring
      port: web
  externalLabels: {}
  image: quay.io/prometheus/prometheus:v2.26.0
  nodeSelector:
    kubernetes.io/os: linux
  podMetadata:
    labels:
      app.kubernetes.io/component: prometheus
      app.kubernetes.io/name: prometheus
      app.kubernetes.io/part-of: kube-prometheus
      app.kubernetes.io/version: 2.26.0
  podMonitorNamespaceSelector: {}
  podMonitorSelector: {}
  probeNamespaceSelector: {}
  probeSelector: {}
  replicas: 2
  resources:
    requests:
      memory: 400Mi
  ruleSelector:
    matchLabels:
      prometheus: k8s
      role: alert-rules
  securityContext:
    fsGroup: 2000
    runAsNonRoot: true
    runAsUser: 1000
  serviceAccountName: prometheus-k8s
  serviceMonitorNamespaceSelector: {}
  serviceMonitorSelector: {}
  version: 2.26.0
  storage:                # added: persistent storage using a StorageClass
    volumeClaimTemplate:
      spec:
        storageClassName: managed-nfs-storage
        resources:
          requests:
            storage: 50Gi
```

Grafana persistence

Create grafana-pvc.yaml

Grafana is deployed as a Deployment, so we create a PVC for it in advance in grafana-pvc.yaml with the following configuration:

```shell
[root@k8s01 manifests]# vim grafana/grafana-pvc.yaml
```

```yaml
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: grafana
  namespace: monitoring                  # namespace set to monitoring
spec:
  storageClassName: managed-nfs-storage  # the StorageClass to use
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi
```

Then edit grafana-deployment.yaml so that the Deployment mounts the PVC above:

```shell
[root@k8s01 manifests]# vim grafana/grafana-deployment.yaml
```

```yaml
# Comment out the "grafana-storage" emptyDir entry under volumes and add the
# following, which mounts the PVC named grafana
......
      volumes:
      - name: grafana-storage       # new persistent storage entry
        persistentVolumeClaim:
          claimName: grafana        # name of the PVC created above
      #- emptyDir: {}               # old entry, commented out
      #  name: grafana-storage
      - name: grafana-datasources
        secret:
          secretName: grafana-datasources
      - configMap:
          name: grafana-dashboards
        name: grafana-dashboards
......
```
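After the manifests are applied, a claim stuck in `Pending` often points to a missing StorageClass (here `managed-nfs-storage`) or a provisioner that is not working. The sketch below reads the output of `kubectl get pvc --no-headers` and prints claims whose STATUS column is not `Bound`. It assumes the default column layout, where STATUS is the second field. `unbound_pvcs` is a hypothetical name.

```shell
#!/bin/sh
# unbound_pvcs: read `kubectl get pvc --no-headers` output on stdin and print
# the NAME of every claim whose STATUS (2nd column) is not "Bound".
# Exit status is 1 if at least one claim is not bound, 0 otherwise.
unbound_pvcs() {
  awk '$2 != "Bound" { print $1; bad = 1 } END { exit bad }'
}
```

Usage: `kubectl get pvc -n monitoring --no-headers | unbound_pvcs && echo "all claims bound"`.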

Modifying kube-state-metrics

Replace the image, otherwise the pull fails:

```shell
[root@k8s01 manifests]# vim kube-state-metrics/kube-state-metrics-deployment.yaml
```

```yaml
...
        - --telemetry-host=127.0.0.1
        - --telemetry-port=8082
        # image: k8s.gcr.io/kube-state-metrics/kube-state-metrics:2.0.0
        image: bitnami/kube-state-metrics:2.0.0
        name: kube-state-metrics
...
```

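Changing the Kubernetes configuration and creating the matching Services

Some Kubernetes settings must be changed beforehand. Otherwise no data can be collected from kube-scheduler and kube-controller-manager.

Modifying the kube-scheduler configuration

Edit /etc/kubernetes/manifests/kube-scheduler.yaml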

```shell
[root@k8s01 ~]# vim /etc/kubernetes/manifests/kube-scheduler.yaml
```

```yaml
# Change the bind-address in command to 0.0.0.0
......
spec:
  containers:
  - command:
    - kube-scheduler
    - --bind-address=0.0.0.0    # changed to 0.0.0.0
    - --kubeconfig=/etc/kubernetes/scheduler.conf
    - --leader-elect=true
......
```

Modifying the kube-controller-manager configuration

Edit /etc/kubernetes/manifests/kube-controller-manager.yaml

```shell
[root@k8s01 ~]# vim /etc/kubernetes/manifests/kube-controller-manager.yaml
```

```yaml
# Change the bind-address in command to 0.0.0.0
spec:
  containers:
  - command:
    - kube-controller-manager
    - --allocate-node-cidrs=true
    - --authentication-kubeconfig=/etc/kubernetes/controller-manager.conf
    - --authorization-kubeconfig=/etc/kubernetes/controller-manager.conf
    - --bind-address=0.0.0.0    # changed to 0.0.0.0
......
```
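The kubelet watches `/etc/kubernetes/manifests` and recreates a static Pod by itself once its file changes, so no manual restart is needed. To confirm that both edits are in place, here is a small sketch that greps a manifest for the flag. The function name is hypothetical, and it assumes the flag appears as its own list item under `command`, as in the files above.

```shell
#!/bin/sh
# has_open_bind_address <manifest>: succeed if a command/args list item in the
# file sets --bind-address=0.0.0.0 (a trailing space or comment is allowed).
has_open_bind_address() {
  grep -Eq -- '^[[:space:]]*-[[:space:]]*--bind-address=0\.0\.0\.0([[:space:]]|$)' "$1"
}
```

Run it on both files, e.g. `has_open_bind_address /etc/kubernetes/manifests/kube-scheduler.yaml || echo "not changed"`. Keep any backup copies outside that directory, because the kubelet tries to run every non-hidden file it finds there.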

Modifying the ServiceMonitors for kube-controller-manager and kube-scheduler

```shell
[root@k8s01 manifests]# vim serviceMonitor/kubernetes-serviceMonitorKubeScheduler.yaml
```

```yaml
...
    scheme: https
    tlsConfig:
      insecureSkipVerify: true
  # jobLabel: app.kubernetes.io/name
  jobLabel: k8s-app
  namespaceSelector:
    matchNames:
    - kube-system
  selector:
    matchLabels:
      # app.kubernetes.io/name: kube-scheduler
      k8s-app: kube-scheduler
...
```

```shell
[root@k8s01 manifests]# vim serviceMonitor/kubernetes-serviceMonitorKubeControllerManager.yaml
```

```yaml
...
    scheme: https
    tlsConfig:
      insecureSkipVerify: true
  # jobLabel: app.kubernetes.io/name
  jobLabel: k8s-app
  namespaceSelector:
    matchNames:
    - kube-system
  selector:
    matchLabels:
      # app.kubernetes.io/name: kube-controller-manager
      k8s-app: kube-controller-manager
```

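Creating Services for kube-scheduler and kube-controller-manager

Prometheus Operator's ServiceMonitor objects find their targets by selecting Services through labels. A cluster installed with kubeadm only creates Pods for kube-scheduler and kube-controller-manager, not Services, so Prometheus Operator cannot discover these two components. Create their Services manually by saving the following as kube-scheduler-controller.yaml: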

```yaml
apiVersion: v1
kind: Service
metadata:
  namespace: kube-system
  name: kube-controller-manager
  labels:
    k8s-app: kube-controller-manager
spec:
  selector:
    component: kube-controller-manager
  type: ClusterIP
  clusterIP: None
  ports:
  # The port name must match the "port" of the ServiceMonitor endpoints
  # (https-metrics in the kube-prometheus 0.8.0 manifests; check your copy).
  - name: https-metrics
    port: 10257
    targetPort: 10257
    protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  namespace: kube-system
  name: kube-scheduler
  labels:
    k8s-app: kube-scheduler
spec:
  selector:
    component: kube-scheduler
  type: ClusterIP
  clusterIP: None
  ports:
  - name: https-metrics   # must match the ServiceMonitor endpoint port name
    port: 10259
    targetPort: 10259
    protocol: TCP
```

```shell
[root@k8s01 manifests]# kubectl apply -f kube-scheduler-controller.yaml
service/kube-controller-manager created
service/kube-scheduler created
[root@k8s01 manifests]# kubectl get -n kube-system svc
NAME                      TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)                  AGE
kube-controller-manager   ClusterIP   None            <none>        10252/TCP                3s
kube-dns                  ClusterIP   10.96.0.10      <none>        53/UDP,53/TCP,9153/TCP   41d
kube-scheduler            ClusterIP   None            <none>        10251/TCP                3s
metrics-server            ClusterIP   10.96.227.107   <none>        443/TCP                  41d
```

The PORT(S) values in this output (10252/TCP, 10251/TCP) do not match the ports in the manifest. With the manifest as written, they read 10257/TCP for kube-controller-manager and 10259/TCP for kube-scheduler.

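If the cluster was deployed from binaries, you also have to create matching Endpoints objects that register the two components in the cluster and expose them through the Services. Only then can Prometheus monitor them.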
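For that case, here is a sketch of a generator for such an Endpoints object. Several rules apply:

- The Endpoints object must have the same name and namespace as the Service.
- Its port name must match the Service's port name.
- The Service itself must be created *without* a `selector`. Otherwise the endpoints controller manages the object and overwrites it.

The function name and the IPs in the usage example are placeholders. The `k8s-app` label mirrors the Services above.

```shell
#!/bin/sh
# endpoints_manifest <service-name> <port> <port-name> <ip...>
# Prints a kube-system Endpoints object listing the given control-plane IPs.
endpoints_manifest() {
  local name="$1" port="$2" port_name="$3" ip
  shift 3
  cat <<EOF
apiVersion: v1
kind: Endpoints
metadata:
  name: ${name}
  namespace: kube-system
  labels:
    k8s-app: ${name}
subsets:
- addresses:
EOF
  for ip in "$@"; do
    printf '  - ip: %s\n' "$ip"
  done
  cat <<EOF
  ports:
  - name: ${port_name}
    port: ${port}
    protocol: TCP
EOF
}
```

For example, `endpoints_manifest kube-scheduler 10259 https-metrics 192.0.2.11 192.0.2.12 | kubectl apply -f -`. Pass whatever port name your Service actually declares.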

Installing Prometheus Operator

Run all of the following from the manifests directory.

Create the namespace and CRDs

```shell
[root@k8s01 manifests]# kubectl apply -f setup/
```

Install the Operator

```shell
[root@k8s01 manifests]# kubectl apply -f operator/
```

Check the Pods and wait until they are up before moving on:

```shell
[root@k8s01 manifests]# kubectl get -n monitoring po
NAME                                   READY   STATUS    RESTARTS   AGE
prometheus-operator-7775c66ccf-nctwk   2/2     Running   0          74s
```
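`kubectl wait` fails immediately when no Pod matches yet, and the CRDs created from `setup/` take a moment to be served. A small retry helper is handy at these steps. This is a sketch, and `retry_until` is a hypothetical name.

```shell
#!/bin/sh
# retry_until <attempts> <delay-seconds> <command...>
# Runs the command until it succeeds or the attempts are used up.
retry_until() {
  local attempts="$1" delay="$2" i=1
  shift 2
  while :; do
    "$@" && return 0
    [ "$i" -ge "$attempts" ] && break
    sleep "$delay"
    i=$((i + 1))
  done
  echo "gave up after $attempts attempt(s): $*" >&2
  return 1
}
```

Two example uses:

- `retry_until 60 5 kubectl get servicemonitors --all-namespaces` waits for the ServiceMonitor CRD after `kubectl apply -f setup/`.
- `retry_until 30 10 kubectl -n monitoring wait --for=condition=Ready pod -l app.kubernetes.io/name=prometheus-operator --timeout=10s` waits for the Operator Pod. The label selector is an assumption, so check it first with `kubectl get -n monitoring po --show-labels`.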

Installing the other components

```shell
[root@k8s01 manifests]# kubectl apply -f adapter/
apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io configured
clusterrole.rbac.authorization.k8s.io/prometheus-adapter created
clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader configured
clusterrolebinding.rbac.authorization.k8s.io/prometheus-adapter created
clusterrolebinding.rbac.authorization.k8s.io/resource-metrics:system:auth-delegator created
clusterrole.rbac.authorization.k8s.io/resource-metrics-server-resources created
configmap/adapter-config created
deployment.apps/prometheus-adapter created
rolebinding.rbac.authorization.k8s.io/resource-metrics-auth-reader created
service/prometheus-adapter created
serviceaccount/prometheus-adapter created
```

```shell
[root@k8s01 manifests]# kubectl apply -f alertmanager/
alertmanager.monitoring.coreos.com/main created
poddisruptionbudget.policy/alertmanager-main created
prometheusrule.monitoring.coreos.com/alertmanager-main-rules created
secret/alertmanager-main created
service/alertmanager-main created
serviceaccount/alertmanager-main created
```

```shell
[root@k8s01 manifests]# kubectl apply -f node-exporter/
clusterrole.rbac.authorization.k8s.io/node-exporter created
clusterrolebinding.rbac.authorization.k8s.io/node-exporter created
daemonset.apps/node-exporter created
prometheusrule.monitoring.coreos.com/node-exporter-rules created
service/node-exporter created
serviceaccount/node-exporter created
```

```shell
[root@k8s01 manifests]# kubectl apply -f kube-state-metrics/
clusterrole.rbac.authorization.k8s.io/kube-state-metrics created
clusterrolebinding.rbac.authorization.k8s.io/kube-state-metrics created
deployment.apps/kube-state-metrics created
prometheusrule.monitoring.coreos.com/kube-state-metrics-rules created
service/kube-state-metrics created
serviceaccount/kube-state-metrics created
```

```shell
[root@k8s01 manifests]# kubectl apply -f grafana/
secret/grafana-datasources created
configmap/grafana-dashboard-apiserver created
configmap/grafana-dashboard-cluster-total created
configmap/grafana-dashboard-controller-manager created
configmap/grafana-dashboard-k8s-resources-cluster created
configmap/grafana-dashboard-k8s-resources-namespace created
configmap/grafana-dashboard-k8s-resources-node created
configmap/grafana-dashboard-k8s-resources-pod created
configmap/grafana-dashboard-k8s-resources-workload created
configmap/grafana-dashboard-k8s-resources-workloads-namespace created
configmap/grafana-dashboard-kubelet created
configmap/grafana-dashboard-namespace-by-pod created
configmap/grafana-dashboard-namespace-by-workload created
configmap/grafana-dashboard-node-cluster-rsrc-use created
configmap/grafana-dashboard-node-rsrc-use created
configmap/grafana-dashboard-nodes created
configmap/grafana-dashboard-persistentvolumesusage created
configmap/grafana-dashboard-pod-total created
configmap/grafana-dashboard-prometheus-remote-write created
configmap/grafana-dashboard-prometheus created
configmap/grafana-dashboard-proxy created
configmap/grafana-dashboard-scheduler created
configmap/grafana-dashboard-statefulset created
configmap/grafana-dashboard-workload-total created
configmap/grafana-dashboards created
deployment.apps/grafana created
persistentvolumeclaim/grafana created
service/grafana created
serviceaccount/grafana created
```

```shell
[root@k8s01 manifests]# kubectl apply -f prometheus/
prometheusrule.monitoring.coreos.com/kube-prometheus-rules created
prometheusrule.monitoring.coreos.com/kubernetes-monitoring-rules created
clusterrole.rbac.authorization.k8s.io/prometheus-k8s created
clusterrolebinding.rbac.authorization.k8s.io/prometheus-k8s created
poddisruptionbudget.policy/prometheus-k8s created
prometheus.monitoring.coreos.com/k8s created
prometheusrule.monitoring.coreos.com/prometheus-k8s-prometheus-rules created
rolebinding.rbac.authorization.k8s.io/prometheus-k8s-config created
rolebinding.rbac.authorization.k8s.io/prometheus-k8s created
rolebinding.rbac.authorization.k8s.io/prometheus-k8s created
rolebinding.rbac.authorization.k8s.io/prometheus-k8s created
role.rbac.authorization.k8s.io/prometheus-k8s-config created
role.rbac.authorization.k8s.io/prometheus-k8s created
role.rbac.authorization.k8s.io/prometheus-k8s created
role.rbac.authorization.k8s.io/prometheus-k8s created
service/prometheus-k8s created
serviceaccount/prometheus-k8s created
```

```shell
[root@k8s01 manifests]# kubectl apply -f serviceMonitor/
servicemonitor.monitoring.coreos.com/alertmanager created
servicemonitor.monitoring.coreos.com/blackbox-exporter created
servicemonitor.monitoring.coreos.com/grafana created
servicemonitor.monitoring.coreos.com/kube-state-metrics created
servicemonitor.monitoring.coreos.com/kube-apiserver created
servicemonitor.monitoring.coreos.com/coredns created
servicemonitor.monitoring.coreos.com/kube-controller-manager created
servicemonitor.monitoring.coreos.com/kube-scheduler created
servicemonitor.monitoring.coreos.com/kubelet created
servicemonitor.monitoring.coreos.com/node-exporter created
servicemonitor.monitoring.coreos.com/prometheus-adapter created
servicemonitor.monitoring.coreos.com/prometheus-operator created
servicemonitor.monitoring.coreos.com/prometheus-k8s created
```

Check the Pod status

```shell
[root@k8s01 manifests]# kubectl get -n monitoring po
NAME                                   READY   STATUS    RESTARTS   AGE
alertmanager-main-0                    2/2     Running   0          8m56s
alertmanager-main-1                    2/2     Running   0          8m56s
alertmanager-main-2                    2/2     Running   0          8m56s
grafana-747dd6fc85-khqx6               1/1     Running   0          8m15s
kube-state-metrics-6cb48468f8-z8b75    3/3     Running   0          2m48s
node-exporter-5xgww                    2/2     Running   0          8m33s
node-exporter-q78qs                    2/2     Running   0          8m33s
node-exporter-s7lf4                    2/2     Running   0          8m33s
prometheus-adapter-59df95d9f5-srtb5    1/1     Running   0          9m8s
prometheus-adapter-59df95d9f5-ttwz8    1/1     Running   0          9m8s
prometheus-k8s-0                       2/2     Running   1          7m46s
prometheus-k8s-1                       2/2     Running   1          7m46s
prometheus-operator-7775c66ccf-nctwk   2/2     Running   0          11m
```
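Rather than scanning this list by eye, the check can be scripted. The sketch below reads `kubectl get pods --no-headers` output and prints every Pod that is not `Running` or whose READY count is incomplete (for example `1/2`). It assumes the default column order NAME, READY, STATUS. It would also flag completed Job Pods, which this namespace does not normally contain. `unready_pods` is a hypothetical name.

```shell
#!/bin/sh
# unready_pods: read `kubectl get pods --no-headers` on stdin and print the NAME
# of each Pod that is not Running or has fewer ready containers than total.
# Exit status is 1 if any such Pod was found.
unready_pods() {
  awk '{
    split($2, ready, "/")
    if ($3 != "Running" || ready[1] != ready[2]) { print $1; bad = 1 }
  }
  END { exit bad }'
}
```

Usage: `kubectl get -n monitoring po --no-headers | unready_pods || echo "some pods are not ready yet"`.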

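Troubleshooting

If you hit the following error during deployment, see https://github.com/helm/charts/issues/19928. The messages come from Helm. They typically appear when admission webhooks from an earlier Helm installation of prometheus-operator are still registered: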

```
manifest_sorter.go:175: info: skipping unknown hook: "crd-install"
manifest_sorter.go:175: info: skipping unknown hook: "crd-install"
manifest_sorter.go:175: info: skipping unknown hook: "crd-install"
manifest_sorter.go:175: info: skipping unknown hook: "crd-install"
manifest_sorter.go:175: info: skipping unknown hook: "crd-install"
Error: Internal error occurred: failed calling webhook "prometheusrulemutate.monitoring.coreos.com": Post https://prometheus-operator-operator.monitoring.svc:443/admission-prometheusrules/mutate?timeout=30s: no service port 'ƻ' found for service "prometheus-operator-operator"
```

```shell
# The leftover ValidatingWebhookConfiguration and MutatingWebhookConfiguration objects must be deleted
[root@k8s01 manifests]# kubectl get validatingwebhookconfigurations.admissionregistration.k8s.io
# Delete the prometheus-operator entries listed
[root@k8s01 manifests]# kubectl get MutatingWebhookConfiguration
# Delete the prometheus-operator entries listed
```
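Deleting *every* webhook configuration would also remove admission webhooks that other components in the cluster rely on. A more cautious sketch filters the names first. Unless `APPLY=1` is set, it only prints what it would delete. It assumes the leftover objects contain `prometheus-operator` in their names, which fits the service name in the error above. Adjust the pattern if yours differ. Both function names are hypothetical.

```shell
#!/bin/sh
# filter_webhooks <pattern>: keep only lines of `kubectl get ... -o name`
# output that match the pattern; no match is not treated as an error.
filter_webhooks() {
  grep -- "$1" || true
}

# delete_matching_webhooks [pattern]: dry run by default; set APPLY=1 to delete.
delete_matching_webhooks() {
  local pattern="${1:-prometheus-operator}" kind names
  for kind in validatingwebhookconfigurations mutatingwebhookconfigurations; do
    names=$(kubectl get "$kind" -o name | filter_webhooks "$pattern")
    [ -n "$names" ] || continue
    if [ "${APPLY:-0}" = 1 ]; then
      kubectl delete $names   # unquoted on purpose: one TYPE/NAME per word
    else
      echo "would delete:" $names
    fi
  done
}
```

Run `delete_matching_webhooks` once to review the list, then `APPLY=1 delete_matching_webhooks` to delete.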

Viewing Prometheus and Grafana

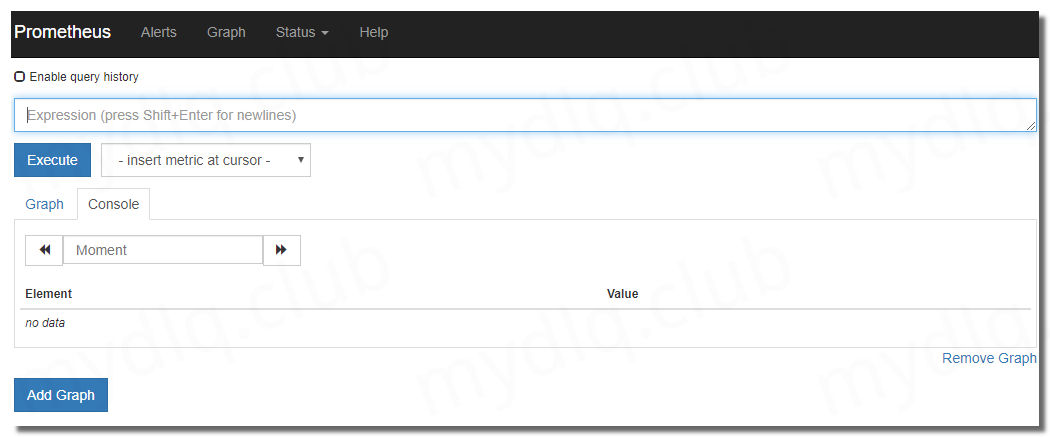

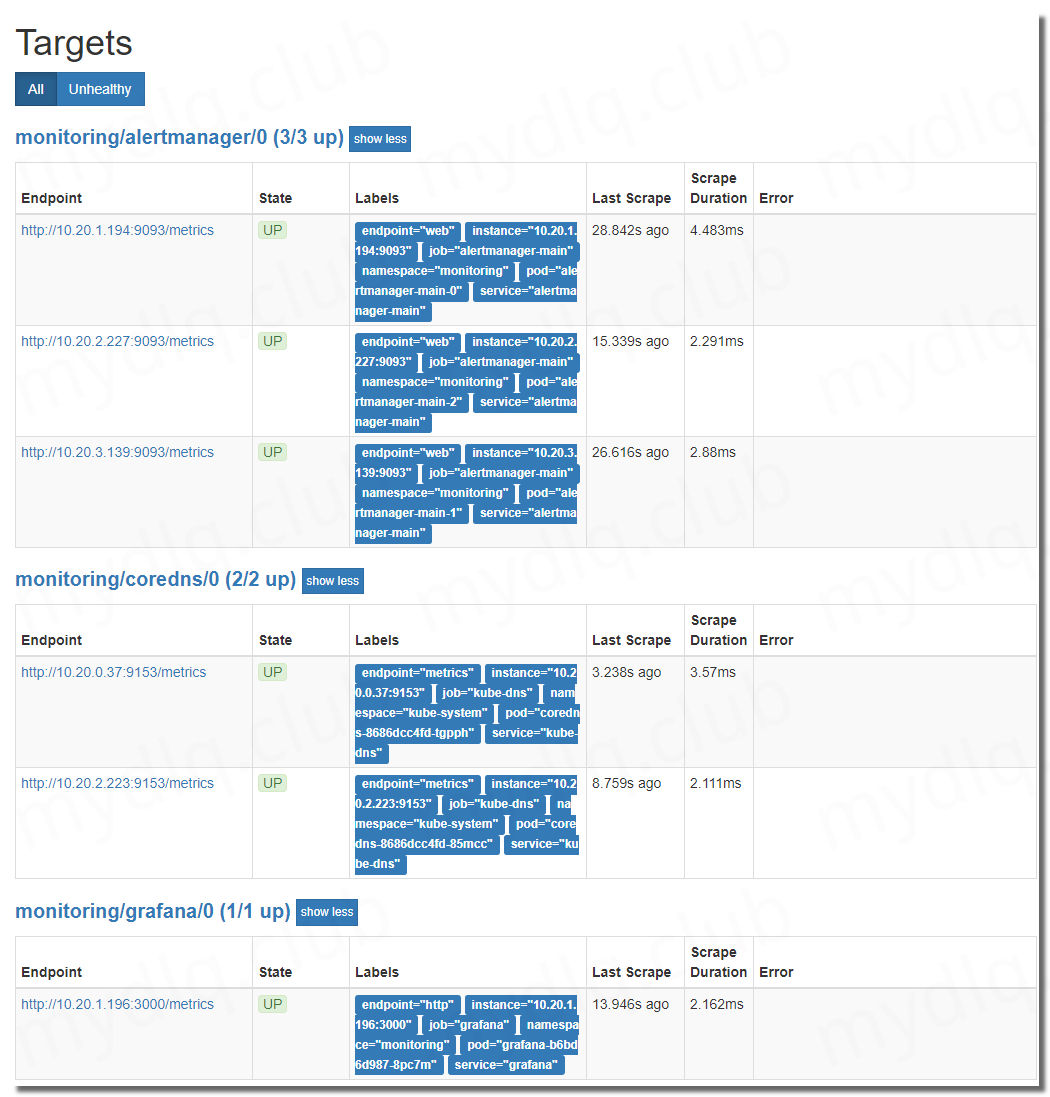

Viewing Prometheus

Open http://NodeIP:30089 and check the scrape targets to see whether any of them report errors.

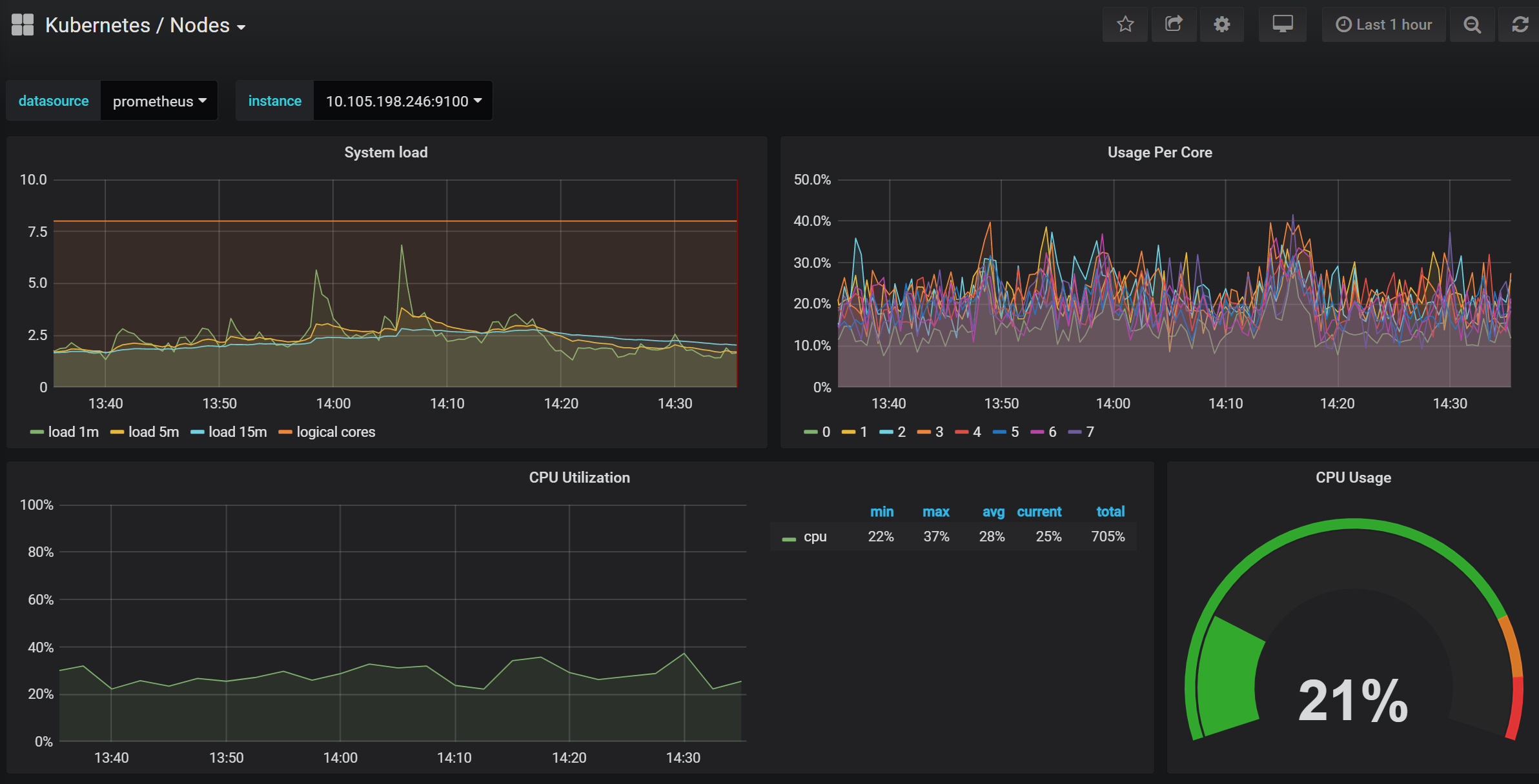

Viewing Grafana

Open http://NodeIP:30088 and check that the Grafana dashboards display the Kubernetes cluster correctly.

- Default username: admin

- Default password: admin
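Besides the web UIs, all three components expose health endpoints, which makes a quick scripted check possible. Prometheus and Alertmanager answer on `/-/ready`, and Grafana on `/api/health`. The sketch below assumes the NodePorts chosen earlier (30089, 30093, 30088) and plain HTTP. `health_urls` and `check_health` are hypothetical names.

```shell
#!/bin/sh
# health_urls <node-ip>: print the health-check URL of each UI exposed via NodePort.
health_urls() {
  printf 'http://%s:30089/-/ready\n' "$1"     # Prometheus
  printf 'http://%s:30093/-/ready\n' "$1"     # Alertmanager
  printf 'http://%s:30088/api/health\n' "$1"  # Grafana
}

# check_health <node-ip>: curl each URL; -f makes HTTP errors count as failures.
check_health() {
  local rc=0 url
  for url in $(health_urls "$1"); do
    if curl -fsS -o /dev/null --max-time 5 "$url"; then
      echo "OK   $url"
    else
      echo "FAIL $url"; rc=1
    fi
  done
  return "$rc"
}
```

Usage: `check_health 192.0.2.10`, replacing the address with one of your node IPs.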
