Collecting Kubernetes cluster logs with EFK

Environment

- k8s version: 1.20.0

- EFK version: 7.6.2

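Most Pods in Kubernetes write their logs to the console (stdout/stderr). On each host, /var/log/pods/ holds a log directory for every Pod running on that node. The entries under this directory are usually symbolic links: Kubernetes can use several container runtimes through the CRI, so the log files themselves are not stored there. The directories involved are: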

- containers log: /var/log/containers/*.log

- docker log: /var/lib/docker/containers/*/*.log

- pod log: /var/log/pods
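
The file names under /var/log/containers encode which Pod, namespace, and container a log belongs to, in the form `<pod>_<namespace>_<container>-<container id>.log`. A minimal Python sketch of splitting such a name, assuming a 64-character hexadecimal container ID; the function name and the sample file name are made up for illustration:

```python
import re

# Assumed layout: <pod>_<namespace>_<container>-<64 hex chars>.log
LOG_NAME = re.compile(
    r"^(?P<pod>[^_]+)_(?P<namespace>[^_]+)_(?P<container>.+)"
    r"-(?P<container_id>[0-9a-f]{64})\.log$"
)

def parse_container_log_name(filename):
    """Return pod, namespace, container, and container ID, or None if the name does not match."""
    match = LOG_NAME.match(filename)
    return match.groupdict() if match else None

# Hypothetical file name; the container ID is a placeholder
sample = "oms-center-746c794bf6-n45h9_default_oms-center-" + "a" * 64 + ".log"
info = parse_container_log_name(sample)
print(info["pod"], info["namespace"], info["container"])
```

This is the same information the Fluentd `kubernetes_metadata` filter later relies on when it enriches each record.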

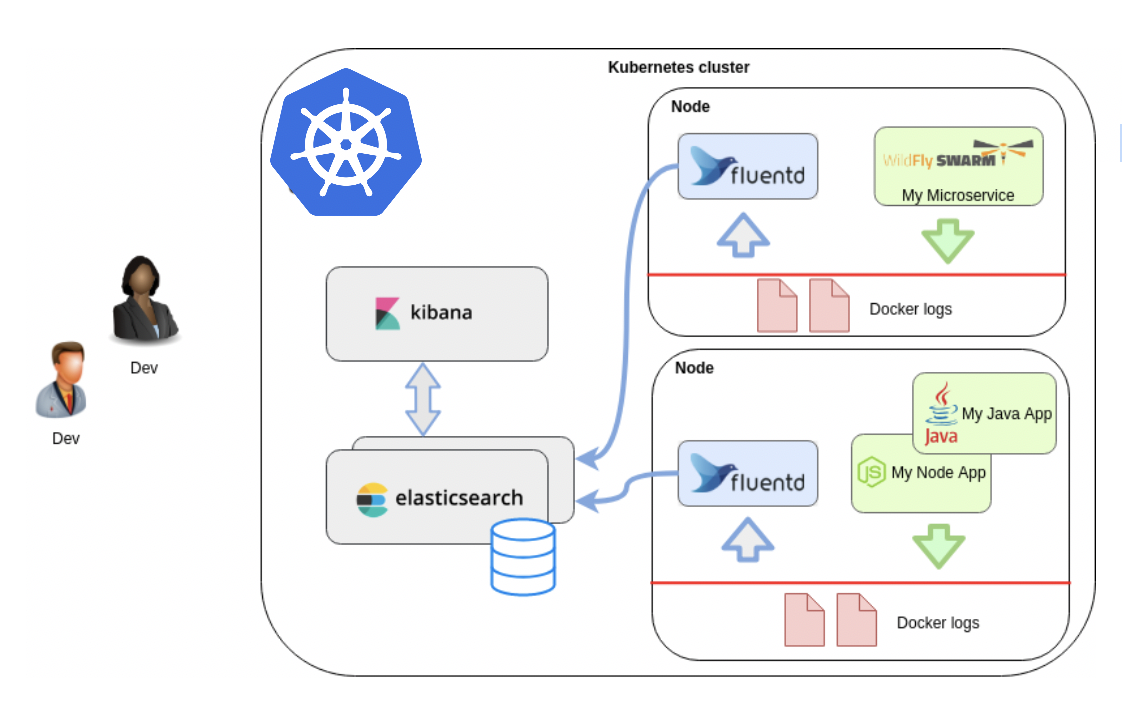

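These console logs are collected by a fluentd container that a DaemonSet runs on every node. Fluentd writes them to the log store (Elasticsearch), and Kibana is used to display and query them. This architecture is known as EFK.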

Deploying the Elasticsearch cluster

[root@k8s01 efk]# vim elasticsearch.yaml

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: es
  namespace: logging  # this namespace must already exist (kubectl create namespace logging)
spec:
  serviceName: elasticsearch
  replicas: 3
  selector:
    matchLabels:
      app: elasticsearch
  template:
    metadata:
      labels:
        app: elasticsearch
    spec:
      initContainers:
      # Elasticsearch requires a raised vm.max_map_count on the host kernel
      - name: increase-vm-max-map
        image: busybox
        imagePullPolicy: IfNotPresent
        command: ["sysctl", "-w", "vm.max_map_count=262144"]
        securityContext:
          privileged: true
      # Note: ulimit here only changes the limit of this init container's own shell
      - name: increase-fd-ulimit
        image: busybox
        imagePullPolicy: IfNotPresent
        command: ["sh", "-c", "ulimit -n 65536"]
        securityContext:
          privileged: true
      containers:
      - name: elasticsearch
        image: docker.elastic.co/elasticsearch/elasticsearch:7.6.2
        imagePullPolicy: IfNotPresent
        ports:
        - name: rest
          containerPort: 9200
        - name: inter
          containerPort: 9300
        resources:
          limits:
            cpu: 1000m
          requests:
            cpu: 1000m
        volumeMounts:
        - name: data
          mountPath: /usr/share/elasticsearch/data
        - name: localtime
          mountPath: /etc/localtime
        env:
        - name: cluster.name
          value: k8s-logs
        - name: node.name
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: cluster.initial_master_nodes
          value: "es-0,es-1,es-2"
        # Deprecated since Elasticsearch 7.0 and ignored there;
        # cluster.initial_master_nodes above handles cluster bootstrapping
        - name: discovery.zen.minimum_master_nodes
          value: "2"
        - name: discovery.seed_hosts
          value: "elasticsearch"
        - name: ES_JAVA_OPTS
          value: "-Xms512m -Xmx512m"
        - name: network.host
          value: "0.0.0.0"
      volumes:
      - name: localtime
        hostPath:
          path: /etc/localtime
  volumeClaimTemplates:
  - metadata:
      name: data
      labels:
        app: elasticsearch
    spec:
      accessModes: [ "ReadWriteOnce" ]
      storageClassName: managed-nfs-storage
      resources:
        requests:
          storage: 50Gi
---
kind: Service
apiVersion: v1
metadata:
  name: elasticsearch
  namespace: logging
  labels:
    app: elasticsearch
spec:
  selector:
    app: elasticsearch
  clusterIP: None
  ports:
  - port: 9200
    name: rest
  - port: 9300
    name: inter-node
---
kind: Service
apiVersion: v1
metadata:
  name: elasticsearch-svc
  namespace: logging
  labels:
    app: elasticsearch
spec:
  selector:
    app: elasticsearch
  ports:
  - port: 9200
    name: rest
    nodePort: 30920
  - port: 9300
    name: inter-node
    nodePort: 30930
  type: NodePort

[root@k8s01 efk]# kubectl apply -f elasticsearch.yaml

statefulset.apps/es created

service/elasticsearch created

service/elasticsearch-svc created

[root@k8s01 efk]# kubectl get -n logging po

NAME READY STATUS RESTARTS AGE

es-0 1/1 Running 0 2m59s

es-1 1/1 Running 0 2m53s

es-2 1/1 Running 0 2m47s

[root@k8s01 efk]# kubectl get -n logging svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

elasticsearch ClusterIP None <none> 9200/TCP,9300/TCP 44s

elasticsearch-svc NodePort 10.98.224.35 <none> 9200:30920/TCP,9300:30930/TCP 44s

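Once the deployment finishes, you can check that the Elasticsearch cluster is running properly by calling its REST API. The following command forwards local port 9200 to the same port on an Elasticsearch node (for example es-0):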

[root@k8s01 efk]# kubectl port-forward es-0 9200:9200 --namespace=logging

Forwarding from 127.0.0.1:9200 -> 9200

Handling connection for 9200

In another terminal window, run the following request:

[root@k8s01 ~]# curl http://localhost:9200/_cluster/state?pretty

{

"cluster_name" : "k8s-logs",

"cluster_uuid" : "IbxBYHMyQQO9nCR9w-NJcg",

"version" : 20,

"state_uuid" : "r6ug0GQpRdy3trB2xcFjtw",

"master_node" : "omW97bkMQaexJgBRCOeADg",

"blocks" : { },

"nodes" : {

"omW97bkMQaexJgBRCOeADg" : {

"name" : "es-0",

"ephemeral_id" : "9czxxr4vRaWuq1aFQ-WsTQ",

"transport_address" : "10.244.2.170:9300",

"attributes" : {

"ml.machine_memory" : "16246362112",

"xpack.installed" : "true",

"ml.max_open_jobs" : "20"

}

},

...

This output indicates that the Elasticsearch cluster was created successfully with three nodes, es-0, es-1, and es-2, and that the current master node is es-0.
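
To pull just the node list and the elected master out of a `_cluster/state` response, a small Python sketch can help. It works on an already-decoded JSON document; the helper name `summarize_cluster_state` is hypothetical, and the sample is a trimmed copy of the response shown above.

```python
import json

def summarize_cluster_state(state):
    """Return (sorted node names, name of the elected master) from a _cluster/state document."""
    nodes = state.get("nodes", {})
    names = sorted(node["name"] for node in nodes.values())
    # master_node holds a node ID, so look the name up in the nodes map
    master = nodes.get(state.get("master_node"), {}).get("name")
    return names, master

# Trimmed sample based on the response above (only es-0 is visible there)
sample = json.loads("""
{
  "cluster_name": "k8s-logs",
  "master_node": "omW97bkMQaexJgBRCOeADg",
  "nodes": {
    "omW97bkMQaexJgBRCOeADg": {"name": "es-0", "transport_address": "10.244.2.170:9300"}
  }
}
""")

names, master = summarize_cluster_state(sample)
print(names, master)
```

Against the full response, `names` would be expected to list all three nodes.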

Deploying Kibana

[root@k8s01 efk]# vim kibana.yaml

apiVersion: v1
kind: Service
metadata:
  name: kibana
  namespace: logging
  labels:
    app: kibana
spec:
  ports:
  - port: 5601
    nodePort: 30561
  type: NodePort
  selector:
    app: kibana
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kibana
  namespace: logging
  labels:
    app: kibana
spec:
  selector:
    matchLabels:
      app: kibana
  template:
    metadata:
      labels:
        app: kibana
    spec:
      containers:
      - name: kibana
        image: docker.elastic.co/kibana/kibana:7.6.2
        resources:
          limits:
            cpu: 1000m
          requests:
            cpu: 1000m
        env:
        - name: ELASTICSEARCH_HOSTS
          value: http://elasticsearch:9200
        ports:
        - containerPort: 5601

[root@k8s01 efk]# kubectl apply -f kibana.yaml

service/kibana created

deployment.apps/kibana created

[root@k8s01 efk]# kubectl get -n logging po

NAME READY STATUS RESTARTS AGE

es-0 1/1 Running 0 10m

es-1 1/1 Running 0 10m

es-2 1/1 Running 0 10m

kibana-7d49b9f974-5zddw 1/1 Running 0 42s

[root@k8s01 efk]# kubectl get -n logging svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

elasticsearch ClusterIP None <none> 9200/TCP,9300/TCP 8m16s

elasticsearch-svc NodePort 10.98.224.35 <none> 9200:30920/TCP,9300:30930/TCP 8m16s

kibana NodePort 10.97.245.59 <none> 5601:30561/TCP 2m2s

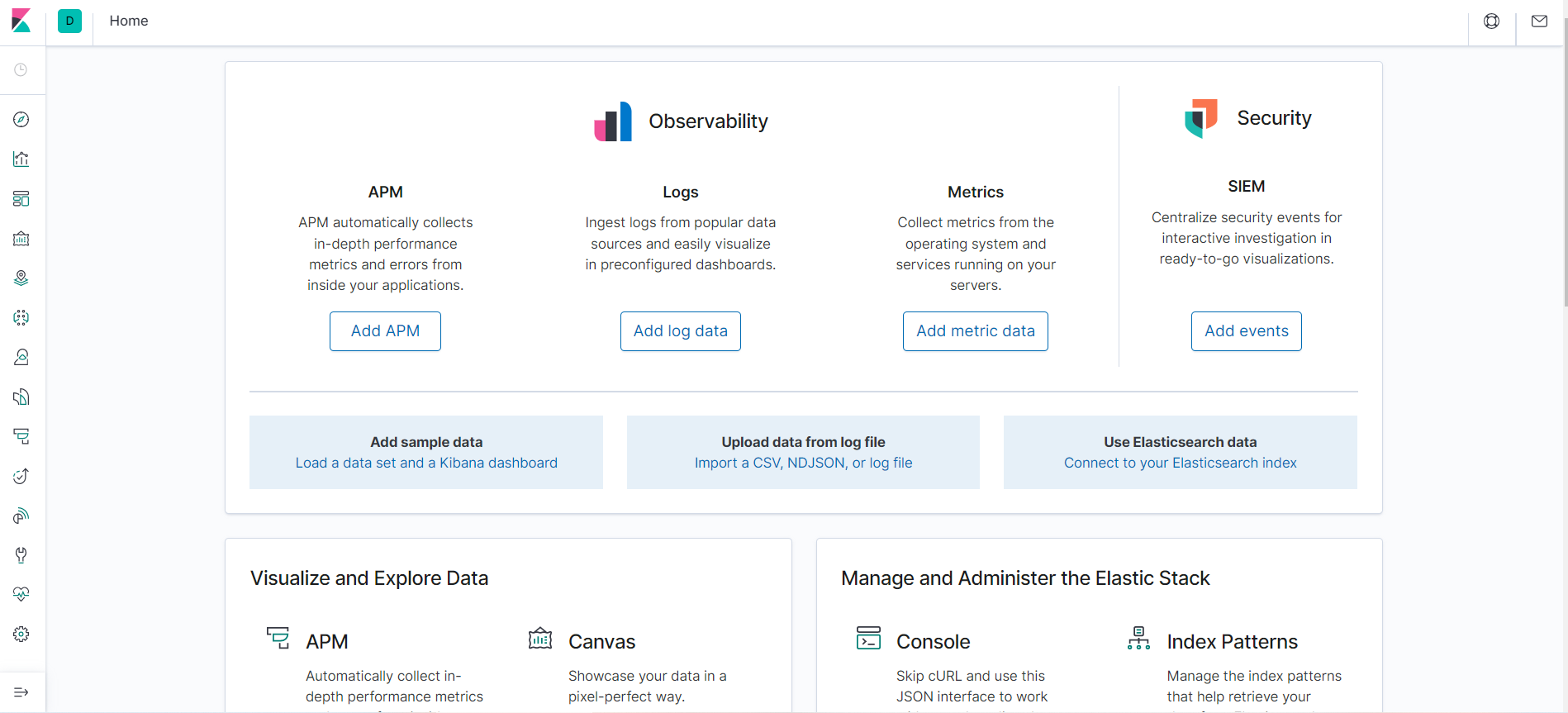

Kibana is exposed through a NodePort service, so it can be opened in a browser at http://<any-node-IP>:30561

Deploying Fluentd

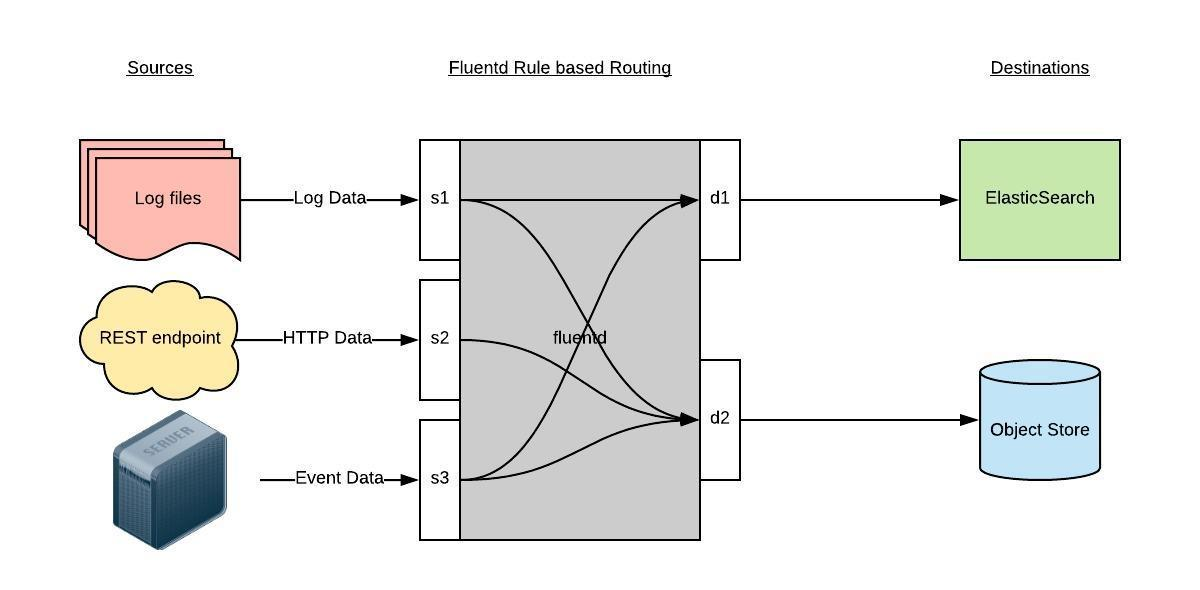

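Fluentd is an efficient log aggregator written in Ruby, and it scales well. It uses relatively few resources. Fluent Bit is even more lightweight and uses fewer resources still, but its plugin ecosystem is not as rich as Fluentd's. Overall, Fluentd is more mature and more widely used, so it is the log collector used here.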

How it works

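Fluentd scrapes log data from a given set of sources, processes it (converting it into a structured format), and forwards it to other services such as Elasticsearch or object storage. Fluentd supports more than 300 log storage and analysis services. The main steps are: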

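- First, Fluentd reads data from multiple log sources

- It structures and tags that data

- It then sends the data to one or more destination services according to the matching tags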
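
The last step, routing by tag, can be sketched in Python. This is a simplified model rather than Fluentd's own code: it handles only `*` (exactly one tag part) and `**` (zero or more tag parts), and the helper names and output labels are hypothetical. As in Fluentd, the first matching rule wins.

```python
import re

def tag_pattern_to_regex(pattern):
    """Translate a Fluentd-style tag pattern into a compiled regular expression.

    Simplified: a leading `**` followed by more parts is not handled,
    and neither is the `{a,b}` syntax.
    """
    regex = ""
    for i, part in enumerate(pattern.split(".")):
        if part == "**":
            # zero or more dot-separated tag parts
            regex += r"(?:\.[^.]+)*" if i > 0 else r"(?:[^.]+(?:\.[^.]+)*)?"
        else:
            piece = r"[^.]+" if part == "*" else re.escape(part)
            regex += (r"\." if i > 0 else "") + piece
    return re.compile("^" + regex + "$")

def route(tag, rules):
    """Return the output of the first rule whose pattern matches the tag, or None."""
    for pattern, output in rules:
        if tag_pattern_to_regex(pattern).match(tag):
            return output
    return None

# Hypothetical rule table: raw container logs go to exception detection first,
# everything else goes to Elasticsearch
rules = [
    ("raw.kubernetes.**", "detect_exceptions"),
    ("**", "elasticsearch"),
]

print(route("raw.kubernetes.var.log.containers.app.log", rules))
print(route("kubernetes.var.log.containers.app.log", rules))
```

Because rules are checked in order, a catch-all `**` rule belongs at the end of the list.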

Creating the ConfigMap

[root@k8s01 efk]# vim fluentd-configmap.yaml

kind: ConfigMap
apiVersion: v1
metadata:
  name: fluentd-config
  namespace: logging
data:
  system.conf: |-
    <system>
      root_dir /tmp/fluentd-buffers/
    </system>
  containers.input.conf: |-
    <source>
      @id fluentd-containers.log
      @type tail #built-in Fluentd input; it keeps reading new log lines from the source files
      path /var/log/containers/*.log #container log path mounted from the host
      pos_file /var/log/es-containers.log.pos
      tag raw.kubernetes.* #tag assigned to these logs
      read_from_head true
      <parse> #parse each line into a structured record
        @type multi_format #use the multi-format-parser plugin
        <pattern>
          format json #JSON parser
          time_key time #field that holds the event time
          time_format %Y-%m-%dT%H:%M:%S.%NZ #time format
        </pattern>
        <pattern>
          format /^(?<time>.+) (?<stream>stdout|stderr) [^ ]* (?<log>.*)$/
          time_format %Y-%m-%dT%H:%M:%S.%N%:z
        </pattern>
      </parse>
    </source>
    #Detect exceptions in the log output and forward each one as a single log entry
    #https://github.com/GoogleCloudPlatform/fluent-plugin-detect-exceptions
    <match raw.kubernetes.**> #match logs tagged raw.kubernetes.**
      @id raw.kubernetes
      @type detect_exceptions #use the detect-exceptions plugin to handle exception stack traces
      remove_tag_prefix raw #strip the raw prefix
      message log
      stream stream
      multiline_flush_interval 5
      max_bytes 500000
      max_lines 1000
    </match>
    <filter **> # concatenate logs
      @id filter_concat
      @type concat #Fluentd filter plugin that joins multi-line logs split across several events
      key message
      multiline_end_regexp /\n$/ #a line ending in "\n" closes the concatenated event
      #multiline_start_regexp /^[\[ ]*\d{1,4}[-\/ ]+\d{1,2}[-\/ ]+\d{1,4}[ T]+\d{1,2}:\d{1,2}:\d{1,2}/ #alternative for multi-line Java errors; use one of the two
      separator ""
    </filter>
    # Add Kubernetes metadata
    <filter kubernetes.**>
      @id filter_kubernetes_metadata
      @type kubernetes_metadata
    </filter>
    # Fix up JSON fields for ES
    # Plugin: https://github.com/repeatedly/fluent-plugin-multi-format-parser
    <filter kubernetes.**>
      @id filter_parser
      @type parser #built-in parser filter; the <parse> section below uses multi-format-parser
      key_name log #name of the field in the record to parse
      reserve_data true #keep the original key-value pairs in the parsed result
      remove_key_name_field true #remove the key_name field once parsing succeeds
      <parse>
        @type multi_format
        <pattern>
          format json
        </pattern>
        <pattern>
          format none
        </pattern>
      </parse>
    </filter>
    #Drop some unneeded attributes
    <filter kubernetes.**>
      @type record_transformer
      remove_keys $.docker.container_id,$.kubernetes.container_image_id,$.kubernetes.pod_id,$.kubernetes.namespace_id,$.kubernetes.master_url,$.kubernetes.labels.pod-template-hash
    </filter>
    #Keep only logs from Pods that carry the label logging=true
    <filter kubernetes.**>
      @id filter_log
      @type grep
      <regexp>
        key $.kubernetes.labels.logging
        pattern ^true$
      </regexp>
    </filter>
  ###### Listener configuration, generally used for log aggregation ######
  forward.input.conf: |-
    # Listen for messages sent over TCP
    <source>
      @id forward
      @type forward
    </source>
  output.conf: |-
    <match **>
      @id elasticsearch
      @type elasticsearch
      @log_level info
      include_tag_key true
      host elasticsearch
      port 9200
      logstash_format true
      logstash_prefix k8s #set the index prefix to k8s
      request_timeout 30s
      <buffer>
        @type file
        path /var/log/fluentd-buffers/kubernetes.system.buffer
        flush_mode interval
        retry_type exponential_backoff
        flush_thread_count 2
        flush_interval 5s
        retry_forever
        retry_max_interval 30
        chunk_limit_size 2M
        queue_limit_length 8
        overflow_action block
      </buffer>
    </match>

[root@k8s01 efk]# kubectl apply -f fluentd-configmap.yaml

configmap/fluentd-config created
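
The two `<pattern>` entries in `containers.input.conf` cover the two line formats a node may produce: Docker's JSON lines and the plain-text lines written by CRI runtimes. The `multi_format` parser tries the patterns in order. A rough Python equivalent, with the regular expression rewritten in Python's named-group syntax; the sample lines and the name `parse_line` are made up for illustration:

```python
import json
import re

# Same shape as the second <pattern>, using Python's (?P<name>...) groups
CRI_LINE = re.compile(r"^(?P<time>.+) (?P<stream>stdout|stderr) [^ ]* (?P<log>.*)$")

def parse_line(line):
    """Try JSON first, then the CRI-style text format; return a dict or None."""
    try:
        record = json.loads(line)
        if isinstance(record, dict):
            return record
    except ValueError:
        pass  # not JSON, fall through to the text pattern
    match = CRI_LINE.match(line)
    return match.groupdict() if match else None

# Hypothetical sample lines in each format
docker_line = '{"log":"hello\\n","stream":"stdout","time":"2021-01-01T00:00:00.000000000Z"}'
cri_line = "2021-01-01T00:00:00.000000000+08:00 stdout F hello"

print(parse_line(docker_line))
print(parse_line(cri_line))
```

Either way the result carries `time`, `stream`, and `log` fields, which is what the later filters in the ConfigMap operate on.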

Creating the Fluentd DaemonSet

[root@k8s01 efk]# vim fluentd-ds.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: fluentd
  namespace: logging
  labels:
    k8s-app: fluentd
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: fluentd
  labels:
    k8s-app: fluentd
rules:
- apiGroups:
  - ""
  resources:
  - "namespaces"
  - "pods"
  verbs:
  - "get"
  - "watch"
  - "list"
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: fluentd
  labels:
    k8s-app: fluentd
subjects:
- kind: ServiceAccount
  name: fluentd
  namespace: logging
  apiGroup: ""
roleRef:
  kind: ClusterRole
  name: fluentd
  apiGroup: ""
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: fluentd
  namespace: logging
  labels:
    k8s-app: fluentd
spec:
  selector:
    matchLabels:
      k8s-app: fluentd
  template:
    metadata:
      labels:
        k8s-app: fluentd
      annotations:
        # Meant to mark fluentd as a critical Pod so that it is not evicted from the node.
        # This alpha annotation is deprecated; newer clusters use priorityClassName instead.
        scheduler.alpha.kubernetes.io/critical-pod: ''
    spec:
      serviceAccountName: fluentd
      containers:
      - name: fluentd
        image: quay.io/fluentd_elasticsearch/fluentd:v3.0.1
        imagePullPolicy: IfNotPresent
        env:
        - name: FLUENTD_ARGS
          value: --no-supervisor -q
        resources:
          limits:
            memory: 500Mi
          requests:
            cpu: 100m
            memory: 200Mi
        volumeMounts:
        - name: varlog
          mountPath: /var/log
        - name: varlibdockercontainers
          mountPath: /var/lib/docker/containers
          readOnly: true
        - name: config-volume
          mountPath: /etc/fluent/config.d
      volumes:
      - name: varlog
        hostPath:
          path: /var/log
      - name: varlibdockercontainers
        hostPath:
          path: /var/lib/docker/containers
      - name: config-volume
        configMap:
          name: fluentd-config

[root@k8s01 efk]# kubectl apply -f fluentd-ds.yaml

serviceaccount/fluentd created

clusterrole.rbac.authorization.k8s.io/fluentd created

clusterrolebinding.rbac.authorization.k8s.io/fluentd created

daemonset.apps/fluentd created

Once Fluentd has started, it can ship logs to ES. However, the configuration filters out everything except logs from Pods labeled logging=true, so no data is collected yet.
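
The effect of that `grep` filter can be sketched in Python. This is an illustration of the rule, not the plugin's implementation; the helper names and the two sample records are hypothetical.

```python
import re

def get_path(record, path):
    """Follow a dotted path such as 'kubernetes.labels.logging' through nested dicts."""
    value = record
    for key in path.split("."):
        if not isinstance(value, dict) or key not in value:
            return None
        value = value[key]
    return value

def keep(record, path="kubernetes.labels.logging", pattern=r"^true$"):
    """Keep the record only if the field exists and matches the pattern."""
    value = get_path(record, path)
    return value is not None and re.search(pattern, str(value)) is not None

# Hypothetical records as they might look after the kubernetes_metadata filter
labelled = {"log": "order created", "kubernetes": {"labels": {"app": "oms-center", "logging": "true"}}}
unlabelled = {"log": "noise", "kubernetes": {"labels": {"app": "other"}}}

print([r["log"] for r in (labelled, unlabelled) if keep(r)])
```

A record without the label is dropped, the same as a record whose label has any value other than `true`.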

Next, deploy an application to simulate log collection

[root@k8s01 efk]# vim test-deploy.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: oms-center
spec:
  replicas: 1
  selector:
    matchLabels:
      app: oms-center
  template:
    metadata:
      labels:
        app: oms-center
        logging: "true" # this label is required for the Pod's logs to be collected
    spec:
      containers:
      - name: oms-center
        image: oms-center

[root@k8s01 efk]# kubectl apply -f test-deploy.yaml

deployment.apps/oms-center created

[root@k8s01 efk]# kubectl get po

NAME READY STATUS RESTARTS AGE

oms-center-746c794bf6-n45h9 1/1 Running 0 44s

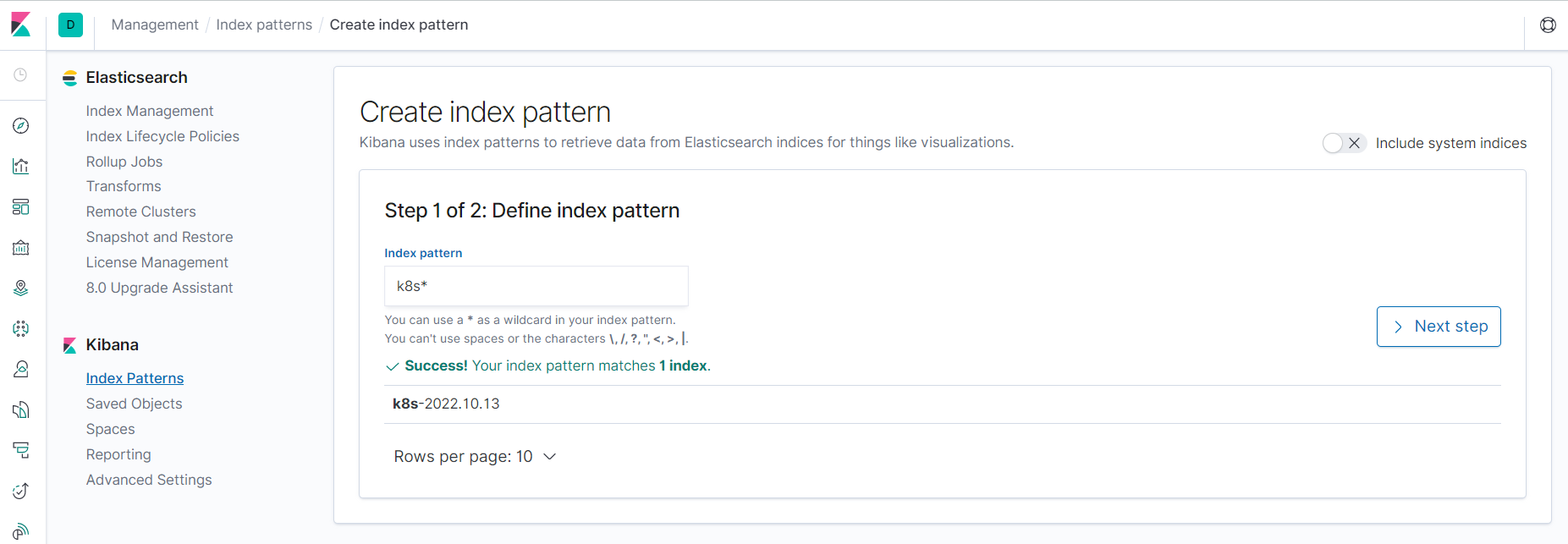

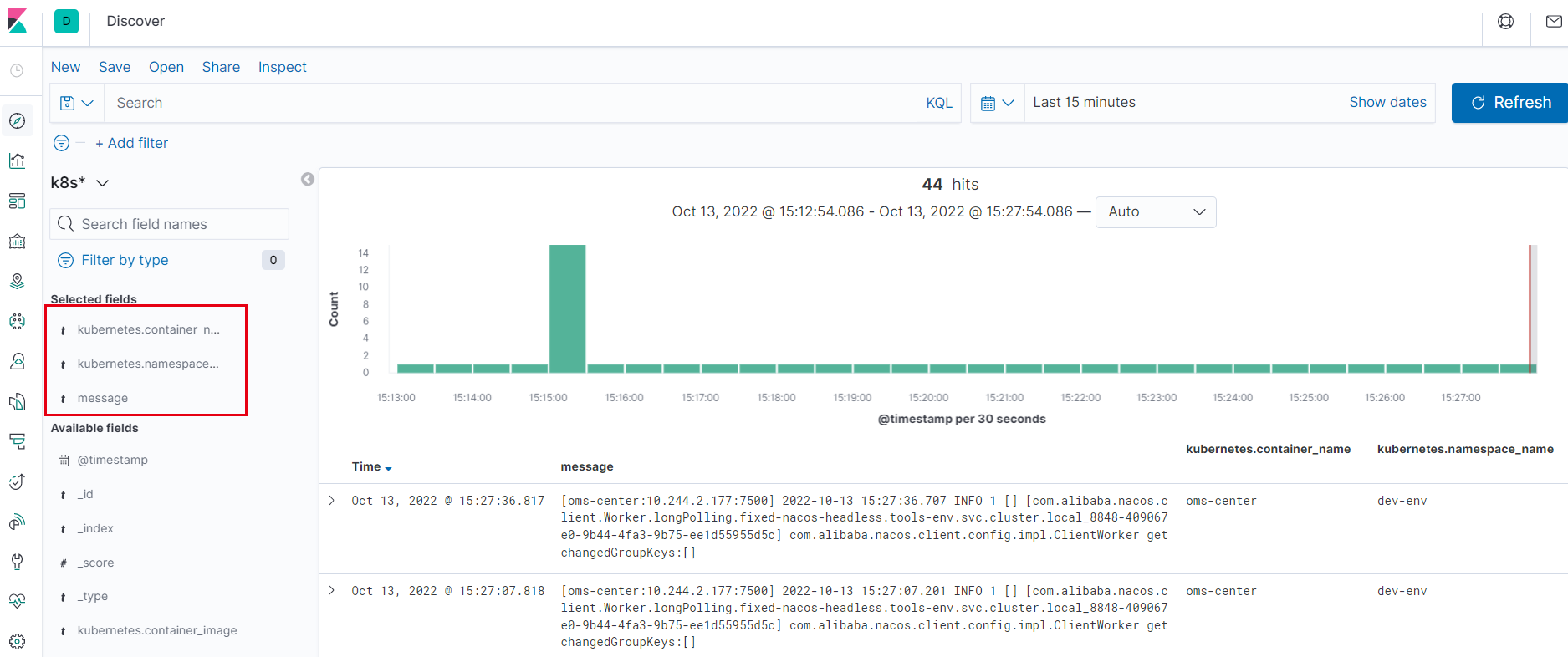

You can filter on fields to find the messages you want to see
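
With `logstash_format true` and `logstash_prefix k8s` in `output.conf`, the records land in one Elasticsearch index per day. A sketch of how such a name is formed, assuming the output plugin's default `-` separator, `%Y.%m.%d` date format, and UTC dates; `index_name` is a hypothetical helper and the timestamp is made up:

```python
from datetime import datetime, timedelta, timezone

def index_name(event_time, prefix="k8s", separator="-", date_format="%Y.%m.%d"):
    """Build the daily index name for an event, based on the event's UTC date."""
    utc_time = event_time.astimezone(timezone.utc)
    return prefix + separator + utc_time.strftime(date_format)

# An event logged at 07:30 in UTC+8 still belongs to the previous UTC day
event = datetime(2021, 1, 1, 7, 30, tzinfo=timezone(timedelta(hours=8)))
print(index_name(event))
```

Under these assumptions, an index pattern such as `k8s-*` in Kibana would cover all of the daily indices.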
