Deploying a highly available Redis cluster on k8s

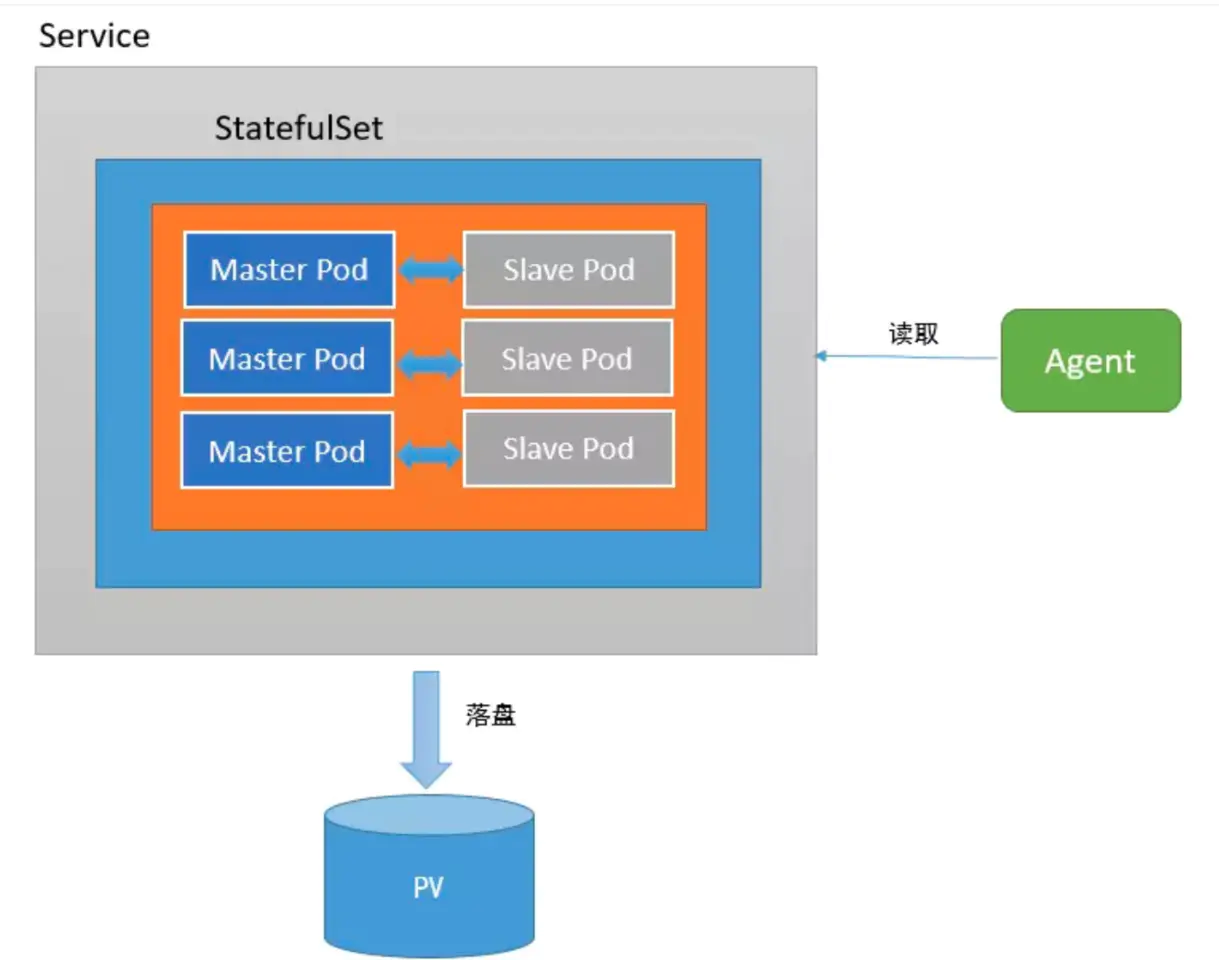

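Deploying a Redis cluster on k8s is not very different from deploying an ordinary application, but a few issues need attention:

Redis is a stateful application

When Redis runs as pods in k8s, each pod caches different data, and a pod's IP can change at any time. Deploying redis-cluster with an ordinary Deployment and Service therefore causes many problems, so a StatefulSet plus a Headless Service is used instead.

Data persistence

Although Redis is an in-memory cache, it still relies on disk to persist its data, so that cached data can be recovered when the service restarts after a failure. In the cluster, a shared file system (NFS here) plus PVs (persistent volumes) is used, so every pod in the cluster gets persistent storage from the same storage backend no matter which node it is scheduled on.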

Concepts

Headless Service

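Simply put, a Headless Service is a Service with no Cluster IP (clusterIP: None). Accordingly, in the k8s DNS a Headless Service name does not resolve to a single Cluster IP. It resolves to the list of IPs of all the Pods the Service selects.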

StatefulSet

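StatefulSet is the k8s resource designed specifically for deploying stateful applications. Broadly, it can be seen as a variant of Deployment/RC, with the following characteristics: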

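- Each Pod managed by a StatefulSet has a unique, stable identity (pod name and DNS name) generated from an ordinal number. A Deployment gives its pods random names instead (for example, if the StatefulSet is named redis, the pods are redis-0, redis-1, ...). The pod IP can still change; it is the name that stays stable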

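- Pods in a StatefulSet are started and stopped in a strictly controlled order. With the default OrderedReady policy, a pod is only created after all pods with lower ordinals are Running and Ready, and pods are removed in reverse order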

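- Pods in a StatefulSet use stable persistent storage, and the corresponding PVs are not destroyed when a Pod is deleted

Note also that a StatefulSet must be used together with a Headless Service. The StatefulSet adds one more level on top of the DNS records the Headless Service provides, producing a DNS name for each individual pod in the following format: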

$(podname).$(headless service name)

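With this mapping, domain names can be used instead of IPs when configuring the cluster, which is what makes a cluster of stateful applications manageable. The short form above resolves from within the same namespace. The fully qualified name is $(podname).$(headless service name).$(namespace).svc.cluster.local (with the default cluster domain), e.g. redis-0.redis-svc.tool-env.svc.cluster.local, which is the form used with dig later on.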
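As a small illustration of that naming rule, the bash sketch below builds the fully qualified name of every pod. It assumes the names used in this article (StatefulSet redis, Headless Service redis-svc, namespace tool-env, 6 replicas) and the default cluster domain cluster.local. The function name pod_fqdn is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: build the DNS name of one StatefulSet pod.
# Assumes the default cluster domain "cluster.local".
pod_fqdn() {
  local sts=$1 ordinal=$2 svc=$3 ns=$4
  echo "${sts}-${ordinal}.${svc}.${ns}.svc.cluster.local"
}

# Print the names of the 6 pods used in this article (redis-0 ... redis-5).
for i in {0..5}; do
  pod_fqdn redis "$i" redis-svc tool-env
done
```

Inside the cluster, running dig +short on any of these names returns that pod's current IP, which the initialization commands later rely on.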

Cluster architecture

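The configuration steps are roughly as follows:

- Configure the shared file system (NFS)

- Create the PVs and PVCs

- Create the ConfigMap

- Create the Headless Service

- Create the StatefulSet

- Initialize the Redis cluster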
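The Kubernetes side of these steps can be scripted roughly as shown below. This is only a sketch, and it makes several assumptions:

- It uses the file names that appear in the next sections (redis.conf, redis-headless.yaml, redis.yaml).
- NFS and a dynamic provisioner serving the managed-nfs-storage StorageClass are already in place. The StatefulSet's volumeClaimTemplates suggest this, and it means the PVCs and their PVs are created automatically.
- The function names run and deploy_redis are made up for illustration. Setting DRY_RUN=1 only prints the commands.

Cluster initialization remains a separate step.

```bash
#!/usr/bin/env bash
NS=tool-env

# Print the command instead of running it when DRY_RUN=1.
run() {
  if [[ ${DRY_RUN:-0} == 1 ]]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

deploy_redis() {
  run kubectl create configmap redis-conf --from-file=redis.conf -n "$NS"
  run kubectl apply -f redis-headless.yaml
  run kubectl apply -f redis.yaml
  # Wait until every replica of the StatefulSet reports Ready.
  run kubectl rollout status statefulset/redis -n "$NS" --timeout=600s
}
```

Running DRY_RUN=1 deploy_redis lists the four commands for review. Plain deploy_redis executes them and returns once all 6 pods are Ready.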

Deploying the Redis cluster

Create the ConfigMap

[root@k8s01 redis-cluster]# cat redis.conf

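# Enable Redis AOF persistence
appendonly yes
# Enable cluster mode
cluster-enabled yes
# Cluster state file (node IDs, peers, slot assignments); Redis creates and updates it itself,
# and keeping it on the persistent volume lets a node keep its identity across restarts
cluster-config-file /var/lib/redis/nodes.conf
# Node timeout, in milliseconds
cluster-node-timeout 5000
# Working directory, where the AOF file is stored
dir /var/lib/redis
# Listening port
port 6379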

[root@k8s01 redis-cluster]# kubectl create cm redis-conf --from-file=redis.conf -n tool-env

configmap/redis-conf created
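Redis only treats lines that begin with # as comments in redis.conf. A # placed after a directive's value is read as an extra argument, and redis-server then fails to load the file. A rough check such as the one below can catch this before the ConfigMap is created.

The function name find_inline_comments is hypothetical. The regular expression is a heuristic, so a quoted value containing " #" would be reported too.

```bash
#!/usr/bin/env bash
# Print (with line numbers) redis.conf lines that have a "#" after a directive.
# Heuristic: a line starting with a non-# character that contains whitespace followed by "#".
find_inline_comments() {
  grep -nE '^[[:space:]]*[^#[:space:]].*[[:space:]]#' "$1"
}

# Example: find_inline_comments redis.conf || echo "no inline comments found"
```

grep exits with a non-zero status when nothing matches, so the function can also be used in a condition.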

Create the Headless Service

[root@k8s01 redis-cluster]# cat redis-headless.yaml

apiVersion: v1
kind: Service
metadata:
  name: redis-svc
  namespace: tool-env
  labels:
    app: redis
spec:
  ports:
  - name: redis-port
    port: 6379
  clusterIP: None
  selector:
    app: redis
    appCluster: redis-cluster

[root@k8s01 redis-cluster]# kubectl apply -f redis-headless.yaml

service/redis-svc created

Create the StatefulSet

[root@k8s01 redis-cluster]# cat redis.yaml

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: redis
  namespace: tool-env
spec:
  serviceName: "redis-svc"
  replicas: 6
  selector:
    matchLabels:
      app: redis
  template:
    metadata:
      labels:
        app: redis
        appCluster: redis-cluster
    spec:
      terminationGracePeriodSeconds: 20
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 100
            podAffinityTerm:
              labelSelector:
                matchExpressions:
                - key: app
                  operator: In
                  values:
                  - redis
              topologyKey: kubernetes.io/hostname
      containers:
      - name: redis
        image: redis:5.0
        command:
        - "redis-server"            # redis start command
        args:
        - "/etc/redis/redis.conf"   # arguments passed to redis-server; each list item is one argument
        - "--protected-mode"        # turn protected mode off to allow connections from other hosts
        - "no"
        # i.e. the container runs: redis-server /etc/redis/redis.conf --protected-mode no
        resources:
          requests:
            cpu: "100m"             # m means thousandths: 100m = 0.1 CPU
            memory: "100Mi"         # 100 MiB of memory
        ports:
        - name: redis
          containerPort: 6379
          protocol: "TCP"
        - name: cluster
          containerPort: 16379
          protocol: "TCP"
        volumeMounts:
        - name: "redis-conf"        # mount the file generated from the ConfigMap
          mountPath: "/etc/redis"   # directory the file is mounted into
        - name: "redis-data"        # mount the persistent volume
          mountPath: "/var/lib/redis"
      volumes:
      - name: "redis-conf"          # reference the ConfigMap as a volume
        configMap:
          name: "redis-conf"
          items:
          - key: "redis.conf"       # key in the ConfigMap (the file given to --from-file)
            path: "redis.conf"      # file name inside mountPath
  volumeClaimTemplates:
  - metadata:
      name: redis-data
      labels:
        app: redis
    spec:
      accessModes: [ "ReadWriteOnce" ]
      storageClassName: managed-nfs-storage
      resources:
        requests:
          storage: 200M

[root@k8s01 redis-cluster]# kubectl apply -f redis.yaml

statefulset/redis created

[root@k8s01 redis-cluster]# kubectl get -n tool-env po|grep redis

redis-0 1/1 Running 0 21h

redis-1 1/1 Running 0 21h

redis-2 1/1 Running 0 21h

redis-3 1/1 Running 0 9h

redis-4 1/1 Running 0 9h

redis-5 1/1 Running 0 9h

Initialize the Redis cluster

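Once the StatefulSet has been created, the 6 pods are up, but the Redis cluster itself has not been initialized yet. This is done with the official redis-trib tool. It could be run on any one of the Redis nodes, but that is not ideal: we want each node's responsibilities to stay as narrow as possible, so it is better to start a separate pod to run the cluster management tool.

A word on redis-trib: it is the official redis-cluster management tool and can create and update a Redis cluster. In early Redis versions it shipped in the source tarball as the Ruby script redis-trib.rb (a Python version can also be installed with pip, but it failed when I ran it). In current versions (5.0.3 is used here) it has been merged into redis-cli. To initialize the cluster, first create a CentOS pod in k8s to act as the management node: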

[root@k8s01 redis-cluster]# kubectl run -i -n tool-env --tty redis-cluster-manager --image=centos:7 --restart=Never /bin/bash

If you don't see a command prompt, try pressing enter.

#Install basic tools: wget, bind-utils (provides dig and nslookup) and a compiler toolchain to build Redis

[root@redis-cluster-manager /]# yum install bind-utils wget make gcc gcc-c++ glibc -y

[root@redis-cluster-manager /]# wget http://download.redis.io/releases/redis-5.0.3.tar.gz

[root@redis-cluster-manager /]# tar xf redis-5.0.3.tar.gz

[root@redis-cluster-manager /]# cd redis-5.0.3

[root@redis-cluster-manager redis-5.0.3]# make

#After compiling, redis-cli is placed in the src directory; move it into /usr/local/bin for convenience

[root@redis-cluster-manager redis-5.0.3]# mv src/redis-cli /usr/local/bin/

Next, get the IPs of the 6 pods that were created. They can be looked up with nslookup using the StatefulSet DNS naming rule. For example, to find the IP of the pod redis-0, run:

[root@redis-cluster-manager ~]# nslookup redis-0.redis-svc

Server: 10.96.0.10

Address: 10.96.0.10#53

Name: redis-0.redis-svc.tool-env.svc.cluster.local

Address: 10.244.1.52

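10.244.1.52 is the corresponding IP. In this deployment, pods 0, 1 and 2 are the masters and pods 3, 4 and 5 are the slaves. First run the following command to initialize the cluster's master nodes: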

[root@redis-cluster-manager ~]# redis-cli --cluster create `dig +short redis-0.redis-svc.tool-env.svc.cluster.local`:6379 `dig +short redis-1.redis-svc.tool-env.svc.cluster.local`:6379 `dig +short redis-2.redis-svc.tool-env.svc.cluster.local`:6379

>>> Performing hash slots allocation on 3 nodes...

Master[0] -> Slots 0 - 5460

Master[1] -> Slots 5461 - 10922

Master[2] -> Slots 10923 - 16383

M: 9589b94f76e3727cae09be37b68609ca61a6a8ee 10.244.1.52:6379

slots:[0-5460] (5461 slots) master

M: 9e95a53741ab1a18e50b3554e27837513c0f0e2a 10.244.0.26:6379

slots:[5461-10922] (5462 slots) master

M: 279989019bd0c57140656c82d0ce90d601e37b42 10.244.2.31:6379

slots:[10923-16383] (5461 slots) master

Can I set the above configuration? (type 'yes' to accept): yes

>>> Nodes configuration updated

>>> Assign a different config epoch to each node

>>> Sending CLUSTER MEET messages to join the cluster

Waiting for the cluster to join

..

>>> Performing Cluster Check (using node 10.244.1.52:6379)

M: 9589b94f76e3727cae09be37b68609ca61a6a8ee 10.244.1.52:6379

slots:[0-5460] (5461 slots) master

M: 279989019bd0c57140656c82d0ce90d601e37b42 10.244.2.31:6379

slots:[10923-16383] (5461 slots) master

M: 9e95a53741ab1a18e50b3554e27837513c0f0e2a 10.244.0.26:6379

slots:[5461-10922] (5462 slots) master

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.
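The "Performing hash slots allocation" step spreads the 16384 hash slots as evenly as possible across the masters (redis-cli requires at least 3 masters). The bash sketch below reproduces the split printed above under one assumption: each range ends at the nearest integer to (i+1)·16384/N - 1, and the last master ends at slot 16383. This rule is inferred from the output rather than taken from redis-cli's source, and split_slots is a hypothetical name.

```bash
#!/usr/bin/env bash
# Split the 16384 Redis Cluster hash slots evenly across N masters.
split_slots() {
  local masters=$1 total=16384 first=0 last i
  for ((i = 0; i < masters; i++)); do
    # floor(x + 0.5) for x = (i+1)*total/masters - 1, in integer arithmetic
    last=$(( ((i + 1) * 2 * total - masters) / (2 * masters) ))
    if (( i == masters - 1 )); then
      last=$(( total - 1 ))   # the last master takes everything up to 16383
    fi
    echo "Master[$i] -> Slots $first - $last"
    first=$(( last + 1 ))
  done
}

split_slots 3
```

For 3 masters this prints the same three ranges as redis-cli above (5461, 5462 and 5461 slots).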

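The command dig +short redis-0.redis-svc.tool-env.svc.cluster.local converts the pod's domain name into its IP. This is needed because redis-trib (now redis-cli --cluster) does not accept domain names when creating a cluster.

Next, attach a slave to each master. The value for --cluster-master-id is the node ID printed in the previous step (the 40-character hex string after M:). The second address passed to add-node can be any node that is already in the cluster.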

[root@centos ~]# redis-cli --cluster add-node `dig +short redis-3.redis-svc.tool-env.svc.cluster.local`:6379 `dig +short redis-0.redis-svc.tool-env.svc.cluster.local`:6379 --cluster-slave --cluster-master-id 9589b94f76e3727cae09be37b68609ca61a6a8ee

>>> Adding node 10.244.1.57:6379 to cluster 10.244.1.52:6379

>>> Performing Cluster Check (using node 10.244.1.52:6379)

M: 9589b94f76e3727cae09be37b68609ca61a6a8ee 10.244.1.52:6379

slots:[0-5460] (5461 slots) master

M: 279989019bd0c57140656c82d0ce90d601e37b42 10.244.2.31:6379

slots:[10923-16383] (5461 slots) master

M: 9e95a53741ab1a18e50b3554e27837513c0f0e2a 10.244.0.26:6379

slots:[5461-10922] (5462 slots) master

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

>>> Send CLUSTER MEET to node 10.244.1.57:6379 to make it join the cluster.

Waiting for the cluster to join

>>> Configure node as replica of 10.244.1.52:6379.

[OK] New node added correctly.

[root@centos ~]# redis-cli --cluster add-node `dig +short redis-4.redis-svc.tool-env.svc.cluster.local`:6379 `dig +short redis-1.redis-svc.tool-env.svc.cluster.local`:6379 --cluster-slave --cluster-master-id 279989019bd0c57140656c82d0ce90d601e37b42

>>> Adding node 10.244.0.27:6379 to cluster 10.244.0.26:6379

>>> Performing Cluster Check (using node 10.244.0.26:6379)

M: 9e95a53741ab1a18e50b3554e27837513c0f0e2a 10.244.0.26:6379

slots:[5461-10922] (5462 slots) master

S: 42234b7eb49ac16dd72aa30489a57efac8409edd 10.244.1.57:6379

slots: (0 slots) slave

replicates 9589b94f76e3727cae09be37b68609ca61a6a8ee

M: 9589b94f76e3727cae09be37b68609ca61a6a8ee 10.244.1.52:6379

slots:[0-5460] (5461 slots) master

1 additional replica(s)

M: 279989019bd0c57140656c82d0ce90d601e37b42 10.244.2.31:6379

slots:[10923-16383] (5461 slots) master

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

>>> Send CLUSTER MEET to node 10.244.0.27:6379 to make it join the cluster.

Waiting for the cluster to join

>>> Configure node as replica of 10.244.2.31:6379.

[OK] New node added correctly.

[root@centos ~]# redis-cli --cluster add-node `dig +short redis-5.redis-svc.tool-env.svc.cluster.local`:6379 `dig +short redis-2.redis-svc.tool-env.svc.cluster.local`:6379 --cluster-slave --cluster-master-id 9e95a53741ab1a18e50b3554e27837513c0f0e2a

>>> Adding node 10.244.2.32:6379 to cluster 10.244.2.31:6379

>>> Performing Cluster Check (using node 10.244.2.31:6379)

M: 279989019bd0c57140656c82d0ce90d601e37b42 10.244.2.31:6379

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

M: 9589b94f76e3727cae09be37b68609ca61a6a8ee 10.244.1.52:6379

slots:[0-5460] (5461 slots) master

1 additional replica(s)

M: 9e95a53741ab1a18e50b3554e27837513c0f0e2a 10.244.0.26:6379

slots:[5461-10922] (5462 slots) master

S: 42234b7eb49ac16dd72aa30489a57efac8409edd 10.244.1.57:6379

slots: (0 slots) slave

replicates 9589b94f76e3727cae09be37b68609ca61a6a8ee

S: 801f08939958d8fc23c4f4097219bd2a428b0ff9 10.244.0.27:6379

slots: (0 slots) slave

replicates 279989019bd0c57140656c82d0ce90d601e37b42

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

>>> Send CLUSTER MEET to node 10.244.2.32:6379 to make it join the cluster.

Waiting for the cluster to join

>>> Configure node as replica of 10.244.0.26:6379.

[OK] New node added correctly.
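The three add-node calls above can also be scripted. The sketch below is hypothetical (the function names pod_ip and attach_replicas are made up) and assumes it runs in the manager pod, where dig and redis-cli are available. It looks up each master's node ID with CLUSTER MYID instead of copying it from the create output.

The sketch pairs redis-3 with redis-0, redis-4 with redis-1 and redis-5 with redis-2. In the transcript above, the IDs passed instead made redis-4 a replica of redis-2 (10.244.2.31) and redis-5 a replica of redis-1 (10.244.0.26). Either layout gives each master one replica.

```bash
#!/usr/bin/env bash
# Hypothetical helper: make redis-(i+3) a replica of redis-i for i = 0, 1, 2.
NS=tool-env
SVC=redis-svc

# Resolve a StatefulSet pod ordinal to its current IP.
pod_ip() {
  dig +short "redis-$1.${SVC}.${NS}.svc.cluster.local" | head -n 1
}

attach_replicas() {
  local i master_ip replica_ip master_id
  for i in 0 1 2; do
    master_ip=$(pod_ip "$i")
    replica_ip=$(pod_ip "$((i + 3))")
    # CLUSTER MYID prints the node ID expected by --cluster-master-id.
    master_id=$(redis-cli -h "$master_ip" -p 6379 cluster myid)
    redis-cli --cluster add-node "${replica_ip}:6379" "${master_ip}:6379" \
      --cluster-slave --cluster-master-id "$master_id"
  done
}
```

Load the functions in the manager pod, then call attach_replicas once the three masters have been created.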

After initialization, connect to any node and check the cluster information:

[root@redis-cluster-manager ~]# redis-cli -h 10.244.1.52 -c

10.244.1.52:6379> cluster info

cluster_state:ok

cluster_slots_assigned:16384

cluster_slots_ok:16384

cluster_slots_pfail:0

cluster_slots_fail:0

cluster_known_nodes:6

cluster_size:3

cluster_current_epoch:3

cluster_my_epoch:1

cluster_stats_messages_ping_sent:63627

cluster_stats_messages_pong_sent:63556

cluster_stats_messages_sent:127183

cluster_stats_messages_ping_received:63551

cluster_stats_messages_pong_received:63627

cluster_stats_messages_meet_received:5

cluster_stats_messages_received:127183

10.244.1.52:6379> cluster nodes

3368319dd4aa99fd867301f61e74c595fc7eaab0 10.244.2.32:6379@16379 slave 9e95a53741ab1a18e50b3554e27837513c0f0e2a 0 1596373134582 2 connected

42234b7eb49ac16dd72aa30489a57efac8409edd 10.244.1.57:6379@16379 slave 9589b94f76e3727cae09be37b68609ca61a6a8ee 0 1596373134000 1 connected

801f08939958d8fc23c4f4097219bd2a428b0ff9 10.244.0.27:6379@16379 slave 279989019bd0c57140656c82d0ce90d601e37b42 0 1596373133580 3 connected

9589b94f76e3727cae09be37b68609ca61a6a8ee 10.244.1.52:6379@16379 myself,master - 0 1596373132000 1 connected 0-5460

279989019bd0c57140656c82d0ce90d601e37b42 10.244.2.31:6379@16379 master - 0 1596373133580 3 connected 10923-16383

9e95a53741ab1a18e50b3554e27837513c0f0e2a 10.244.0.26:6379@16379 master - 0 1596373134782 2 connected 5461-10922
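The two checks above can be scripted, for example as a post-deployment test. The sketch below is hypothetical (both function names are made up):

- cluster_state_ok reads CLUSTER INFO output and succeeds only if it contains cluster_state:ok. It first strips any \r line endings from the reply.
- replica_map reads CLUSTER NODES output and prints each replica's address next to its master's, which shows at a glance which pairs ended up together.

```bash
#!/usr/bin/env bash
# Succeeds only if CLUSTER INFO output on stdin reports cluster_state:ok.
cluster_state_ok() {
  tr -d '\r' | grep -qx 'cluster_state:ok'
}

# Reads CLUSTER NODES output on stdin and prints "replica -> master" addresses.
replica_map() {
  awk '
    { addr[$1] = $2; sub(/@.*/, "", addr[$1]) }          # node ID -> ip:port, bus port dropped
    $3 ~ /slave/ { master_of[$1] = $4; order[++n] = $1 } # field 4 of a replica is its master ID
    END { for (i = 1; i <= n; i++) print addr[order[i]] " -> " addr[master_of[order[i]]] }
  '
}

# Usage inside the manager pod, e.g.:
#   redis-cli -h 10.244.1.52 cluster info  | cluster_state_ok && echo "cluster ok"
#   redis-cli -h 10.244.1.52 cluster nodes | replica_map
```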

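The cluster is now initialized, so let's try it out from one node. Note that in cluster mode redis-cli must be started with the -c option to reach data stored on other nodes: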

10.244.1.52:6379> set a 'bbb'

-> Redirected to slot [15495] located at 10.244.2.31:6379

OK

10.244.2.31:6379> exit

[root@redis-cluster-manager ~]# redis-cli -h 10.244.0.27 -c

10.244.0.27:6379> get a

-> Redirected to slot [15495] located at 10.244.2.31:6379

"bbb"
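The slot in the redirection above comes from Redis Cluster's key hashing. The slot is CRC16(key) mod 16384, where CRC16 is the XMODEM variant (polynomial 0x1021, initial value 0). If the key contains a non-empty {...} hash tag, only the text inside the first such tag is hashed.

The bash sketch below illustrates that rule for ASCII keys only; crc16 and key_slot are hypothetical names. For the key a it gives 15495, matching the redirect shown, and on a live node CLUSTER KEYSLOT a returns the same number.

```bash
#!/usr/bin/env bash
# CRC16-XMODEM (polynomial 0x1021, initial value 0) of an ASCII string.
crc16() {
  local s=$1 crc=0 i j byte
  for ((i = 0; i < ${#s}; i++)); do
    printf -v byte '%d' "'${s:i:1}"   # character code (ASCII assumed)
    crc=$(( crc ^ (byte << 8) ))
    for ((j = 0; j < 8; j++)); do
      if (( crc & 0x8000 )); then
        crc=$(( ((crc << 1) ^ 0x1021) & 0xFFFF ))
      else
        crc=$(( (crc << 1) & 0xFFFF ))
      fi
    done
  done
  echo "$crc"
}

# Hash slot of a key: only the first non-empty {...} tag is hashed, if present.
key_slot() {
  local key=$1 hashed=$1 rest
  if [[ $key == *"{"* ]]; then
    rest=${key#*\{}                     # text after the first "{"
    if [[ $rest == *"}"* && -n ${rest%%\}*} ]]; then
      hashed=${rest%%\}*}               # text up to the next "}"
    fi
  fi
  echo $(( $(crc16 "$hashed") % 16384 ))
}

key_slot a   # prints 15495, the slot in the redirect above
```

Because of the hash-tag rule, keys such as {user1000}.following and {user1000}.followers land in the same slot, and therefore on the same master.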

Create a Service

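Any node in the Redis cluster can now be used directly. To make the cluster accessible to other services, however, one more Service is needed for service discovery and load balancing (note that this Service is not the same thing as the Headless Service created earlier). Keep in mind that a cluster-mode client connecting through this Service is still redirected to individual pod IPs, as in the "Redirected to slot" messages above, so those IPs must be reachable from the client.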

[root@k8s01 redis-cluster]# cat redis-svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: redis-client
  namespace: tool-env
  labels:
    app: redis
spec:
  ports:
  - name: redis-port
    protocol: "TCP"
    port: 6379
    targetPort: 6379
    nodePort: 30639
  selector:
    app: redis
    appCluster: redis-cluster
  type: NodePort

[root@k8s01 redis-cluster]# kubectl apply -f redis-svc.yaml

service/redis-client created
