Deploying SkyWalking 8.9.0 on k8s

System environment

k8s version: 1.20.11

SkyWalking version: 8.9.0

Elasticsearch version: 7.6.2

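I previously deployed version 6.1.0. SkyWalking has since moved on to 9.x and quite a lot has changed; see the official website for details, as this article does not go into them.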

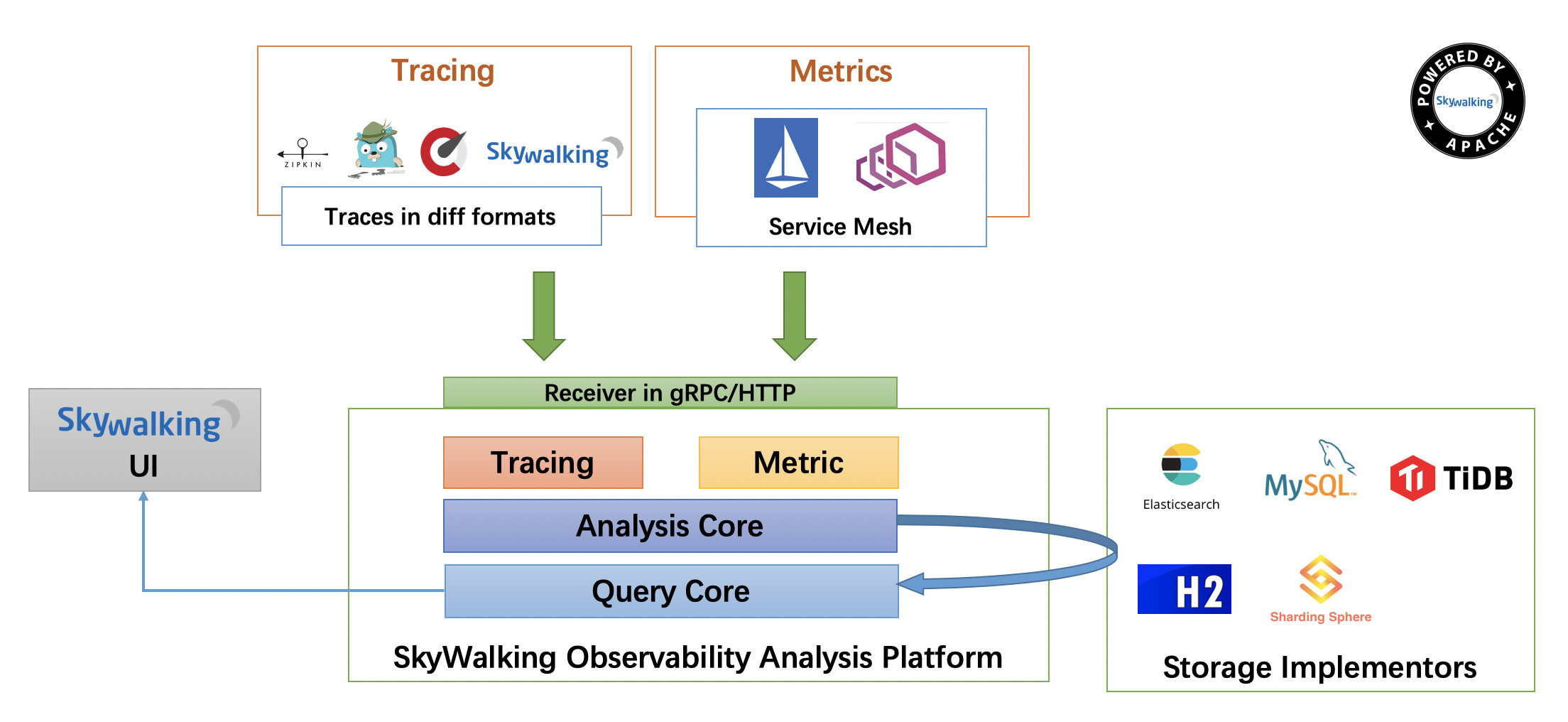

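Architecture

The architecture is split into four parts: top, bottom, left and right.

Top (Agent): collects trace information from applications and sends it to the SkyWalking OAP server. Tracing data from SkyWalking, Zipkin, Jaeger and others is supported. Here we use the SkyWalking Agent to collect SkyWalking tracing data and send it to the server.

Bottom (SkyWalking OAP): receives the tracing data sent by the agents, analyzes it (Analysis Core), writes it to external storage (Storage), and serves queries (Query).

Right (Storage): stores the tracing data. ES, MySQL, ShardingSphere, TiDB, H2 and other backends are supported. We use ES, mainly because the SkyWalking team itself runs ES in production.

Left (SkyWalking UI): provides the web console for browsing traces and other data.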
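To make the data flow concrete, here is a small Python model of the four parts and the ports that the manifests later in this article use (gRPC 11800 and REST 12800 on the OAP Service, 9200 on Elasticsearch). The `CONNECTIONS` table and `path_from` helper are hypothetical illustrations, not anything SkyWalking itself defines.

```python
from typing import Dict, List, Tuple

# Hypothetical model of the data flow; ports match this article's manifests.
CONNECTIONS: Dict[str, Tuple[str, int, str]] = {
    # source: (destination, port, protocol)
    "agent": ("oap", 11800, "gRPC"),          # agent reports trace data
    "ui": ("oap", 12800, "HTTP (GraphQL)"),   # UI queries the OAP
    "oap": ("storage", 9200, "HTTP"),         # OAP reads/writes Elasticsearch
}


def path_from(component: str) -> List[str]:
    """Follow the connections from `component` until a node with no outgoing link."""
    hops = [component]
    while hops[-1] in CONNECTIONS:
        hops.append(CONNECTIONS[hops[-1]][0])
    return hops


if __name__ == "__main__":
    for src in ("agent", "ui"):
        print(" -> ".join(path_from(src)))
    # agent -> oap -> storage
    # ui -> oap -> storage
```

Both the write path (agent) and the read path (UI) go through the OAP; neither talks to storage directly.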

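Deploy SkyWalking

By default SkyWalking persists trace data to H2, so the data is lost when the OAP restarts. This article persists it to ES instead; in production, choose the storage that fits your environment.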
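Switching the OAP from H2 to Elasticsearch comes down to a couple of environment variables, which the Job and the OAP Deployment in this article both set. A minimal sketch that builds those entries as Kubernetes `name`/`value` pairs; `storage_env` is a hypothetical helper, while `SW_STORAGE` and `SW_STORAGE_ES_CLUSTER_NODES` are the variable names used in this article's manifests.

```python
from typing import Dict, List


def storage_env(backend: str, es_nodes: str = "elasticsearch:9200") -> List[Dict[str, str]]:
    """Build OAP storage env entries (hypothetical helper).

    "h2" keeps the default in-memory database (data lost on restart);
    "elasticsearch" points the OAP at an ES cluster given as host:port.
    """
    if backend == "h2":
        return [{"name": "SW_STORAGE", "value": "h2"}]
    if backend == "elasticsearch":
        return [
            {"name": "SW_STORAGE", "value": "elasticsearch"},
            {"name": "SW_STORAGE_ES_CLUSTER_NODES", "value": es_nodes},
        ]
    raise ValueError(f"unsupported storage backend: {backend}")


if __name__ == "__main__":
    # Print the entries in the same shape as the manifests' env lists
    for entry in storage_env("elasticsearch"):
        print(f"- name: {entry['name']}\n  value: \"{entry['value']}\"")
```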

Deploy ES

[root@k8s01 skywalking]# cat es-statefulset.yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: es
  namespace: skywalking
spec:
  serviceName: elasticsearch
  replicas: 3
  selector:
    matchLabels:
      app: elasticsearch
  template:
    metadata:
      labels:
        app: elasticsearch
    spec:
      imagePullSecrets:
      - name: harborsecret
      initContainers:
      - name: increase-vm-max-map
        image: busybox:latest
        command: ["sysctl", "-w", "vm.max_map_count=262144"]
        securityContext:
          privileged: true
      # Note: this ulimit only applies to the init container's own shell;
      # it does not carry over to the elasticsearch container
      - name: increase-fd-ulimit
        image: busybox:latest
        command: ["sh", "-c", "ulimit -n 65536"]
        securityContext:
          privileged: true
      containers:
      - name: elasticsearch
        image: docker.elastic.co/elasticsearch/elasticsearch:7.6.2
        ports:
        - name: rest
          containerPort: 9200
        - name: inter
          containerPort: 9300
        resources:
          limits:
            cpu: 1000m
          requests:
            cpu: 1000m
        volumeMounts:
        - name: data
          mountPath: /usr/share/elasticsearch/data
        env:
        - name: cluster.name
          value: k8s-logs
        - name: node.name
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: cluster.initial_master_nodes
          value: "es-0,es-1,es-2"
        # Legacy Zen setting: Elasticsearch 7.x ignores it and manages the master quorum itself
        - name: discovery.zen.minimum_master_nodes
          value: "2"
        - name: discovery.seed_hosts
          value: "elasticsearch"
        - name: ES_JAVA_OPTS
          value: "-Xms512m -Xmx512m"
        - name: network.host
          value: "0.0.0.0"
  volumeClaimTemplates:
  - metadata:
      name: data
      labels:
        app: elasticsearch
    spec:
      accessModes: [ "ReadWriteOnce" ]
      storageClassName: managed-nfs-storage
      resources:
        requests:
          storage: 100Gi
---
kind: Service
apiVersion: v1
metadata:
  name: elasticsearch
  namespace: skywalking
  labels:
    app: elasticsearch
spec:
  selector:
    app: elasticsearch
  clusterIP: None
  ports:
  - port: 9200
    name: rest
  - port: 9300
    name: inter-node
---
kind: Service
apiVersion: v1
metadata:
  name: elasticsearch-client
  namespace: skywalking
  labels:
    app: elasticsearch
spec:
  selector:
    app: elasticsearch
  ports:
  - port: 9200
    name: rest
  - port: 9300
    name: inter-node
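The manifest relies on StatefulSet naming: pods are named `<statefulset>-<ordinal>`, which is why `cluster.initial_master_nodes` can list `es-0,es-1,es-2`, and the headless Service `elasticsearch` (matching `serviceName`) gives each pod a stable DNS name. A small sketch that derives these values from the replica count; the helper names are hypothetical and it assumes the default cluster domain `cluster.local`. The majority it computes is what `discovery.zen.minimum_master_nodes: "2"` expresses for three nodes, although Elasticsearch 7.x ignores that legacy setting.

```python
from typing import List


def es_pod_names(statefulset: str, replicas: int) -> List[str]:
    # StatefulSet pods are named <name>-0 ... <name>-(replicas-1)
    return [f"{statefulset}-{i}" for i in range(replicas)]


def es_pod_fqdns(statefulset: str, service: str, namespace: str, replicas: int,
                 cluster_domain: str = "cluster.local") -> List[str]:
    # Per-pod DNS records published through the headless Service (clusterIP: None)
    return [f"{pod}.{service}.{namespace}.svc.{cluster_domain}"
            for pod in es_pod_names(statefulset, replicas)]


def master_quorum(master_eligible: int) -> int:
    # Strict majority of master-eligible nodes: floor(n / 2) + 1
    return master_eligible // 2 + 1


if __name__ == "__main__":
    print(",".join(es_pod_names("es", 3)))  # es-0,es-1,es-2
    print(es_pod_fqdns("es", "elasticsearch", "skywalking", 3)[0])
    # es-0.elasticsearch.skywalking.svc.cluster.local
    print(master_quorum(3))  # 2
```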

[root@k8s01 skywalking]# kubectl apply -f es-statefulset.yaml

Deploy RBAC

[root@k8s01 skywalking]# cat rbac.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    app: skywalking
  name: skywalking-oap
  namespace: skywalking
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: skywalking
  namespace: skywalking
  labels:
    app: skywalking
rules:
- apiGroups: [""]
  resources: ["pods", "endpoints", "services", "nodes"]
  verbs: ["get", "watch", "list"]
- apiGroups: ["extensions"]
  resources: ["deployments", "replicasets"]
  verbs: ["get", "watch", "list"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: skywalking
  namespace: skywalking
  labels:
    app: skywalking
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: skywalking
subjects:
- kind: ServiceAccount
  name: skywalking-oap
  namespace: skywalking
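The ClusterRole grants read-only verbs; the OAP's Kubernetes cluster coordinator needs, at minimum, to list the OAP pods. A rough sketch of checking whether a set of RBAC rules grants a verb on a resource in an API group, using the rules from this manifest. It is a simplification (no `resourceNames`, subresources or non-resource URLs), not the API server's real authorizer. The last check also shows that a rule for the `extensions` group does not cover Deployments, which Kubernetes 1.16 and later serve only from the `apps` group.

```python
from typing import Dict, List

Rule = Dict[str, List[str]]

# Rules copied from the ClusterRole above
RULES: List[Rule] = [
    {"apiGroups": [""], "resources": ["pods", "endpoints", "services", "nodes"],
     "verbs": ["get", "watch", "list"]},
    {"apiGroups": ["extensions"], "resources": ["deployments", "replicasets"],
     "verbs": ["get", "watch", "list"]},
]


def _matches(values: List[str], wanted: str) -> bool:
    # "*" acts as a wildcard, as in real RBAC rules
    return "*" in values or wanted in values


def allowed(rules: List[Rule], api_group: str, resource: str, verb: str) -> bool:
    """True if any rule grants `verb` on `resource` in `api_group` (simplified)."""
    return any(
        _matches(r["apiGroups"], api_group)
        and _matches(r["resources"], resource)
        and _matches(r["verbs"], verb)
        for r in rules
    )


if __name__ == "__main__":
    print(allowed(RULES, "", "pods", "list"))             # True  (core API group)
    print(allowed(RULES, "", "pods", "delete"))           # False (read-only role)
    print(allowed(RULES, "apps", "deployments", "list"))  # False (rule targets "extensions")
```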

[root@k8s01 skywalking]# kubectl apply -f rbac.yaml

serviceaccount/skywalking-oap created

clusterrole.rbac.authorization.k8s.io/skywalking created

clusterrolebinding.rbac.authorization.k8s.io/skywalking created

Deploy the data initialization Job

[root@k8s01 skywalking]# cat job.yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: "skywalking-es-init"
  namespace: skywalking
  labels:
    app: skywalking-job
spec:
  template:
    metadata:
      name: "skywalking-es-init"
      labels:
        app: skywalking-job
    spec:
      serviceAccountName: skywalking-oap
      restartPolicy: Never
      initContainers:
      - name: wait-for-elasticsearch
        image: busybox:1.30
        imagePullPolicy: IfNotPresent
        command: ['sh', '-c', 'for i in $(seq 1 60); do nc -z -w3 elasticsearch 9200 && exit 0 || sleep 5; done; exit 1']
      containers:
      - name: oap
        image: skywalking.docker.scarf.sh/apache/skywalking-oap-server:8.9.0
        imagePullPolicy: IfNotPresent
        env:
        # -Dmode=init: the OAP initializes the storage (ES indices) and then exits
        - name: JAVA_OPTS
          value: "-Xmx2g -Xms2g -Dmode=init"
        - name: SW_STORAGE
          value: elasticsearch
        - name: SW_STORAGE_ES_CLUSTER_NODES
          value: "elasticsearch:9200"
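Both the Job and the OAP Deployment use a busybox init container that probes `elasticsearch:9200` with `nc -z` up to 60 times, 5 seconds apart, so the main container only starts once ES accepts connections. The same retry loop sketched with Python's standard `socket` module; `wait_for_port` and its defaults are illustrative, chosen to mirror the shell one-liner.

```python
import socket
import time


def wait_for_port(host: str, port: int, attempts: int = 60,
                  timeout: float = 3.0, interval: float = 5.0) -> bool:
    """Return True once a TCP connection to host:port succeeds, False after `attempts` failures.

    Mirrors: for i in $(seq 1 60); do nc -z -w3 host port && exit 0 || sleep 5; done; exit 1
    """
    for _ in range(attempts):
        try:
            # Like `nc -z -w3`: open a TCP connection and close it immediately
            with socket.create_connection((host, port), timeout=timeout):
                return True
        except OSError:
            time.sleep(interval)
    return False


if __name__ == "__main__":
    # Inside the cluster this would be wait_for_port("elasticsearch", 9200)
    print(wait_for_port("127.0.0.1", 9200, attempts=1, interval=0))
```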

[root@k8s01 skywalking]# kubectl apply -f job.yaml

job.batch/skywalking-es-init created

Deploy OAP

[root@k8s01 skywalking]# cat oap.yaml
---
apiVersion: v1
kind: Service
metadata:
  name: oap-svc
  namespace: skywalking
  labels:
    app: oap
spec:
  type: ClusterIP
  ports:
  - port: 11800
    name: grpc
  - port: 12800
    name: rest
  selector:
    app: oap
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: oap
  name: oap
  namespace: skywalking
spec:
  replicas: 1
  selector:
    matchLabels:
      app: oap
  template:
    metadata:
      labels:
        app: oap
    spec:
      serviceAccountName: skywalking-oap
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 1
            podAffinityTerm:
              topologyKey: kubernetes.io/hostname
              labelSelector:
                matchLabels:
                  app: oap   # must match the pod template label above
      initContainers:
      - name: wait-for-elasticsearch
        image: busybox:1.30
        imagePullPolicy: IfNotPresent
        command: ['sh', '-c', 'for i in $(seq 1 60); do nc -z -w3 elasticsearch 9200 && exit 0 || sleep 5; done; exit 1']
      containers:
      - name: oap
        image: skywalking.docker.scarf.sh/apache/skywalking-oap-server:8.9.0
        imagePullPolicy: IfNotPresent
        livenessProbe:
          tcpSocket:
            port: 12800
          initialDelaySeconds: 15
          periodSeconds: 20
        readinessProbe:
          tcpSocket:
            port: 12800
          initialDelaySeconds: 15
          periodSeconds: 20
        ports:
        - containerPort: 11800
          name: grpc
        - containerPort: 12800
          name: rest
        env:
        - name: JAVA_OPTS
          value: "-Dmode=no-init -Xmx2g -Xms2g"
        - name: SW_CLUSTER
          value: kubernetes
        # Where the OAP looks for its peer pods: must match the namespace
        # the OAP is deployed in and the labels of its pod template
        - name: SW_CLUSTER_K8S_NAMESPACE
          value: "skywalking"
        - name: SW_CLUSTER_K8S_LABEL
          value: "app=oap"
        # Record data (traces, logs, etc.) retention, in days
        - name: SW_CORE_RECORD_DATA_TTL
          value: "2"
        # Metrics data retention, in days
        - name: SW_CORE_METRICS_DATA_TTL
          value: "2"
        - name: SKYWALKING_COLLECTOR_UID
          valueFrom:
            fieldRef:
              fieldPath: metadata.uid
        - name: SW_STORAGE
          value: elasticsearch
        - name: SW_STORAGE_ES_CLUSTER_NODES
          value: "elasticsearch:9200"
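`SW_CLUSTER_K8S_NAMESPACE` and `SW_CLUSTER_K8S_LABEL` tell the OAP's Kubernetes coordinator where to look for its peer pods, so the selector has to match the labels actually set in the pod template (`app: oap` here). A simplified sketch of equality-based label selector matching (comma-separated `key=value` terms) shows why a selector such as `app=skywalking,release=skywalking,component=oap` would not match these pods. The helper names are hypothetical, and set-based or `!=` selectors are deliberately not handled.

```python
from typing import Dict


def parse_selector(selector: str) -> Dict[str, str]:
    """Parse an equality-based selector like "app=oap,tier=backend" into a dict."""
    pairs: Dict[str, str] = {}
    for term in selector.split(","):
        term = term.strip()
        if not term:
            continue
        key, sep, value = term.partition("=")
        if not sep or key.endswith("!"):
            raise ValueError(f"unsupported selector term: {term!r}")
        pairs[key.strip()] = value.strip()
    return pairs


def matches(selector: str, pod_labels: Dict[str, str]) -> bool:
    # Every key=value term must be present on the pod; an empty selector matches all
    return all(pod_labels.get(k) == v for k, v in parse_selector(selector).items())


if __name__ == "__main__":
    oap_pod_labels = {"app": "oap"}  # labels from the Deployment's pod template
    print(matches("app=oap", oap_pod_labels))  # True
    print(matches("app=skywalking,release=skywalking,component=oap", oap_pod_labels))  # False
```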

[root@k8s01 skywalking]# kubectl apply -f oap.yaml

service/oap-svc created

deployment.apps/oap created

Deploy UI

[root@k8s01 skywalking]# cat ui.yaml
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: ui
  name: ui-svc
  namespace: skywalking
spec:
  type: ClusterIP
  ports:
  - port: 80
    targetPort: 8080
    protocol: TCP
  selector:
    app: ui
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ui
  namespace: skywalking
  labels:
    app: ui
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ui
  template:
    metadata:
      labels:
        app: ui
    spec:
      containers:
      - name: ui
        image: skywalking.docker.scarf.sh/apache/skywalking-ui:8.9.0
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 8080
          name: page
        env:
        - name: SW_OAP_ADDRESS
          value: http://oap-svc:12800 # must match the OAP Service name and REST port
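`SW_OAP_ADDRESS` can use the short Service name because the UI runs in the same namespace as `oap-svc`; from another namespace the name has to be qualified. A small sketch that builds both forms; `service_url` is a hypothetical helper and it assumes the default cluster DNS domain `cluster.local`, which may differ in your cluster.

```python
def service_url(service: str, port: int, namespace: str = "",
                scheme: str = "http", cluster_domain: str = "cluster.local") -> str:
    """Build an in-cluster URL for a Service.

    Without a namespace the short name is used, which resolves only from pods in
    the same namespace; with one, the fully qualified Service name is used.
    """
    host = service if not namespace else f"{service}.{namespace}.svc.{cluster_domain}"
    return f"{scheme}://{host}:{port}"


if __name__ == "__main__":
    print(service_url("oap-svc", 12800))
    # http://oap-svc:12800
    print(service_url("oap-svc", 12800, "skywalking"))
    # http://oap-svc.skywalking.svc.cluster.local:12800
```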

[root@k8s01 skywalking]# kubectl apply -f ui.yaml

service/ui-svc created

deployment.apps/ui created

Check the services
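Instead of reading the `kubectl get po` table by eye, the check can be scripted. A sketch that parses the default table output and reports pods that are neither `Running` with all containers ready nor a finished Job pod (`Completed`). `unhealthy_pods` is a hypothetical helper that assumes the default columns (NAME READY STATUS RESTARTS AGE); it is not a substitute for `kubectl wait`.

```python
from typing import List


def unhealthy_pods(kubectl_output: str) -> List[str]:
    """Return pod names that look unhealthy in `kubectl get po` table output.

    Healthy: STATUS Running with READY n/n, or STATUS Completed (finished Job pods).
    """
    bad = []
    rows = [ln for ln in kubectl_output.strip().splitlines() if ln.strip()]
    for row in rows[1:]:  # skip the header row
        name, ready, status = row.split()[:3]
        if status == "Completed":  # e.g. the skywalking-es-init Job pod
            continue
        ready_now, ready_total = ready.split("/")
        if status != "Running" or ready_now != ready_total:
            bad.append(name)
    return bad


if __name__ == "__main__":
    sample = """NAME READY STATUS RESTARTS AGE
es-0 1/1 Running 0 61m
oap-ddffcff58-rx85j 1/1 Running 0 55m
skywalking-es-init-vtknp 0/1 Completed 0 59m
ui-c5c5fd8b4-26vtt 1/1 Running 0 54m
"""
    print(unhealthy_pods(sample))  # []
```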

[root@k8s01 skywalking]# kubectl get -n skywalking po

NAME                       READY   STATUS      RESTARTS   AGE
es-0                       1/1     Running     0          61m
es-1                       1/1     Running     0          61m
es-2                       1/1     Running     0          61m
oap-ddffcff58-rx85j        1/1     Running     0          55m
skywalking-es-init-vtknp   0/1     Completed   0          59m
ui-c5c5fd8b4-26vtt         1/1     Running     0          54m

Access the system

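Connecting a test project to the agent

Building the agent image

Download the agent that matches your backend language from the download page; this article uses the Java agent.

Download the Java agent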

[root@tools agent]# wget https://archive.apache.org/dist/skywalking/java-agent/8.10.0/apache-skywalking-java-agent-8.10.0.tgz

[root@tools agent]# tar xf apache-skywalking-java-agent-8.10.0.tgz

[root@tools agent]# ls

apache-skywalking-java-agent-8.10.0.tgz  skywalking-agent

Ignoring traces (excluding paths from tracing)
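`trace.ignore_path` takes a comma-separated list of Ant-style patterns that are matched against operation (endpoint) names. Per the comments in the plugin's config file, `?` matches a single character, `*` any number of characters, and `**` any number of characters across multiple path levels. A rough Python approximation for checking which operation names a pattern list would drop; translating the patterns to regular expressions (with `*` and `?` not crossing `/`) is an assumption of this sketch, not the plugin's actual matcher.

```python
import re
from typing import Pattern


def _to_regex(pattern: str) -> Pattern[str]:
    # Approximate Ant-style rules: ** spans '/', while * and ? stay within one level
    out = []
    i = 0
    while i < len(pattern):
        if pattern.startswith("**", i):
            out.append(".*")
            i += 2
        elif pattern[i] == "*":
            out.append("[^/]*")
            i += 1
        elif pattern[i] == "?":
            out.append("[^/]")
            i += 1
        else:
            out.append(re.escape(pattern[i]))
            i += 1
    return re.compile("".join(out))


def is_ignored(operation_name: str, ignore_path: str) -> bool:
    """True if the operation name fully matches any comma-separated pattern."""
    return any(_to_regex(p.strip()).fullmatch(operation_name)
               for p in ignore_path.split(",") if p.strip())


if __name__ == "__main__":
    patterns = ("GET:/actuator/**,Redisson/**,Mysql/**,HikariCP/**,"
                "Lettuce/**,/xxl-job/**,UndertowDispatch/**")
    print(is_ignored("GET:/actuator/health", patterns))  # True
    print(is_ignored("GET:/api/orders", patterns))       # False
```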

[root@tools agent]# cd skywalking-agent

[root@tools skywalking-agent]# cp optional-plugins/apm-trace-ignore-plugin-8.10.0.jar plugins/

[root@tools skywalking-agent]# vim config/apm-trace-ignore-plugin.config

trace.ignore_path=${SW_AGENT_TRACE_IGNORE_PATH:GET:/actuator/**,Redisson/**,Mysql/**,HikariCP/**,Lettuce/**,/xxl-job/**,UndertowDispatch/**}

Dockerfile for the image

[root@tools agent]# vim Dockerfile

FROM alpine:3
# Set the time zone to UTC+8 (Asia/Shanghai)
RUN apk add --no-cache tzdata
ENV TZ=Asia/Shanghai
RUN ln -snf /usr/share/zoneinfo/$TZ /etc/localtime && echo $TZ > /etc/timezone
ENV LANG=C.UTF-8
RUN set -eux && mkdir -p /data
ADD skywalking-agent /data/agent
WORKDIR /

[root@tools agent]# docker build -t harbor.xxx.cn/skywalking/agent-sidecar:8.9.0 .

Connecting microservices to the agent

Here we simply connect a few projects such as eureka and getway.

eureka Dockerfile

FROM 10.133.193.241/base/centos7:base
COPY eureka.jar /home
ENV JVM_OPTS="-Xss256k -XX:MaxRAMPercentage=80.0 -Duser.timezone=Asia/Shanghai -Djava.security.egd=file:/dev/./urandom"
ENV JAVA_OPTS=""
EXPOSE 8081
WORKDIR /home
ENTRYPOINT [ "sh", "-c", "java $JVM_OPTS $JAVA_OPTS -jar eureka.jar"]

Deploy eureka

[root@k8s01 ~]# cat eureka-finance5-test.yaml
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: eureka-finance5-test
  namespace: skywalking
  labels:
    app: eureka-finance5-test
spec:
  replicas: 1
  selector:
    matchLabels:
      app: eureka-finance5-test
  template:
    metadata:
      labels:
        app: eureka-finance5-test
    spec:
      imagePullSecrets:
      - name: harborsecret
      initContainers:
      - name: sidecar
        image: harbor.xxx.cn/skywalking/agent-sidecar:8.9.0 # image that only carries the agent files
        imagePullPolicy: Always
        command: ["cp", "-r", "/data/agent", "/sidecar"]
        volumeMounts:
        - name: sidecar
          mountPath: /sidecar
      containers:
      - name: eureka-finance5-test
        image: harbor.xxx.cn/itsm/eureka:finance5-test
        imagePullPolicy: Always
        env:
        - name: JAVA_OPTS
          value: "-javaagent:/sidecar/agent/skywalking-agent.jar"
        - name: SW_AGENT_NAME
          value: eureka-finance5-test
        - name: SW_AGENT_COLLECTOR_BACKEND_SERVICES # OAP address
          value: oap-svc:11800
        resources:
          limits:
            memory: "1Gi"
          requests:
            memory: "1Gi"
        ports:
        - name: http
          containerPort: 8081
          protocol: TCP
        volumeMounts:
        - name: date
          mountPath: /etc/localtime
        - name: sidecar
          mountPath: /sidecar
      volumes:
      - name: date
        hostPath:
          path: /etc/localtime
      - name: sidecar # shared agent directory
        emptyDir: {}
---
apiVersion: v1
kind: Service
metadata:
  name: eureka-finance5-test-svc
  namespace: skywalking
  labels:
    app: eureka-finance5-test
spec:
  sessionAffinity: "ClientIP"
  ports:
  - name: http
    port: 8081
    protocol: TCP
    targetPort: 8081
    nodePort: 30835
  selector:
    app: eureka-finance5-test
  type: NodePort
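The sidecar setup works because the Deployment's `JAVA_OPTS` overrides the empty `ENV JAVA_OPTS=""` from the eureka Dockerfile, and the `sh -c` entrypoint expands it into the `java` command line. JVM options such as `-javaagent` must appear before `-jar`; anything after the jar name is passed to the application instead. A small sketch that reproduces the expansion as plain whitespace splitting (what unquoted `$VAR` does with the default IFS, ignoring globbing), handy for double-checking the final arguments; `java_argv` is a hypothetical helper.

```python
from typing import Dict, List


def java_argv(env: Dict[str, str], jar: str) -> List[str]:
    """Approximate `sh -c "java $JVM_OPTS $JAVA_OPTS -jar <jar>"`.

    Unquoted variable expansion splits on whitespace; quotes inside the values
    are not interpreted, so str.split() is used rather than shell-style parsing.
    """
    return (["java"]
            + env.get("JVM_OPTS", "").split()
            + env.get("JAVA_OPTS", "").split()
            + ["-jar", jar])


if __name__ == "__main__":
    env = {
        # From the eureka Dockerfile
        "JVM_OPTS": "-Xss256k -XX:MaxRAMPercentage=80.0 -Duser.timezone=Asia/Shanghai "
                    "-Djava.security.egd=file:/dev/./urandom",
        # Overridden by the Deployment's env
        "JAVA_OPTS": "-javaagent:/sidecar/agent/skywalking-agent.jar",
    }
    argv = java_argv(env, "eureka.jar")
    print(argv[-3])  # -javaagent:/sidecar/agent/skywalking-agent.jar
```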

[root@k8s01 ~]# kubectl apply -f eureka-finance5-test.yaml

Deploy the remaining projects one by one following the steps above.

[root@k8s01 ~]# kubectl get -n skywalking po

NAME READY STATUS RESTARTS AGE

basics-finance5-test-7665cf7f6d-ndm2n 1/1 Running 0 24h

es-0 1/1 Running 0 2d23h

es-1 1/1 Running 0 2d23h

es-2 1/1 Running 0 2d23h

eureka-finance5-test-58db7dbb78-szjrq 1/1 Running 0 24h

formflow-finance5-test-57bdfc768-sxzb5 1/1 Running 0 23h

getway-finance5-test-6dd6d9f864-lblpb 1/1 Running 0 24h

oap-7fdfb46778-szvc4 1/1 Running 0 45h

solr-finance5-test-9c9d77fd4-vnp6h 1/1 Running 0 23h

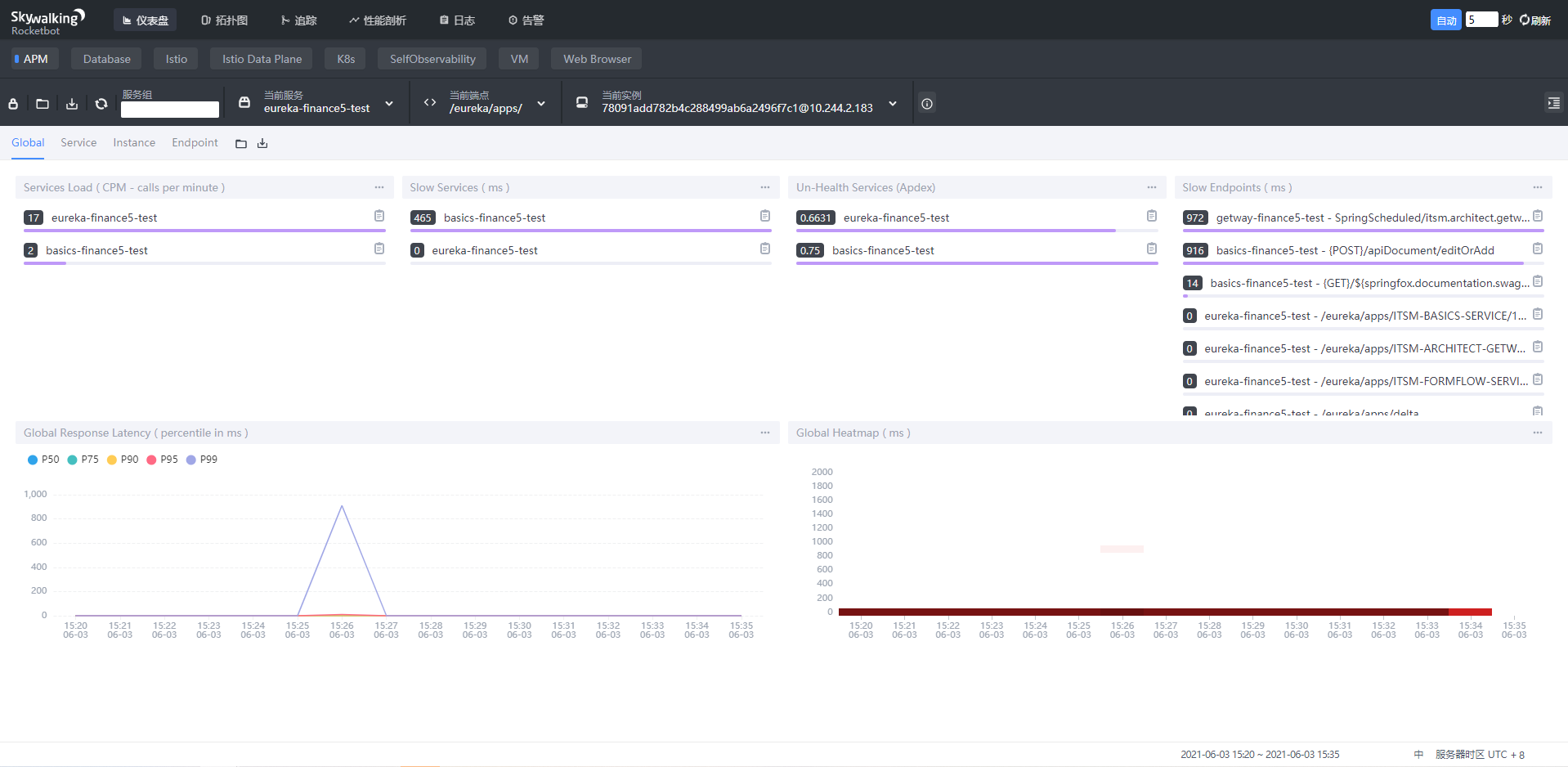

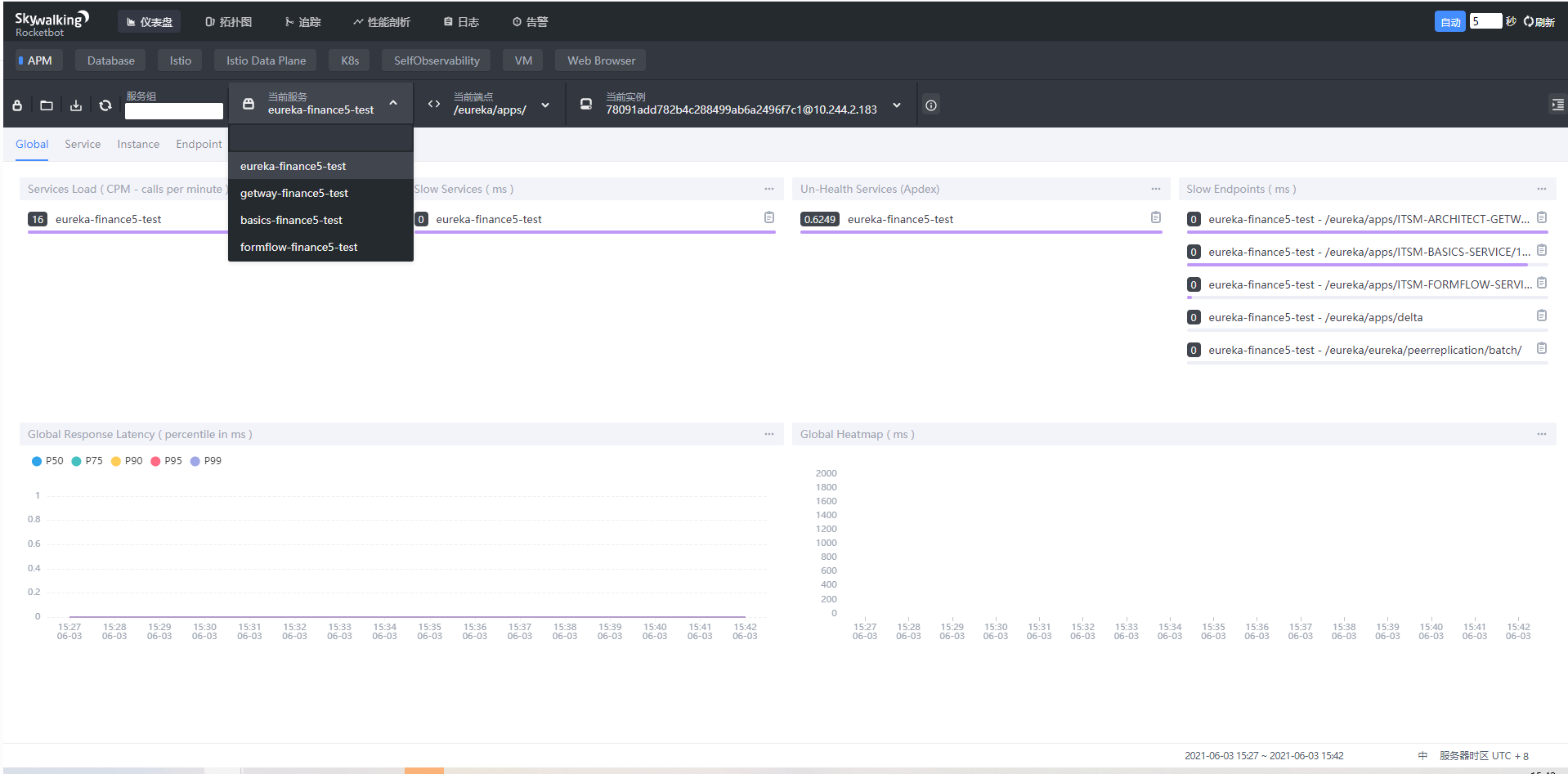

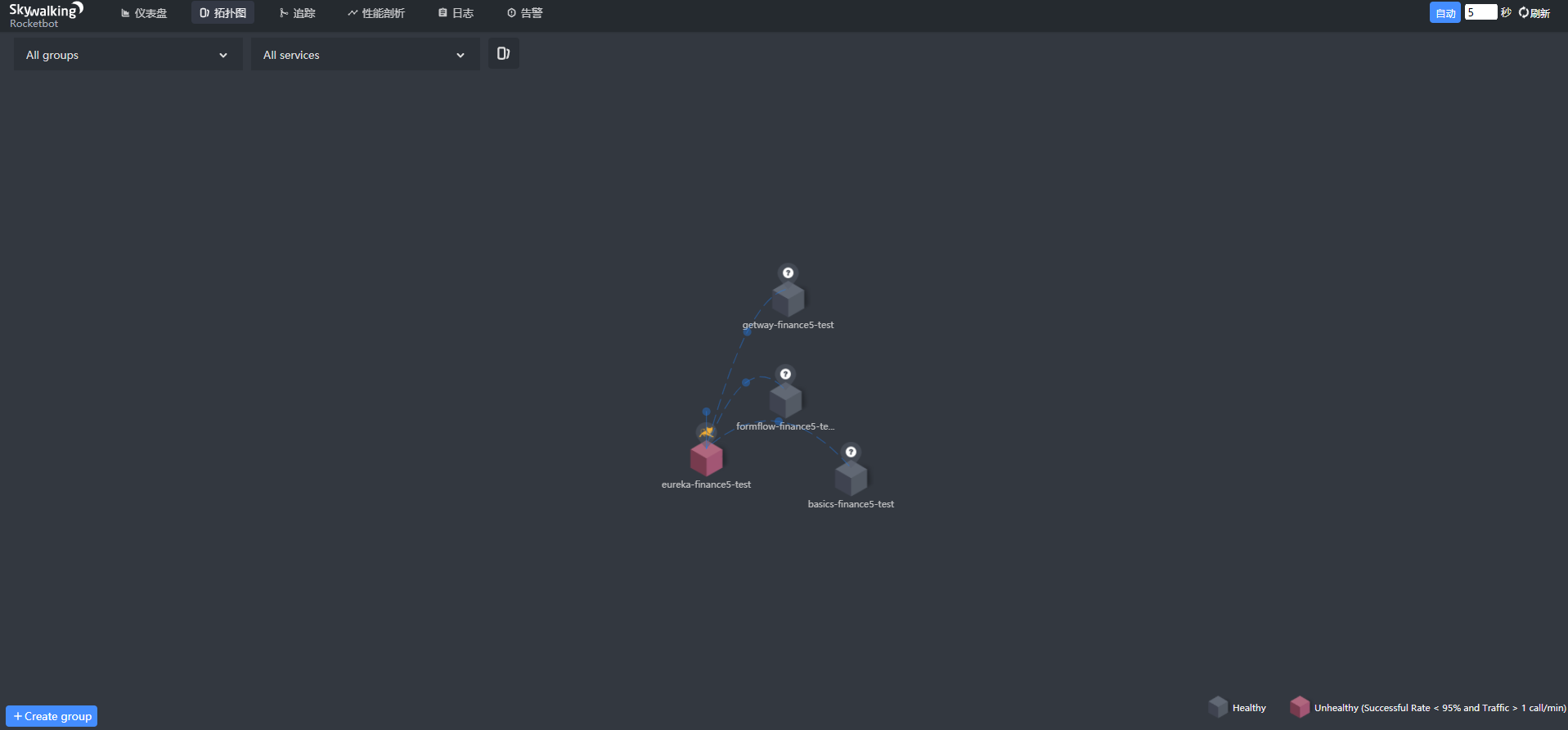

ui-6476779bb4-k4xgt 1/1 Running 0 28h访问UI查看

Copyright notice:

Unless otherwise stated, all articles on this site are licensed under CC BY-NC-SA 4.0. When reposting, please credit 爱吃可爱多!
