Building an EFLK Logging System on Kubernetes

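System environment

Kubernetes version: 1.20.0

Elasticsearch version: 7.6.2

Logstash version: 7.6.2

Fluentd version: 3.4.0 (tag of the quay.io/fluentd_elasticsearch/fluentd image)

Filebeat version: 7.6.2

Zookeeper version: 3.5.9

Kafka version: 2.4.1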

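Elasticsearch is a real-time, distributed, scalable search engine that supports full-text and structured search. It is commonly used to index and search large volumes of log data, and it can also search many other kinds of documents.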

Elasticsearch is usually deployed together with Kibana, a powerful data-visualization dashboard for Elasticsearch that lets you browse the log data stored in Elasticsearch through a web interface.

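Fluentd is a popular open-source data collector. Installed on every node of the Kubernetes cluster, it reads the container log files, filters and transforms the log data, and passes it on to the Elasticsearch cluster, where it is indexed and stored.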
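As a rough illustration of the "filter and transform" step, the sketch below parses one container log line in either Docker's JSON format or the CRI text format, similar in shape to the two patterns in the Fluentd parse section later in this article. The function name parse_container_line and the sample lines are hypothetical, and this is a simplified stand-in rather than Fluentd's own implementation.

```python
import json
import re

# CRI log line: "<time> <stream> <flag> <log>", similar in shape to the regex
# pattern in the Fluentd configuration later in this article.
CRI_LINE = re.compile(r"^(?P<time>\S+) (?P<stream>stdout|stderr) [^ ]* (?P<log>.*)$")


def parse_container_line(line):
    """Return a dict with time, stream and log keys, or None if unparseable."""
    line = line.rstrip("\n")
    # First try the Docker json-file format.
    try:
        record = json.loads(line)
        if isinstance(record, dict) and "log" in record:
            return {
                "time": record.get("time"),
                "stream": record.get("stream"),
                "log": record["log"],
            }
    except ValueError:
        pass
    # Fall back to the CRI (containerd) text format.
    match = CRI_LINE.match(line)
    if match:
        return match.groupdict()
    return None


# Hypothetical sample lines, one per format.
docker_line = '{"log":"hello\\n","stream":"stdout","time":"2021-01-01T00:00:00.000000000Z"}'
cri_line = "2021-01-01T00:00:00.000000000+08:00 stderr F boom"

print(parse_container_line(docker_line))
print(parse_container_line(cri_line))
```

Trying the formats in order and falling back is the same idea as listing several pattern blocks under a multi_format parser.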

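In a large cluster the log volume is enormous, and having Fluentd write directly to Elasticsearch puts heavy pressure on ES. Placing a message queue between the two relieves that pressure; Kafka is the usual choice.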

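Logstash dynamically unifies data from different sources and normalizes it into the destinations you choose. It ships with a large number of plugins that help parse, enrich, transform and buffer any type of data. In this article it is used to filter events and to create indices according to Pod labels; Fluentd can also do all of this directly in a single step.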
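To make "create indices according to Pod labels" concrete, here is a minimal sketch of the naming scheme that the Logstash output later in this article expresses as prod-%{[kubernetes][labels][app]}-%{+YYYY_MM_dd}. The function index_name, the "unknown" fallback and the sample event are assumptions made for illustration only; Logstash itself derives the date part from the event's @timestamp in UTC.

```python
from datetime import datetime, timezone


def index_name(event, when, prefix="prod"):
    """Build '<prefix>-<app label>-<YYYY_MM_dd>' for one event."""
    labels = event.get("kubernetes", {}).get("labels", {})
    # Hypothetical fallback: Logstash itself would leave the literal
    # "%{[kubernetes][labels][app]}" in the name when the field is missing.
    app = labels.get("app", "unknown")
    return f"{prefix}-{app}-{when.strftime('%Y_%m_%d')}"


# Hypothetical event carrying Kubernetes metadata added by the collector.
event = {"kubernetes": {"labels": {"app": "order-service", "logging": "true"}}}
print(index_name(event, datetime(2021, 3, 5, tzinfo=timezone.utc)))
```

One index per application per day keeps retention simple, since old data can be removed by deleting whole indices.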

Deploying the Elasticsearch cluster

To set an account and password for ES, see the post "ELK设置密码" (setting passwords for ELK).

[root@k8s01 efk]# vim elasticsearch.yaml

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: es
  namespace: tools-env
spec:
  serviceName: elasticsearch
  replicas: 3
  selector:
    matchLabels:
      app: elasticsearch
  template:
    metadata:
      labels:
        app: elasticsearch
    spec:
      initContainers:
      - name: increase-vm-max-map
        image: busybox
        imagePullPolicy: IfNotPresent
        command: ["sysctl", "-w", "vm.max_map_count=262144"]
        securityContext:
          privileged: true
      - name: increase-fd-ulimit
        image: busybox
        imagePullPolicy: IfNotPresent
        command: ["sh", "-c", "ulimit -n 65536"]
        securityContext:
          privileged: true
      containers:
      - name: elasticsearch
        image: docker.elastic.co/elasticsearch/elasticsearch:7.6.2
        imagePullPolicy: IfNotPresent
        ports:
        - name: rest
          containerPort: 9200
        - name: inter
          containerPort: 9300
        resources:
          limits:
            cpu: 1000m
          requests:
            cpu: 1000m
        volumeMounts:
        - name: data
          mountPath: /usr/share/elasticsearch/data
        - name: keystore
          mountPath: /usr/share/elasticsearch/config/elastic-certificates.p12
          readOnly: true
          subPath: elastic-certificates.p12
        - name: localtime
          mountPath: /etc/localtime
        env:
        - name: cluster.name
          value: k8s-logs
        - name: node.name
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: cluster.initial_master_nodes
          value: "es-0,es-1,es-2"
        - name: discovery.zen.minimum_master_nodes
          value: "2"
        - name: discovery.seed_hosts
          value: "elasticsearch"
        - name: ES_JAVA_OPTS
          value: "-Xms512m -Xmx512m"
        - name: network.host
          value: "0.0.0.0"
        - name: "xpack.security.enabled"
          value: "true"
        - name: xpack.security.transport.ssl.enabled
          value: "true"
        - name: xpack.security.transport.ssl.verification_mode
          value: "certificate"
        - name: xpack.security.transport.ssl.keystore.path
          value: "/usr/share/elasticsearch/config/elastic-certificates.p12"
        - name: xpack.security.transport.ssl.truststore.path
          value: "/usr/share/elasticsearch/config/elastic-certificates.p12"
      volumes:
      - name: keystore
        secret:
          secretName: es-keystore
          defaultMode: 0444
      - name: localtime
        hostPath:
          path: /etc/localtime
  volumeClaimTemplates:
  - metadata:
      name: data
      labels:
        app: elasticsearch
    spec:
      accessModes: [ "ReadWriteOnce" ]
      storageClassName: managed-nfs-storage
      resources:
        requests:
          storage: 50Gi
---
kind: Service
apiVersion: v1
metadata:
  name: elasticsearch
  namespace: tools-env
  labels:
    app: elasticsearch
spec:
  selector:
    app: elasticsearch
  clusterIP: None
  ports:
  - port: 9200
    name: rest
  - port: 9300
    name: inter-node
---
kind: Service
apiVersion: v1
metadata:
  name: elasticsearch-svc
  namespace: tools-env
  labels:
    app: elasticsearch
spec:
  selector:
    app: elasticsearch
  ports:
  - port: 9200
    name: rest
    nodePort: 30920
  - port: 9300
    name: inter-node
    nodePort: 30930
  type: NodePort

Deploying the Kafka cluster

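The Kafka deployment process is not repeated here; see the post "Kubernetes部署Zookeeper Kafka集群" (deploying a Zookeeper and Kafka cluster on Kubernetes).

Deploying Fluentd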

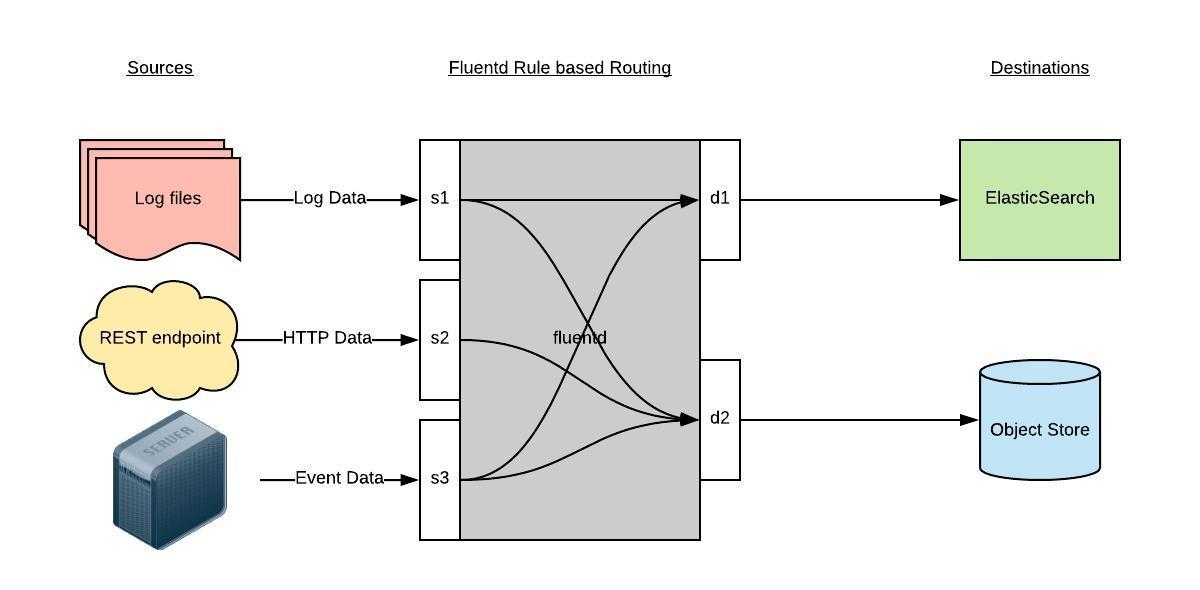

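Fluentd collects log data from a given set of sources, processes it (converting it into a structured format), and forwards it to other services such as Elasticsearch or object storage. Fluentd supports more than 300 log storage and analysis services. It works in three main steps:

First, Fluentd acquires data from multiple log sources

It then structures and tags the data

Finally, it sends the data to one or more destination services according to the matching tags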
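The tag-matching step can be sketched in a few lines. The example below is a simplified model, not Fluentd's code: it assumes that "*" matches exactly one dot-separated tag part, that "**" matches zero or more parts, and that the first matching rule wins. The helper names tag_matches and route and the destination labels are hypothetical; the two patterns are the ones used in the configuration in this article.

```python
import re


def tag_matches(pattern, tag):
    """Check a tag against a Fluentd-style pattern using * and ** wildcards."""
    pieces = []
    for i, part in enumerate(pattern.split(".")):
        sep = r"\." if i > 0 else ""
        if part == "**":
            # "**" absorbs zero or more dot-separated parts, separator included.
            pieces.append(r"(?:%s[^.]+(?:\.[^.]+)*)?" % sep)
        elif part == "*":
            # "*" stands for exactly one tag part.
            pieces.append(sep + r"[^.]+")
        else:
            pieces.append(sep + re.escape(part))
    return re.fullmatch("".join(pieces), tag) is not None


# Ordered rules: the first pattern that matches decides the destination.
ROUTES = [
    ("raw.kubernetes.**", "detect_exceptions"),
    ("**", "kafka"),
]


def route(tag):
    """Return the destination label of the first matching rule, or None."""
    for pattern, destination in ROUTES:
        if tag_matches(pattern, tag):
            return destination
    return None


print(route("raw.kubernetes.var.log.containers.app.log"))
print(route("kubernetes.var.log.containers.app.log"))
```

This is why the configuration strips the raw prefix after exception detection: the re-emitted events no longer match the first rule and fall through to the later filters and the Kafka output.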

Deploying the Fluentd configuration file

[root@k8s01 efk]# vim fluentd-config.yaml

kind: ConfigMap
apiVersion: v1
metadata:
  name: fluentd-conf
  namespace: tools-env
data:
  containers.input.conf: |-
    <source>
      @id fluentd-containers.log
      @type tail # built-in Fluentd input that keeps reading new lines from the source files
      path /var/log/containers/*.log # container log path
      pos_file /var/log/es-containers.log.pos # records the read position
      tag raw.kubernetes.* # log tag
      read_from_head true # read from the beginning of the file
      <parse> # parse the supported line formats into JSON
        # multi-line logs can be handled with the multiline plugin introduced earlier
        @type multi_format # use the multi-format-parser plugin
        <pattern>
          format json # JSON parser
          time_key time # field that holds the event time
          time_format %Y-%m-%dT%H:%M:%S.%NZ # time format
        </pattern>
        <pattern>
          format /^(?<time>.+) (?<stream>stdout|stderr) [^ ]* (?<log>.*)$/
          time_format %Y-%m-%dT%H:%M:%S.%N%:z
        </pattern>
      </parse>
    </source>
    # Detect exceptions (multi-line logs) in the log output and forward each one as a single event
    # https://github.com/GoogleCloudPlatform/fluent-plugin-detect-exceptions
    <match raw.kubernetes.**> # match events tagged raw.kubernetes.**
      @id raw.kubernetes
      @type detect_exceptions # use the detect-exceptions plugin to handle stack traces
      remove_tag_prefix raw # strip the raw prefix
      message log
      multiline_flush_interval 5
    </match>
    <filter **> # concatenate logs
      @id filter_concat
      @type concat # Fluentd filter plugin that joins multi-line logs split across several events
      key message
      multiline_end_regexp /\n$/ # an event ends at the newline character "\n"
      separator ""
    </filter>
    <filter kubernetes.**>
      @type concat
      key message
      format_firstline /^\[/ # regular expressions for Java multi-line logs; lines starting with "at" belong to the same entry
      multiline_end_regexp /^at/
      separator ""
      flush_interval 5s
    </filter>
    # Add Kubernetes metadata
    <filter kubernetes.**>
      @id filter_kubernetes_metadata
      @type kubernetes_metadata
    </filter>
    # Fix JSON fields for ES
    # Plugin: https://github.com/repeatedly/fluent-plugin-multi-format-parser
    <filter kubernetes.**>
      @id filter_parser
      @type parser # parser filter, used here with the multi-format-parser plugin
      key_name log # name of the field to parse
      reserve_data true # keep the original key-value pairs in the parsed result
      remove_key_name_field true # remove the key_name field after it is parsed successfully
      <parse>
        @type multi_format
        <pattern>
          format json
        </pattern>
        <pattern>
          format none
        </pattern>
      </parse>
    </filter>
    # Remove some redundant attributes
    <filter kubernetes.**>
      @type record_transformer
      remove_keys $.docker.container_id,$.kubernetes.container_image_id,$.kubernetes.pod_id,$.kubernetes.namespace_id,$.kubernetes.master_url,$.kubernetes.labels.pod-template-hash
    </filter>
    # Keep only logs from Pods labelled logging=true
    <filter kubernetes.**>
      @id filter_log
      @type grep
      <regexp>
        key $.kubernetes.labels.logging
        pattern ^true$
      </regexp>
    </filter>
  ###### Listener configuration, generally used for log aggregation ######
  forward.input.conf: |-
    # Listen for messages sent over TCP
    <source>
      @id forward
      @type forward
    </source>
  output.conf: |-
    <match **>
      @id kafka
      @type kafka2
      @log_level info
      brokers kafka-0.kafka-hs.tools-env.svc.cluster.local:9092,kafka-1.kafka-hs.tools-env.svc.cluster.local:9092,kafka-2.kafka-hs.tools-env.svc.cluster.local:9092
      use_event_time true
      topic_key k8slog
      default_topic messages # note: this is the topic that consumers read from Kafka
      <buffer k8slog>
        @type file
        path /var/log/td-agent/buffer/td
        flush_interval 3s
      </buffer>
      <format>
        @type json
      </format>
      required_acks -1
      compression_codec gzip
    </match>

[root@k8s01 efk]# kubectl apply -f fluentd-config.yaml

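configmap/fluentd-conf configured

If you do not filter through Logstash, Fluentd can do the filtering itself and write straight to Elasticsearch with the following configuration: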

[root@k8s01 efk]# vim fluentd-config.yaml

...
    <filter kubernetes.**>
      @type kubernetes_metadata
    </filter>
...
  output.conf: |-
    <match kubernetes.**>
      @id elasticsearch_dynamic
      @type elasticsearch_dynamic
      @log_level info
      type_name _doc
      include_tag_key true
      host elasticsearch
      port 9200
      user elastic
      password VpzE6s!muV
      logstash_format true
      logstash_prefix prod-${record['kubernetes']['labels']['app']}
      <buffer>
        @type file
        path /var/log/fluentd-buffers/kubernetes-dynamic.system.buffer
        flush_mode interval
        retry_type exponential_backoff
        flush_thread_count 2
        flush_interval 5s
        retry_forever
        retry_max_interval 30
        chunk_limit_size 2M
        total_limit_size 500M
        overflow_action block
      </buffer>
    </match>
    <match **>
      @id elasticsearch
      @type elasticsearch
      @log_level info
      type_name _doc
      include_tag_key true
      host elasticsearch
      port 9200
      user elastic
      password VpzE6s!muV
      logstash_format true
      <buffer>
        @type file
        path /var/log/fluentd-buffers/kubernetes.system.buffer
        flush_mode interval
        retry_type exponential_backoff
        flush_thread_count 2
        flush_interval 5s
        retry_forever
        retry_max_interval 30
        chunk_limit_size 2M
        total_limit_size 500M
        overflow_action block
      </buffer>
    </match>

Deploying the Fluentd application

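To send Fluentd's log data to Kafka, you only need to change the output section (the <match> block) of the Fluentd configuration to use the Kafka plugin. Writing to Kafka from Fluentd requires the fluent-plugin-kafka plugin, however, so a custom Docker image is needed. The simplest approach is to add the Kafka plugin on top of the Fluentd image used above. The Dockerfile looks like this: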

FROM quay.io/fluentd_elasticsearch/fluentd:v3.4.0

RUN echo "source 'https://mirrors.tuna.tsinghua.edu.cn/rubygems/'" > Gemfile && gem install bundler

RUN gem install fluent-plugin-kafka -v 0.17.5 --no-document

[root@k8s01 efk]# vim fluentd-ds.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: fluentd
  namespace: tools-env
  labels:
    k8s-app: fluentd
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: fluentd
  labels:
    k8s-app: fluentd
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
rules:
- apiGroups:
  - ""
  resources:
  - "namespaces"
  - "pods"
  verbs:
  - "get"
  - "watch"
  - "list"
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: fluentd
  labels:
    k8s-app: fluentd
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
subjects:
- kind: ServiceAccount
  name: fluentd
  namespace: tools-env
  apiGroup: ""
roleRef:
  kind: ClusterRole
  name: fluentd
  apiGroup: ""
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: fluentd
  namespace: tools-env
  labels:
    app: fluentd
    kubernetes.io/cluster-service: "true"
spec:
  selector:
    matchLabels:
      app: fluentd
  template:
    metadata:
      labels:
        app: fluentd
        kubernetes.io/cluster-service: "true"
    spec:
      serviceAccountName: fluentd
      imagePullSecrets:
      - name: harborsecret
      containers:
      - name: fluentd
        #image: quay.io/fluentd_elasticsearch/fluentd:v3.4.0
        image: harbor.xxx.cn/tools/fluentd:v3.4.0
        volumeMounts:
        - name: fluentconfig
          mountPath: /etc/fluent/config.d
        - name: varlog
          mountPath: /var/log
      volumes:
      - name: fluentconfig
        configMap:
          name: fluentd-conf
      - name: varlog
        hostPath:
          path: /var/log

[root@k8s01 efk]# kubectl apply -f fluentd-ds.yaml

serviceaccount/fluentd created

clusterrole.rbac.authorization.k8s.io/fluentd created

clusterrolebinding.rbac.authorization.k8s.io/fluentd created

daemonset.apps/fluentd created

[root@k8s01 efk]# kubectl get -n tools-env pod -l app=fluentd

NAME            READY   STATUS    RESTARTS   AGE
fluentd-292ls   1/1     Running   0          2m48s
fluentd-4m2kb   1/1     Running   0          2m48s
fluentd-5549g   1/1     Running   0          2m48s
fluentd-8z5lx   1/1     Running   0          2m48s
fluentd-p4p57   1/1     Running   0          2m48s
fluentd-xc847   1/1     Running   0          2m48s

Deploying Filebeat

Filebeat configuration file

[root@k8s01 efk]# vim filebeat-config.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: filebeat-config
  namespace: tools-env
  labels:
    k8s-app: filebeat
data:
  filebeat.yml: |-
    filebeat.inputs:
    - type: container
      paths:
        - /var/log/containers/*.log
      multiline.pattern: '^\[' # join multi-line Java error logs into a single event
      multiline.negate: true
      multiline.match: after
      multiline.timeout: 30
      fields:
        service: k8s-log
      processors:
        - add_kubernetes_metadata:
            # add Kubernetes metadata
            in_cluster: true
            default_indexers.enabled: true
            default_matchers.enabled: true
            host: ${NODE_NAME}
            matchers:
            - logs_path:
                logs_path: "/var/log/containers/"
        - drop_fields:
            fields: ["host", "tags", "ecs", "log", "prospector", "agent", "input", "beat", "offset"]
            ignore_missing: true
        - drop_event.when:
            not:
              contains:
                kubernetes.labels.logging: "true"
            # only collect logs from Pods labelled logging=true
    output.kafka:
      enabled: true
      hosts: ["kafka-0.kafka-hs.tools-env.svc.cluster.local:9092","kafka-1.kafka-hs.tools-env.svc.cluster.local:9092","kafka-2.kafka-hs.tools-env.svc.cluster.local:9092"]
      topic: k8slogs # Logstash must subscribe to this topic too; the pipeline in this article lists only "messages"

Deploying the Filebeat application

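Pay special attention to the container runtime: the log directories to mount differ between containerd and Docker. With containerd, mounting /var/log is sufficient. With Docker, the files under /var/log/containers are symlinks that ultimately point into /var/lib/docker/containers, so that directory must be mounted as well.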
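One way to confirm that the mounts are complete is to look for dangling symlinks from inside the collector container. The script below is a small diagnostic sketch under that assumption; the function name dangling_log_links is hypothetical, and the default directory is the path used by the Filebeat input in this article.

```python
import glob
import os


def dangling_log_links(log_dir="/var/log/containers"):
    """Return the *.log symlinks under log_dir whose targets cannot be resolved."""
    broken = []
    for path in sorted(glob.glob(os.path.join(log_dir, "*.log"))):
        # os.path.exists follows symlinks, so it is False for a dangling link.
        if os.path.islink(path) and not os.path.exists(path):
            broken.append(path)
    return broken


if __name__ == "__main__":
    for path in dangling_log_links():
        # A hit here usually means the symlink target directory is not mounted.
        print("unreachable target:", path, "->", os.readlink(path))
```

If the script prints nothing, every log symlink resolves inside the container and the collector should be able to read the files.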

[root@k8s01 efk]# vim filebeat-ds.yaml

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: filebeat
  namespace: tools-env
  labels:
    k8s-app: filebeat
spec:
  selector:
    matchLabels:
      k8s-app: filebeat
  template:
    metadata:
      labels:
        k8s-app: filebeat
    spec:
      serviceAccountName: filebeat
      terminationGracePeriodSeconds: 30
      hostNetwork: true
      dnsPolicy: ClusterFirstWithHostNet
      containers:
      - name: filebeat
        image: docker.elastic.co/beats/filebeat:7.6.2
        args: [
          "-c", "/etc/filebeat.yml",
          "-e",
        ]
        env:
        - name: ELASTICSEARCH_HOST
          value: elasticsearch
        - name: ELASTICSEARCH_PORT
          value: "9200"
        - name: ELASTICSEARCH_USERNAME
          value: elastic
        - name: ELASTICSEARCH_PASSWORD
          value: VpzE6s!muV
        - name: NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: spec.nodeName
        securityContext:
          runAsUser: 0
        resources:
          limits:
            memory: 200Mi
          requests:
            cpu: 100m
            memory: 100Mi
        volumeMounts:
        - name: config
          mountPath: /etc/filebeat.yml
          readOnly: true
          subPath: filebeat.yml
        - name: data
          mountPath: /usr/share/filebeat/data
        - name: varlog
          mountPath: /var/log
          readOnly: true
      volumes:
      - name: config
        configMap:
          defaultMode: 0640
          name: filebeat-config
      - name: varlog
        hostPath:
          path: /var/log
      - name: data
        hostPath:
          path: /var/lib/filebeat-data
          type: DirectoryOrCreate
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: filebeat
subjects:
- kind: ServiceAccount
  name: filebeat
  namespace: tools-env
roleRef:
  kind: ClusterRole
  name: filebeat
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: filebeat
  labels:
    k8s-app: filebeat
rules:
- apiGroups: [""]
  resources:
  - namespaces
  - pods
  verbs:
  - get
  - watch
  - list
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: filebeat
  namespace: tools-env
  labels:
    k8s-app: filebeat

Deploying Logstash

Logstash configuration file

[root@k8s01 efk]# vim logstash-config.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: logstash-config
  namespace: tools-env
  labels:
    app: logstash
data:
  logstash.conf: |-
    input {
      kafka {
        bootstrap_servers => "kafka-0.kafka-hs.tools-env.svc.cluster.local:9092,kafka-1.kafka-hs.tools-env.svc.cluster.local:9092,kafka-2.kafka-hs.tools-env.svc.cluster.local:9092"
        codec => json
        consumer_threads => 3
        topics => ["messages"]
      }
    }
    filter {
      grok {
        match => { "message" => "(%{TIMESTAMP_ISO8601:logdatetime} %{LOGLEVEL:level} %{GREEDYDATA:logmessage})|%{GREEDYDATA:logmessage}" }
        remove_field => [ "message" ]
        remove_field => [ "agent" ]
        remove_field => [ "ecs" ]
        remove_field => [ "tags" ]
      }
    }
    output {
      elasticsearch {
        hosts => [ "elasticsearch:9200" ]
        user => "elastic"
        password => "VpzE6s!muV"
        index => "prod-%{[kubernetes][labels][app]}-%{+YYYY_MM_dd}"
      }
    }
  logstash.yml: |-
    http.host: 0.0.0.0
    # The following settings are required if X-Pack is enabled
    xpack.monitoring.enabled: true
    xpack.monitoring.elasticsearch.hosts: ["http://elasticsearch:9200"]
    xpack.monitoring.elasticsearch.username: "elastic"
    xpack.monitoring.elasticsearch.password: "VpzE6s!muV"

[root@k8s01 efk]# kubectl apply -f logstash-config.yaml

configmap/logstash-config configured

Logstash service

[root@k8s01 efk]# vim logstash.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: logstash
  namespace: tools-env
spec:
  replicas: 1
  selector:
    matchLabels:
      app: logstash
  template:
    metadata:
      labels:
        app: logstash
    spec:
      containers:
      - name: logstash
        image: logstash:7.6.2
        imagePullPolicy: IfNotPresent
        volumeMounts:
        - name: config
          mountPath: /usr/share/logstash/pipeline/logstash.conf
          subPath: logstash.conf
        - name: config
          mountPath: /usr/share/logstash/config/logstash.yml
          subPath: logstash.yml
        command:
        - "/bin/sh"
        - "-c"
        - "/usr/share/logstash/bin/logstash -f /usr/share/logstash/pipeline/logstash.conf"
      volumes:
      - name: config
        configMap:
          name: logstash-config
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: logstash
  name: logstash
  namespace: tools-env
spec:
  ports:
  - port: 5044
    targetPort: 5044
  selector:
    app: logstash

[root@k8s01 efk]# kubectl apply -f logstash.yaml

deployment.apps/logstash created

service/logstash created

Deploying Kibana

Kibana configuration file

[root@k8s01 efk]# vim kibana-config.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: kibana-config
  namespace: tools-env
  labels:
    app: kibana
data:
  kibana.yml: |-
    server.name: kibana
    server.host: "0"
    elasticsearch.hosts: [ "http://elasticsearch:9200" ]
    elasticsearch.username: "elastic"
    elasticsearch.password: "VpzE6s!muV"
    xpack.monitoring.ui.container.elasticsearch.enabled: true
    i18n.locale: "zh-CN"

Kibana service

[root@k8s01 efk]# vim kibana.yaml

apiVersion: v1
kind: Service
metadata:
  name: kibana
  namespace: tools-env
  labels:
    app: kibana
spec:
  ports:
  - port: 5601
    nodePort: 30561
  type: NodePort
  selector:
    app: kibana
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kibana
  namespace: tools-env
  labels:
    app: kibana
spec:
  selector:
    matchLabels:
      app: kibana
  template:
    metadata:
      labels:
        app: kibana
    spec:
      containers:
      - name: kibana
        image: kibana:7.6.2
        imagePullPolicy: IfNotPresent
        env:
        - name: ELASTICSEARCH_HOSTS
          value: http://elasticsearch:9200
        ports:
        - containerPort: 5601
        volumeMounts:
        - name: config
          mountPath: /usr/share/kibana/config/kibana.yml
          subPath: kibana.yml
        - name: localtime
          mountPath: /etc/localtime
      volumes:
      - name: config
        configMap:
          name: kibana-config
      - name: localtime
        hostPath:
          path: /usr/share/zoneinfo/Asia/Shanghai

[root@k8s01 efk]# kubectl apply -f kibana-config.yaml

configmap/kibana-config created

[root@k8s01 efk]# kubectl apply -f kibana.yaml

service/kibana created

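deployment.apps/kibana created

Viewing the indices

Elastalert alerting

For detailed Elastalert alerting configuration, see the blog post "ELFK elastalert告警通知" (ELFK Elastalert alert notifications).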
