Monitoring Kubernetes Cluster Services with Prometheus, kube-state-metrics and cAdvisor

System environment

- Operating system: CentOS 7.6
- Docker version: 19.03.5
- Prometheus version: 2.36.0
- Kubernetes version: 1.20.0
- kube-state-metrics version: 2.0.0

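Introduction to cAdvisor and kube-state-metrics

cAdvisor

cAdvisor (Container Advisor) is an open-source container monitoring tool from Google that tracks the resource usage and performance of containers. It runs as a daemon that collects, aggregates, processes and exports information about running containers. For each container it records resource isolation parameters, historical resource usage, histograms of the complete historical resource usage, and network statistics. cAdvisor supports Docker containers natively and tries to support other container types as well. Because it is so useful for monitoring, Kubernetes builds it into the kubelet, so there is no need to deploy cAdvisor separately to expose container information on each node: Prometheus can scrape the metrics endpoint the kubelet already provides.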

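kube-state-metrics

kube-state-metrics is a simple service that listens to the Kubernetes API server and generates metrics about the state of cluster objects. It does not focus on the health of the individual Kubernetes node components, but on the state of the various objects inside the cluster, such as Deployments, Nodes and Pods.

kube-state-metrics generates its metrics directly from Kubernetes API objects and does not modify them. This gives its output the same level of stability as the Kubernetes API objects themselves. In turn, this means that in some cases the values exposed by kube-state-metrics will not match what kubectl shows, because kubectl applies certain heuristics to display readable messages. kube-state-metrics exposes the raw, unmodified data from the Kubernetes API, so users have all the data they need and can apply their own heuristics as required.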
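To make the "raw data" point concrete: kube-state-metrics reports a Pod's phase as one `kube_pod_status_phase` series per possible phase, with value 1 for the current phase and 0 for all others, whereas kubectl prints a single status word. The minimal sketch below uses hypothetical sample values (the pod names `web-0` and `job-1` are made up) to show the kind of small heuristic a consumer applies itself to collapse those series back into one phase.

```python
from typing import Dict, List, Optional, Tuple

# A sample is (labels, value), shaped like kube-state-metrics' kube_pod_status_phase series:
# one series per (pod, phase); value 1 marks the current phase, 0 marks all others.
Sample = Tuple[Dict[str, str], float]

# Hypothetical data for illustration only.
SAMPLES: List[Sample] = [
    ({"namespace": "test-env", "pod": "web-0", "phase": "Pending"}, 0.0),
    ({"namespace": "test-env", "pod": "web-0", "phase": "Running"}, 1.0),
    ({"namespace": "test-env", "pod": "web-0", "phase": "Failed"}, 0.0),
    ({"namespace": "test-env", "pod": "job-1", "phase": "Running"}, 0.0),
    ({"namespace": "test-env", "pod": "job-1", "phase": "Succeeded"}, 1.0),
]


def current_phase(samples: List[Sample], namespace: str, pod: str) -> Optional[str]:
    """Return the phase whose series equals 1 for the given pod, or None if no series does."""
    for labels, value in samples:
        if labels["namespace"] == namespace and labels["pod"] == pod and value == 1.0:
            return labels["phase"]
    return None


if __name__ == "__main__":
    print(current_phase(SAMPLES, "test-env", "web-0"))  # Running
    print(current_phase(SAMPLES, "test-env", "job-1"))  # Succeeded
```

In PromQL the same filter is simply `kube_pod_status_phase == 1`, which keeps only the series of each Pod's current phase.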

Because kube-state-metrics is not bundled with Kubernetes by default, it has to be deployed separately.

Deploying the kube-state-metrics RBAC resources

kube-state-metrics connects to kube-apiserver and calls its APIs to read cluster data, so it needs the corresponding permissions. Kubernetes manages permissions with RBAC by default, so we create RBAC resources for the component in the file kube-state-metrics-rbac.yaml. This example uses the kube-system namespace; if you want to deploy to a different namespace, change the namespace fields in the file first.

```shell
[root@k8s01 kube-state-metrics]# vim kube-state-metrics-rbac.yaml
```

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: kube-state-metrics
  namespace: kube-system
  labels:
    k8s-app: kube-state-metrics
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: kube-state-metrics
  labels:
    k8s-app: kube-state-metrics
rules:
- apiGroups: [""]
  resources: ["configmaps","secrets","nodes","pods",
              "services","resourcequotas",
              "replicationcontrollers","limitranges",
              "persistentvolumeclaims","persistentvolumes",
              "namespaces","endpoints"]
  verbs: ["list","watch"]
- apiGroups: ["extensions"]
  resources: ["daemonsets","deployments","replicasets"]
  verbs: ["list","watch"]
- apiGroups: ["apps"]
  resources: ["statefulsets","daemonsets","deployments","replicasets"]
  verbs: ["list","watch"]
- apiGroups: ["batch"]
  resources: ["cronjobs","jobs"]
  verbs: ["list","watch"]
- apiGroups: ["autoscaling"]
  resources: ["horizontalpodautoscalers"]
  verbs: ["list","watch"]
- apiGroups: ["authentication.k8s.io"]
  resources: ["tokenreviews"]
  verbs: ["create"]
- apiGroups: ["authorization.k8s.io"]
  resources: ["subjectaccessreviews"]
  verbs: ["create"]
- apiGroups: ["policy"]
  resources: ["poddisruptionbudgets"]
  verbs: ["list","watch"]
- apiGroups: ["certificates.k8s.io"]
  resources: ["certificatesigningrequests"]
  verbs: ["list","watch"]
- apiGroups: ["storage.k8s.io"]
  resources: ["storageclasses","volumeattachments"]
  verbs: ["list","watch"]
- apiGroups: ["admissionregistration.k8s.io"]
  resources: ["mutatingwebhookconfigurations","validatingwebhookconfigurations"]
  verbs: ["list","watch"]
- apiGroups: ["networking.k8s.io"]
  resources: ["networkpolicies","ingresses"]
  verbs: ["list","watch"]
- apiGroups: ["coordination.k8s.io"]
  resources: ["leases"]
  verbs: ["list","watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kube-state-metrics
  labels:
    app: kube-state-metrics
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kube-state-metrics
subjects:
- kind: ServiceAccount
  name: kube-state-metrics
  namespace: kube-system
```

```shell
[root@k8s01 kube-state-metrics]# kubectl apply -f kube-state-metrics-rbac.yaml
serviceaccount/kube-state-metrics created
clusterrole.rbac.authorization.k8s.io/kube-state-metrics created
clusterrolebinding.rbac.authorization.k8s.io/kube-state-metrics created
```
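The ClusterRole above grants only `list` and `watch` (plus `create` for the review APIs), which is what kube-state-metrics needs to keep its informers in sync. The minimal sketch below models how an RBAC rule is matched, using a hand-copied subset of the rules as plain dicts. It is a simplification for illustration: it ignores `resourceNames`, subresources and `nonResourceURLs`, and the function names are hypothetical.

```python
from typing import Dict, List

Rule = Dict[str, List[str]]

# A subset of the rules from the ClusterRole above, written as plain dicts.
RULES: List[Rule] = [
    {"apiGroups": [""], "resources": ["pods", "nodes", "services"], "verbs": ["list", "watch"]},
    {"apiGroups": ["apps"], "resources": ["deployments", "daemonsets"], "verbs": ["list", "watch"]},
    {"apiGroups": ["authentication.k8s.io"], "resources": ["tokenreviews"], "verbs": ["create"]},
]


def field_matches(values: List[str], wanted: str) -> bool:
    """A rule field matches when it lists the wanted value or the '*' wildcard."""
    return wanted in values or "*" in values


def is_allowed(rules: List[Rule], api_group: str, resource: str, verb: str) -> bool:
    """RBAC is additive (there are no deny rules): allow if any one rule matches group, resource and verb."""
    return any(
        field_matches(rule["apiGroups"], api_group)
        and field_matches(rule["resources"], resource)
        and field_matches(rule["verbs"], verb)
        for rule in rules
    )


if __name__ == "__main__":
    print(is_allowed(RULES, "", "pods", "list"))      # True: granted by the core-group rule
    print(is_allowed(RULES, "", "pods", "get"))       # False: only list/watch were granted
    print(is_allowed(RULES, "apps", "pods", "list"))  # False: pods live in the core group ""
```

On a live cluster the authoritative check is `kubectl auth can-i list pods --as=system:serviceaccount:kube-system:kube-state-metrics`.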

Deploying kube-state-metrics

Create the kube-state-metrics deployment file kube-state-metrics-deploy.yaml:

```shell
[root@k8s01 kube-state-metrics]# vim kube-state-metrics-deploy.yaml
```

```yaml
apiVersion: v1
kind: Service
metadata:
  name: kube-state-metrics
  namespace: kube-system
  labels:
    k8s-app: kube-state-metrics
    app.kubernetes.io/name: kube-state-metrics  ## do not remove this label: the Prometheus scrape job uses it to discover this Service
spec:
  type: ClusterIP
  ports:
  - name: http-metrics
    port: 8080
    targetPort: 8080
  - name: telemetry
    port: 8081
    targetPort: 8081
  selector:
    k8s-app: kube-state-metrics
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kube-state-metrics
  namespace: kube-system
  labels:
    k8s-app: kube-state-metrics
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: kube-state-metrics
  template:
    metadata:
      labels:
        k8s-app: kube-state-metrics
    spec:
      serviceAccountName: kube-state-metrics
      containers:
      - name: kube-state-metrics
        image: bitnami/kube-state-metrics:2.0.0
        imagePullPolicy: IfNotPresent
        securityContext:
          runAsUser: 65534
        ports:
        - name: http-metrics  ## port that exposes the Kubernetes object metrics
          containerPort: 8080
        - name: telemetry     ## port that exposes kube-state-metrics' own metrics
          containerPort: 8081
        resources:
          limits:
            cpu: 200m
            memory: 200Mi
          requests:
            cpu: 100m
            memory: 100Mi
        livenessProbe:
          httpGet:
            path: /healthz
            port: 8080
          initialDelaySeconds: 5
          timeoutSeconds: 5
        readinessProbe:
          httpGet:
            path: /
            port: 8081
          initialDelaySeconds: 5
          timeoutSeconds: 5
```

```shell
[root@k8s01 kube-state-metrics]# kubectl apply -f kube-state-metrics-deploy.yaml
service/kube-state-metrics created
deployment.apps/kube-state-metrics created
```
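The manifest above is wired together purely by labels: the Service finds its Pods through `spec.selector`, and an apps/v1 Deployment is rejected at validation time if `spec.selector.matchLabels` does not match the Pod template labels. The minimal sketch below models equality-based selector matching with the labels copied from kube-state-metrics-deploy.yaml; the constant and function names are hypothetical, and set-based selectors (`matchExpressions`) are not modeled.

```python
from typing import Dict

# Labels copied from kube-state-metrics-deploy.yaml above.
SERVICE_SELECTOR: Dict[str, str] = {"k8s-app": "kube-state-metrics"}
DEPLOYMENT_MATCH_LABELS: Dict[str, str] = {"k8s-app": "kube-state-metrics"}
POD_TEMPLATE_LABELS: Dict[str, str] = {"k8s-app": "kube-state-metrics"}


def selector_matches(selector: Dict[str, str], labels: Dict[str, str]) -> bool:
    """Equality-based selector: every key/value pair in the selector must appear in labels.

    Extra labels on the object are fine. The special meaning of an empty
    selector on a Service is not modeled here.
    """
    return all(labels.get(key) == value for key, value in selector.items())


if __name__ == "__main__":
    print(selector_matches(SERVICE_SELECTOR, POD_TEMPLATE_LABELS))         # True
    print(selector_matches(DEPLOYMENT_MATCH_LABELS, POD_TEMPLATE_LABELS))  # True
    print(selector_matches({"app": "kube-state-metrics"}, POD_TEMPLATE_LABELS))  # False
```

On the cluster, `kubectl get endpoints kube-state-metrics -n kube-system` should list the Pod IP on ports 8080 and 8081 once the selector matches and the Pod is ready.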

Accessing the metrics exposed by kube-state-metrics

After deploying kube-state-metrics, run the following command on a Kubernetes master node to check that its /metrics endpoint is reachable and returns data:

```shell
[root@k8s01 kube-state-metrics]# curl -kL $(kubectl get service -n kube-system | grep kube-state-metrics |awk '{ print $3 }'):8080/metrics
...
kube_replicaset_labels{namespace="test-env",replicaset="itsm-internet-7fc9f857cc"} 1
kube_replicaset_labels{namespace="test-env",replicaset="itsm-ssec-67d76b75c6"} 1
kube_replicaset_labels{namespace="poc-env",replicaset="itsm2785-59699495f6"} 1
kube_replicaset_labels{namespace="poc-env",replicaset="itsm9166-6459d596f5"} 1
kube_replicaset_labels{namespace="poc-env",replicaset="itsm3671-668d466544"} 1
kube_replicaset_labels{namespace="test-env",replicaset="itsm-itss-6db5d4455"} 1
kube_replicaset_labels{namespace="test-env",replicaset="itsm-finance5-95b5b4f96"} 1
kube_replicaset_labels{namespace="poc-env",replicaset="itsm5532-7f95849f8d"} 1
kube_replicaset_labels{namespace="poc-env",replicaset="itsm4482-76b9bb6f7"} 1
kube_replicaset_labels{namespace="monitoring",replicaset="prometheus-5d4cf98fc8"} 1
kube_replicaset_labels{namespace="test-env",replicaset="jodc-test-dfc5fcd45"} 1
kube_replicaset_labels{namespace="poc-env",replicaset="itsm-cmignc-6dff49b66c"} 1
kube_replicaset_labels{namespace="archery",replicaset="goinception-658888c557"} 1
...
```
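Each line of that output follows the Prometheus text exposition format: a metric name, an optional `{label="value",...}` set, and a sample value. The minimal sketch below parses such lines with regular expressions and counts ReplicaSets per namespace, using a few lines from the output above. It is a simplified parser that assumes label values contain no escaped quotes or closing braces; for real use, the official `prometheus_client` package ships a full parser (`prometheus_client.parser.text_string_to_metric_families`).

```python
import re
from collections import Counter
from typing import Dict, Iterable, Tuple

# name, optional {labels}, value, optional timestamp.
LINE_RE = re.compile(
    r'^(?P<name>[a-zA-Z_:][a-zA-Z0-9_:]*)'
    r'(?:\{(?P<labels>[^}]*)\})?'
    r'\s+(?P<value>\S+)(?:\s+\d+)?$'
)
LABEL_RE = re.compile(r'([a-zA-Z_][a-zA-Z0-9_]*)="([^"]*)"')

# A few lines taken from the curl output above.
SAMPLE = """kube_replicaset_labels{namespace="test-env",replicaset="itsm-internet-7fc9f857cc"} 1
kube_replicaset_labels{namespace="test-env",replicaset="itsm-ssec-67d76b75c6"} 1
kube_replicaset_labels{namespace="poc-env",replicaset="itsm2785-59699495f6"} 1
kube_replicaset_labels{namespace="monitoring",replicaset="prometheus-5d4cf98fc8"} 1
"""


def parse_line(line: str) -> Tuple[str, Dict[str, str], float]:
    """Split one sample line into (metric name, labels, value)."""
    match = LINE_RE.match(line.strip())
    if match is None:
        raise ValueError(f"unsupported line: {line!r}")
    labels = dict(LABEL_RE.findall(match.group("labels") or ""))
    return match.group("name"), labels, float(match.group("value"))


def count_by_namespace(lines: Iterable[str], metric: str) -> Counter:
    """Count the series of one metric per value of the namespace label."""
    counts: Counter = Counter()
    for line in lines:
        if not line.strip() or line.startswith("#"):
            continue  # skip blank lines and # HELP / # TYPE comments
        name, labels, _ = parse_line(line)
        if name == metric:
            counts[labels.get("namespace", "")] += 1
    return counts


if __name__ == "__main__":
    print(dict(count_by_namespace(SAMPLE.splitlines(), "kube_replicaset_labels")))
    # {'test-env': 2, 'poc-env': 1, 'monitoring': 1}
```

Once Prometheus scrapes the endpoint, the equivalent query is `count by (namespace) (kube_replicaset_labels)`.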

Adding a cAdvisor scrape job to Prometheus


Because cAdvisor is already built into the kubelet, it does not need to be deployed. Prometheus does, however, still need a scrape job for it. Add the following cAdvisor job to the Prometheus configuration file:

```yaml
- job_name: 'kubernetes-cadvisor'
  scheme: https
  metrics_path: /metrics/cadvisor
  tls_config:
    ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
  bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
  kubernetes_sd_configs:
  - role: node
  relabel_configs:
  - action: labelmap
    regex: __meta_kubernetes_node_label_(.+)
  - target_label: __address__
    replacement: kubernetes.default.svc:443
  - source_labels: [__meta_kubernetes_node_name]
    target_label: __metrics_path__
    replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor
  ## The rules below only adapt labels to the Grafana dashboard used here (ID 13105); other dashboards need different rules, which are not covered here
  metric_relabel_configs:
  - source_labels: [instance]
    separator: ;
    regex: (.+)
    target_label: node
    replacement: $1
    action: replace
  - source_labels: [pod_name]
    separator: ;
    regex: (.+)
    target_label: pod
    replacement: $1
    action: replace
  - source_labels: [container_name]
    separator: ;
    regex: (.+)
    target_label: container
    replacement: $1
    action: replace
```

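Some of the parameters above:

- kubernetes_sd_configs: use Kubernetes service discovery to find scrape targets dynamically.
- kubernetes_sd_configs.role: the discovery role. With `node`, Prometheus discovers one target per cluster node and exposes each node's name, addresses and labels as `__meta_kubernetes_node_*` labels. The relabel rules then send every scrape to kube-apiserver, which proxies it to that node's kubelet at /metrics/cadvisor; for this to work, the ServiceAccount Prometheus runs as must be allowed to access the `nodes/proxy` resource.
- tls_config.ca_file: the CA certificate used to verify kube-apiserver's serving certificate.
- bearer_token_file: the file containing the token Prometheus presents to kube-apiserver for authentication.
- relabel_configs: rules that rewrite the target's labels before it is scraped.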
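The relabel rules above can be traced by hand. The minimal sketch below re-implements a simplified version of Prometheus's `replace` and `labelmap` actions (fully anchored regex, `$1`/`${1}` references) and applies the job's rules, in order, to hypothetical discovery labels for a node named `k8s01`. It then applies the `metric_relabel_configs` to one hypothetical scraped series. The node name, addresses and function names are assumptions for illustration, and other actions (`keep`, `drop`, `hashmod`, ...) are not modeled.

```python
import re
from typing import Dict, Sequence

Labels = Dict[str, str]


def to_python_template(replacement: str) -> str:
    """Translate Prometheus-style $1 / ${1} group references into Python's \\g<1> syntax."""
    return re.sub(r"\$\{?(\d+)\}?", r"\\g<\1>", replacement)


def replace(labels: Labels, target_label: str, replacement: str,
            source_labels: Sequence[str] = (), regex: str = "(.*)",
            separator: str = ";") -> Labels:
    """action: replace. The regex is fully anchored as in Prometheus; no match leaves labels unchanged."""
    value = separator.join(labels.get(name, "") for name in source_labels)
    match = re.fullmatch(regex, value)
    if match is None:
        return labels
    out = dict(labels)
    out[target_label] = match.expand(to_python_template(replacement))
    return out


def labelmap(labels: Labels, regex: str) -> Labels:
    """action: labelmap with the default replacement $1: copy each matching label to a new name."""
    out = dict(labels)
    for name, value in labels.items():
        match = re.fullmatch(regex, name)
        if match:
            out[match.group(1)] = value
    return out


# Hypothetical labels that node-role discovery might produce for a node named k8s01.
discovered: Labels = {
    "__address__": "10.0.0.11:10250",
    "instance": "k8s01",
    "__meta_kubernetes_node_name": "k8s01",
    "__meta_kubernetes_node_label_kubernetes_io_hostname": "k8s01",
}

# relabel_configs of the kubernetes-cadvisor job, applied in order.
target = labelmap(discovered, r"__meta_kubernetes_node_label_(.+)")
target = replace(target, "__address__", "kubernetes.default.svc:443")
target = replace(target, "__metrics_path__", "/api/v1/nodes/${1}/proxy/metrics/cadvisor",
                 source_labels=["__meta_kubernetes_node_name"])
scrape_url = "https://" + target["__address__"] + target["__metrics_path__"]

# metric_relabel_configs applied to one hypothetical scraped series (pod_name is absent).
series: Labels = {"__name__": "container_memory_working_set_bytes", "instance": "k8s01", "pod": "web-0"}
series = replace(series, "node", "$1", source_labels=["instance"], regex="(.+)")
series = replace(series, "pod", "$1", source_labels=["pod_name"], regex="(.+)")

if __name__ == "__main__":
    print(scrape_url)  # https://kubernetes.default.svc:443/api/v1/nodes/k8s01/proxy/metrics/cadvisor
    print(series["node"], series["pod"])  # k8s01 web-0
```

Note how the `pod_name` rule is a no-op when the series carries no `pod_name` label, because `(.+)` does not match an empty string. The Prometheus UI's Status > Service Discovery page shows the real before/after labels for every discovered target.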

Adding a kube-state-metrics scrape job to Prometheus


With kube-state-metrics now deployed in Kubernetes, add a scrape job for its metrics to the Prometheus configuration file:

```yaml
- job_name: "kube-state-metrics"
  kubernetes_sd_configs:
  - role: endpoints
    ## Namespace in which kube-state-metrics is deployed
    namespaces:
      names: ["kube-system"]
  relabel_configs:
  ## Only keep targets that belong to a Service whose app.kubernetes.io/name label equals kube-state-metrics
  - action: keep
    source_labels: [__meta_kubernetes_service_label_app_kubernetes_io_name]
    regex: kube-state-metrics
  ## The rules below also adapt labels to the Grafana dashboard template (ID 13105)
  - action: labelmap
    regex: __meta_kubernetes_service_label_(.+)
  - action: replace
    source_labels: [__meta_kubernetes_namespace]
    target_label: k8s_namespace
  - action: replace
    source_labels: [__meta_kubernetes_service_name]
    target_label: k8s_sname
```

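Some of the parameters above:

- kubernetes_sd_configs: use Kubernetes service discovery to find scrape targets dynamically.
- kubernetes_sd_configs.role: the discovery role. With `endpoints`, Prometheus reads Endpoints objects through kube-apiserver and creates one target per endpoint port, which it then scrapes directly at the Pod IP. Combined with the namespace restriction, only Endpoints in kube-system, where kube-state-metrics runs, are discovered.
- kubernetes_sd_configs.namespaces.names: limits endpoints discovery to the listed namespaces.
- relabel_configs: rules that rewrite the target's labels before it is scraped.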
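The `keep` rule works because Prometheus exposes every Service label as a meta label, with characters such as `.` and `/` replaced by `_`, so `app.kubernetes.io/name` becomes `__meta_kubernetes_service_label_app_kubernetes_io_name`. The minimal sketch below applies the job's rules to hypothetical endpoints-discovery results (the kube-dns entry, Pod IPs and function name are made up for illustration). It also shows a side effect of the configuration: both Service ports survive the `keep` rule, so the job scrapes kube-state-metrics' own telemetry port as well. If you only want the object metrics, one option would be an extra `keep` rule on `__meta_kubernetes_endpoint_port_name` with regex `http-metrics`.

```python
import re
from typing import Dict, List

Labels = Dict[str, str]

# Hypothetical targets from endpoints-role discovery in kube-system:
# one target per endpoint port, addressed at the Pod IP.
DISCOVERED: List[Labels] = [
    {"__address__": "10.244.1.5:53",
     "__meta_kubernetes_namespace": "kube-system",
     "__meta_kubernetes_service_name": "kube-dns",
     "__meta_kubernetes_service_label_k8s_app": "kube-dns",
     "__meta_kubernetes_endpoint_port_name": "dns"},
    {"__address__": "10.244.2.7:8080",
     "__meta_kubernetes_namespace": "kube-system",
     "__meta_kubernetes_service_name": "kube-state-metrics",
     "__meta_kubernetes_service_label_k8s_app": "kube-state-metrics",
     "__meta_kubernetes_service_label_app_kubernetes_io_name": "kube-state-metrics",
     "__meta_kubernetes_endpoint_port_name": "http-metrics"},
    {"__address__": "10.244.2.7:8081",
     "__meta_kubernetes_namespace": "kube-system",
     "__meta_kubernetes_service_name": "kube-state-metrics",
     "__meta_kubernetes_service_label_k8s_app": "kube-state-metrics",
     "__meta_kubernetes_service_label_app_kubernetes_io_name": "kube-state-metrics",
     "__meta_kubernetes_endpoint_port_name": "telemetry"},
]


def relabel_ksm(targets: List[Labels]) -> List[Labels]:
    """Apply the kube-state-metrics job's relabel_configs, in order, to each target."""
    result = []
    for labels in targets:
        # keep: drop the target unless the label value fully matches the regex.
        if not re.fullmatch("kube-state-metrics",
                            labels.get("__meta_kubernetes_service_label_app_kubernetes_io_name", "")):
            continue
        out = dict(labels)
        # labelmap: __meta_kubernetes_service_label_<name> -> <name>
        for name, value in labels.items():
            match = re.fullmatch(r"__meta_kubernetes_service_label_(.+)", name)
            if match:
                out[match.group(1)] = value
        # replace with the default regex (.*) and replacement $1: plain copies.
        out["k8s_namespace"] = labels["__meta_kubernetes_namespace"]
        out["k8s_sname"] = labels["__meta_kubernetes_service_name"]
        # Meta labels are discarded once relabeling is finished.
        result.append({k: v for k, v in out.items() if not k.startswith("__meta_")})
    return result


if __name__ == "__main__":
    for target in relabel_ksm(DISCOVERED):
        print(target["__address__"], target["k8s_sname"])
    # 10.244.2.7:8080 kube-state-metrics
    # 10.244.2.7:8081 kube-state-metrics
```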

Storing the Prometheus configuration in a ConfigMap

Put the Prometheus configuration above into the Kubernetes ConfigMap manifest prometheus-config.yaml:

```shell
[root@k8s01 prometheus]# cat prometheus-config.yaml
```

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-config
  namespace: monitoring
data:
  prometheus.yml: |
    global:
      scrape_interval: 15s
      evaluation_interval: 15s
      external_labels:
        cluster: "kubernetes"
    scrape_configs:
    ...
    ############################ kubernetes-cadvisor ##############################
    - job_name: 'kubernetes-cadvisor'
      scheme: https
      metrics_path: /metrics/cadvisor
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      kubernetes_sd_configs:
      - role: node
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      - target_label: __address__
        replacement: kubernetes.default.svc:443
      - source_labels: [__meta_kubernetes_node_name]
        target_label: __metrics_path__
        replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor
      metric_relabel_configs:
      - source_labels: [instance]
        separator: ;
        regex: (.+)
        target_label: node
        replacement: $1
        action: replace
      - source_labels: [pod_name]
        separator: ;
        regex: (.+)
        target_label: pod
        replacement: $1
        action: replace
      - source_labels: [container_name]
        separator: ;
        regex: (.+)
        target_label: container
        replacement: $1
        action: replace
    ############################ kube-state-metrics ##############################
    - job_name: "kube-state-metrics"
      kubernetes_sd_configs:
      - role: endpoints
        namespaces:
          names: ["kube-system"]
      relabel_configs:
      - action: keep
        source_labels: [__meta_kubernetes_service_label_app_kubernetes_io_name]
        regex: kube-state-metrics
      - action: labelmap
        regex: __meta_kubernetes_service_label_(.+)
      - action: replace
        source_labels: [__meta_kubernetes_namespace]
        target_label: k8s_namespace
      - action: replace
        source_labels: [__meta_kubernetes_service_name]
        target_label: k8s_sname
```

```shell
[root@k8s01 prometheus]# kubectl apply -f prometheus-config.yaml
configmap/prometheus-config configured
```

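Reloading the Prometheus configuration

Trigger a reload through Prometheus's HTTP API. The /-/reload endpoint is only enabled when Prometheus is started with the --web.enable-lifecycle flag. Note also that the kubelet refreshes mounted ConfigMaps periodically, so the updated file can take up to the kubelet sync period (one minute by default) plus the cache propagation delay to appear inside the Prometheus Pod; a reload sent before then still reads the old configuration.

```shell
[root@k8s01 prometheus]# curl -XPOST http://10.x.x.x:30089/-/reload
```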
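If you prefer to script the reload, for example as a follow-up step after `kubectl apply`, the minimal sketch below does the same as the curl call using only Python's standard library. The function names and the example base URL are hypothetical, and it assumes Prometheus runs with `--web.enable-lifecycle`; otherwise the request fails with an HTTP error that `urlopen` raises as `urllib.error.HTTPError`.

```python
import urllib.request


def build_reload_request(base_url: str) -> urllib.request.Request:
    """Build the POST /-/reload request (served only when --web.enable-lifecycle is set)."""
    return urllib.request.Request(base_url.rstrip("/") + "/-/reload", data=b"", method="POST")


def reload_prometheus(base_url: str, timeout: float = 10.0) -> int:
    """Send the reload request and return the HTTP status code (200 on success).

    urlopen raises urllib.error.HTTPError for non-2xx responses.
    """
    with urllib.request.urlopen(build_reload_request(base_url), timeout=timeout) as resp:
        return resp.status


if __name__ == "__main__":
    req = build_reload_request("http://prometheus.example:9090/")
    print(req.get_method(), req.full_url)  # POST http://prometheus.example:9090/-/reload
```

Against the NodePort used above, the call would be `reload_prometheus("http://<node-ip>:30089")`, with `<node-ip>` replaced by your node address.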

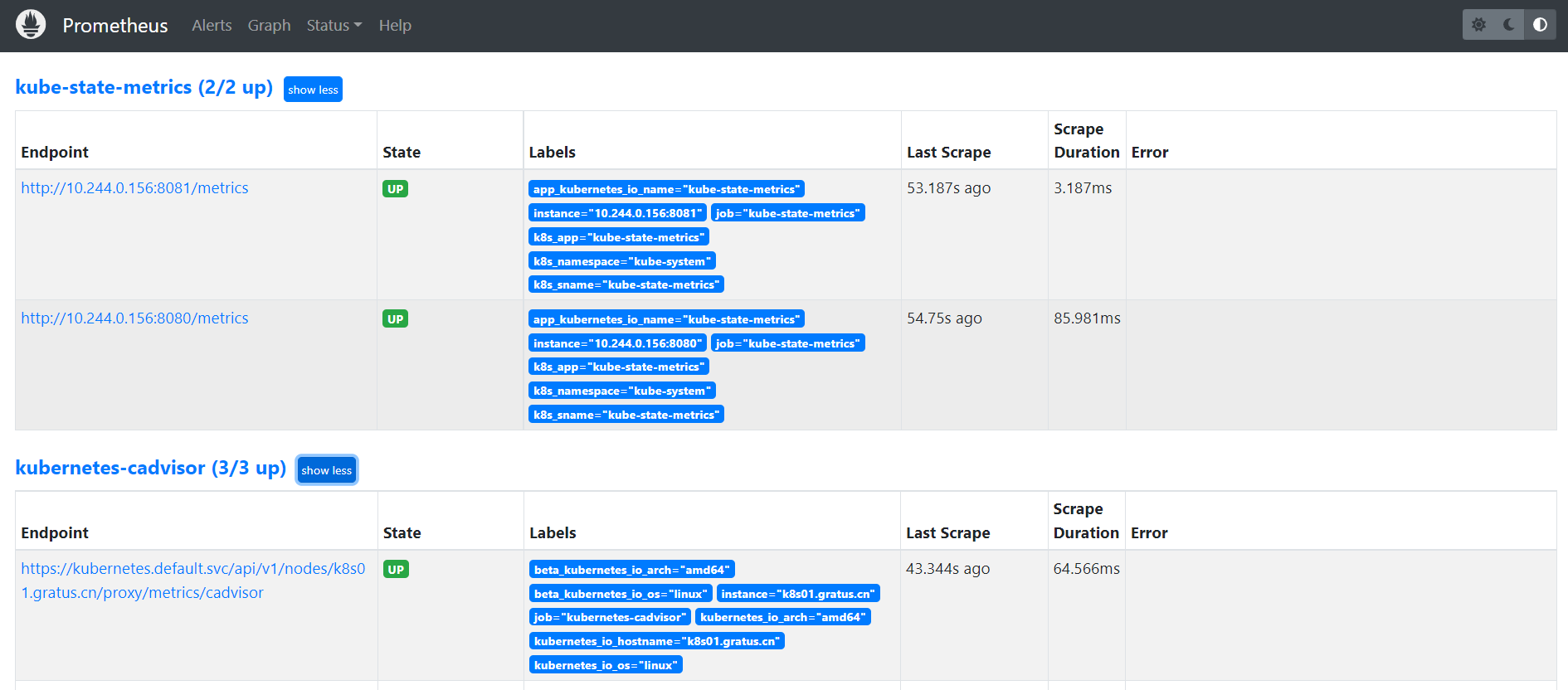

Checking the Prometheus UI

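Importing the Kubernetes monitoring dashboard into Grafana

In Grafana's left sidebar, choose the Manage menu, then click the Import button at the top right. Enter 13105 as the dashboard ID to import the Kubernetes monitoring template and click Load. On the configuration page select the Prometheus data source, then click Import to open the dashboard.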
